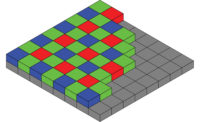

A set of standard ceramic color patches used to calibrate a color machine vision system. Source: Ceram Research Ltd.

Making reliable color measurements is difficult because our color perception is influenced by factors such as the size and surroundings of the color area being measured, the illumination spectrum, visual adaptation, age, experience, fatigue and genetic differences. Machine vision systems can make reliable, repeatable color measurements, so they are replacing human inspectors in monitoring product color. A machine vision system can be calibrated to a standard based on human vision, or it can be used to compare product colors to reference colors without calibration. Here we’ll discuss how human color perception is measured, how a machine vision system is calibrated to these measures of human perception, and the advantages of “comparative” color methods for monitoring product colors.

Comparative color monitoring assures that the right color (magenta or green) mouthwash is put in the right product bottle. Source: Teledyne DALSA Industrial Products Inc.

Measuring Human Color Perception

Our eyes have, if you are not color blind, three types of color photoreceptors with different spectral responses. The receptor types are called Long-, Medium- and Short-wavelength (LMS) receptors, based on their peak spectral responses of roughly 570, 545, and 430 nanometers (nm). The receptor responses are primaries that define a 3-D physical color space, with the primaries as the color space’s axes. Each color is a combination of one or more of the primaries and is represented by a point in the color space. The photoreceptors’ outputs are processed in the eye and brain to give our perceptions of color. This processing adjusts our color perception for changes in the environment, which helps us find and identify objects by their color in different lighting and settings. For example, a ripe banana looks about the same shade of yellow outdoors in sunlight and indoors under fluorescent light.

To get stable measures of human color perception, we must “factor out” this processing, so we present observers with carefully controlled color patches of a specific size in uniform, controlled surroundings. Using many comparisons of color patches, we can map a perceptual color space in which each perceived color is a point. The CIE XYZ perceptual color space is derived from, and spans, the LMS primaries. The XYZ color space can be converted (mapped) into the CIE L*a*b* color space, which is more perceptually uniform than XYZ. In a perceptually uniform color space, the minimum detectable change in color corresponds to the same distance everywhere in the space. Paints, pigments, and colorants are often specified in L*a*b* space.
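The XYZ-to-L*a*b* mapping is a fixed formula relative to a reference white, and color differences in L*a*b* are usually taken as Euclidean distances (ΔE*ab, the CIE 1976 formula). The sketch below assumes a D65 reference white with Y scaled to 100; another illuminant would need its own white point.

```python
import math

# Assumed reference white: D65, with Y normalized to 100
D65_WHITE = (95.047, 100.0, 108.883)

def _f(t: float) -> float:
    """CIE L*a*b* companding function (cube root with a linear toe near black)."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0

def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert CIE XYZ to CIE L*a*b* relative to the given reference white."""
    fx, fy, fz = (_f(c / n) for c, n in zip(xyz, white))
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return (L, a, b)

def delta_e76(lab1, lab2) -> float:
    """CIE 1976 color difference: straight-line distance in L*a*b* space."""
    return math.dist(lab1, lab2)

reference = xyz_to_lab((41.24, 21.26, 1.93))
sample = xyz_to_lab((40.0, 21.0, 2.5))
print(delta_e76(reference, sample))
```

CIE76 is the simplest difference formula; later formulas such as CIEDE2000 correct for the non-uniformity that remains in L*a*b*.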

Vegetables’ colors are plotted in a red, green and blue physical color space (right). Source: Teledyne DALSA Industrial Products Inc.

Calibrating Machine Vision to Human Color Vision

If product colors are specified in a perceptual color space, then we have to calibrate the machine vision system’s physical color space values to the specified perceptual color space values. To do this, we try to find a calibration formula that converts the camera’s color space to, say, XYZ color space. First, consider a colorimeter, a pre-calibrated instrument for making color measurements. A colorimeter uses a light source with a known spectral distribution and has three sensors with filters that give spectral responses similar to human LMS responses. From these responses it computes XYZ or L*a*b* perceptual color measures. A colorimeter averages color over a small field of view and is usually in contact with the object being measured, to block out stray light.

A colorimeter is easy to use but impractical for most machine vision applications: the color averaging erases small color patterns or defects, we generally can’t touch the object being measured, and scanning a colorimeter over the object takes too much time. So colorimeters are used for “spot checking” objects’ colors and for calibrating color machine vision systems.

An instrument’s color signals are the product of the illumination spectrum, the object’s reflectance spectrum, and the photoreceptors’ spectral responses, integrated over wavelength. We want the object’s reflectance, independent of the illumination and photoreceptor responses. A colorimeter achieves this by controlling the illumination and photoreceptor responses, but these factors are not well controlled in most machine vision systems.
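Numerically, each channel signal is approximately the sum over wavelength of E(λ)·R(λ)·S(λ)·Δλ, where E is the illumination, R the object reflectance and S the channel’s spectral sensitivity. The sketch below uses hypothetical bell-shaped curves sampled every 10 nm (not real sensor or lamp data) to show that the same object gives a different balance of signals under two different lights.

```python
import math

WAVELENGTHS = list(range(400, 701, 10))  # nm; coarse 10 nm sampling, for illustration

def gaussian(peak_nm, width_nm):
    """Hypothetical bell-shaped spectral curve sampled at WAVELENGTHS."""
    return [math.exp(-0.5 * ((w - peak_nm) / width_nm) ** 2) for w in WAVELENGTHS]

def channel_signal(illum, reflectance, sensitivity, step_nm=10):
    """Riemann-sum approximation of the integral of E(l) * R(l) * S(l) over wavelength."""
    return sum(e * r * s for e, r, s in zip(illum, reflectance, sensitivity)) * step_nm

# Hypothetical sensitivities loosely peaked at the LMS wavelengths quoted above
SENSORS = {"L": gaussian(570, 40), "M": gaussian(545, 40), "S": gaussian(430, 25)}

flat_white_light = [1.0] * len(WAVELENGTHS)
warm_light = [0.3 + 0.7 * (w - 400) / 300 for w in WAVELENGTHS]  # assumed: rises toward red
blue_green_object = gaussian(490, 30)                              # assumed reflectance

for name, light in [("flat", flat_white_light), ("warm", warm_light)]:
    signals = {k: channel_signal(light, blue_green_object, s) for k, s in SENSORS.items()}
    print(name, {k: round(v, 1) for k, v in signals.items()})
```

A colorimeter fixes E and S, so its signals change only when R changes; a machine vision system usually has to live with whatever E it gets.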

In a color machine vision system, colored filters are used to make photoreceptors with peak sensitivities in the red, green and blue (RGB) parts of the spectrum to form a physical color space similar to the LMS color space. The RGB values are processed both in-camera and post-camera (in the machine vision system’s computer) to transform them into, say, XYZ values.

To reduce cost, most color cameras use a mosaic of red, green and blue color filters over the photoreceptors (pixels) and interpolate neighboring color values to give RGB values at each pixel. This interpolation causes artifacts (wrong colors) in the image, particularly at transitions between colored areas. If you are monitoring densely textured objects such as cloth, you might need a more expensive camera that uses three sensor arrays and no interpolation.
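To see where the wrong colors come from, the sketch below uses a deliberately simplified, hypothetical one-row sensor whose filters repeat R, G, B (real cameras use a 2-D pattern such as Bayer) and fills each pixel’s missing channels by linear interpolation between the nearest same-color pixels. A sharp white-to-black edge, which contains no color at all, comes out with tinted pixels next to the edge.

```python
def capture(scene):
    """Each pixel keeps only the channel its filter passes (channel = i % 3: R, G, B)."""
    return [pixel[i % 3] for i, pixel in enumerate(scene)]

def demosaic(raw):
    """Fill each pixel's two missing channels by linear interpolation between the
    nearest same-filter pixels on either side (nearest one only at the row ends)."""
    n = len(raw)
    out = []
    for i in range(n):
        rgb = [0.0, 0.0, 0.0]
        for ch in range(3):
            if i % 3 == ch:
                rgb[ch] = raw[i]  # measured directly
                continue
            left = next((j for j in range(i - 1, -1, -1) if j % 3 == ch), None)
            right = next((j for j in range(i + 1, n) if j % 3 == ch), None)
            if left is None:
                rgb[ch] = raw[right]
            elif right is None:
                rgb[ch] = raw[left]
            else:
                t = (i - left) / (right - left)
                rgb[ch] = (1 - t) * raw[left] + t * raw[right]
        out.append(tuple(rgb))
    return out

scene = [(1.0, 1.0, 1.0)] * 6 + [(0.0, 0.0, 0.0)] * 6  # white | black edge
for i, (r, g, b) in enumerate(demosaic(capture(scene))):
    print(i, round(r, 2), round(g, 2), round(b, 2))
```

A three-sensor camera measures all three channels at every pixel, so it needs no `demosaic` step and produces no such fringes.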

Next, let’s look at typical in-camera processing. The amplitude and range of the photoreceptor signals are set using gain and offset controls. The signals are then digitized at 10- to 12-bit resolution, even though the camera output is usually 8-bit. The extra resolution inside the camera reduces round-off errors and clipping during in-camera processing.
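The benefit of extra internal bits can be shown with a toy chain: apply a gain and then undo it, rounding to integers after each step. The gain of 0.6 is a hypothetical stand-in for in-camera steps that partially cancel. At 8 bits, rounding merges neighboring input levels; carried out on 12-bit values and reduced to 8 bits only at the end, every input level survives.

```python
def round_trip_8bit(x, gain=0.6):
    """Apply a gain and then undo it, rounding to integers at 8-bit scale after each step."""
    y = round(x * gain)
    return round(y / gain)

def round_trip_12bit(x, gain=0.6):
    """The same two steps on 12-bit values (x * 16), reduced to 8 bits only at the output."""
    x12 = x * 16
    y12 = round(x12 * gain)
    z12 = round(y12 / gain)
    return round(z12 / 16)

levels_8 = {round_trip_8bit(x) for x in range(256)}
levels_12 = {round_trip_12bit(x) for x in range(256)}
print(len(levels_8), len(levels_12))  # distinct output levels out of 256 inputs
```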

Gamma correction nonlinearly compresses the image intensity range, but I don’t recommend using it for machine vision, because the resulting signals are no longer proportional to light intensity. Interpolation fills in the color components missing from each pixel due to the color filter mosaic. Three look-up tables (function tables) adjust the intensity responses of the red, green and blue channels (the separate red, green and blue images). A 3 x 3 matrix multiplier can convert the RGB values to another, linear color space. Post-camera processing can be more accurate than in-camera processing and can include additional color space conversions, but at the expense of computation time.
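A minimal sketch of the look-up-table and matrix stages follows. The per-channel black levels are hypothetical, and the matrix is the standard linear-sRGB (D65) to XYZ matrix, used only as a stand-in: a real camera’s matrix would come from calibration against its own spectral responses.

```python
# Standard linear-sRGB (D65) to XYZ matrix, standing in for a camera-specific,
# calibrated matrix
RGB_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]

def make_lut(black_level):
    """Hypothetical per-channel LUT: subtract a black level and scale 8-bit codes to 0..1."""
    span = 255 - black_level
    return [max(0, v - black_level) / span for v in range(256)]

LUTS = (make_lut(4), make_lut(6), make_lut(5))  # assumed R, G, B black levels

def camera_rgb_to_xyz(rgb8):
    """Apply one LUT per channel, then a 3 x 3 matrix multiply."""
    lin = [lut[v] for lut, v in zip(LUTS, rgb8)]
    return tuple(sum(m * c for m, c in zip(row, lin)) for row in RGB_TO_XYZ)

print(camera_rgb_to_xyz((255, 255, 255)))  # full-scale white
```

The LUTs here stay linear in light intensity, which is what the matrix step assumes; a gamma curve in the LUTs would break that assumption.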

For calibration, we want a formula that takes RGB values and generates closely corresponding XYZ values. This formula may be implemented by in-camera processing, post-camera processing, or both. The machine vision system views a color calibration chart containing color patches (including gray patches) with known XYZ values, and we measure the system’s RGB outputs for each of these standard patches. We then use these measurement pairs (RGB and XYZ) to set the formula’s parameters so as to minimize the total error over all measurement pairs. This minimization can be automated, for example with a least-squares fit.
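One common way to automate the fit is linear least squares on a 3 x 3 matrix plus an offset, sketched below with NumPy. The chart data here are synthetic (random RGB values pushed through an assumed matrix, plus a little noise); real XYZ values would come from the chart’s data sheet or a colorimeter.

```python
import numpy as np

def fit_rgb_to_xyz(rgb, xyz):
    """Least-squares fit of xyz ~= [rgb, 1] @ A for calibration-chart patches.

    rgb, xyz: arrays of shape (n_patches, 3).
    Returns A with shape (4, 3): rows 0-2 hold the transposed 3 x 3 matrix, row 3 the offset.
    """
    design = np.hstack([rgb, np.ones((rgb.shape[0], 1))])
    A, *_ = np.linalg.lstsq(design, xyz, rcond=None)
    return A

def apply_fit(A, rgb):
    """Convert RGB rows to XYZ with the fitted matrix and offset."""
    return np.hstack([rgb, np.ones((rgb.shape[0], 1))]) @ A

# Synthetic stand-in for a 24-patch chart
rng = np.random.default_rng(0)
true_M = np.array([[0.41, 0.36, 0.18], [0.21, 0.72, 0.07], [0.02, 0.12, 0.95]])
chart_rgb = rng.uniform(0.05, 0.95, size=(24, 3))
chart_xyz = chart_rgb @ true_M.T + rng.normal(0, 0.002, size=(24, 3))

A = fit_rgb_to_xyz(chart_rgb, chart_xyz)
residual = apply_fit(A, chart_rgb) - chart_xyz
print("RMS error:", np.sqrt(np.mean(residual ** 2)))
```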

If the vision system were linear, and the viewing conditions, including the illumination, matched the conditions under which XYZ space was defined, calibration would be easy: trial and error or automated minimization would converge smoothly to near-optimal parameters. Unfortunately, this is not the case, and even the best-fit parameters leave significant errors. Adjusting the parameters to reduce the error for one measurement pair often increases the error for others, rather than reducing the overall error.
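The effect of nonlinearity can be demonstrated with synthetic data. In the sketch below a hypothetical camera applies a 1/2.2 power law to otherwise linear signals. A linear (matrix plus offset) fit then cannot match every patch, however the parameters are chosen; once the signals are linearized, the same fit is essentially exact.

```python
import numpy as np

def affine_fit_errors(rgb, xyz):
    """Fit xyz ~= [rgb, 1] @ A by least squares and return each patch's error magnitude."""
    design = np.hstack([rgb, np.ones((len(rgb), 1))])
    A, *_ = np.linalg.lstsq(design, xyz, rcond=None)
    return np.linalg.norm(design @ A - xyz, axis=1)

rng = np.random.default_rng(1)
M = np.array([[0.41, 0.36, 0.18], [0.21, 0.72, 0.07], [0.02, 0.12, 0.95]])  # assumed
linear_rgb = rng.uniform(0.02, 1.0, size=(24, 3))   # synthetic scene values
xyz = linear_rgb @ M.T                              # "known" chart XYZ, noise-free
camera_rgb = linear_rgb ** (1 / 2.2)                # hypothetical nonlinear camera

err_nonlinear = affine_fit_errors(camera_rgb, xyz)
err_linearized = affine_fit_errors(camera_rgb ** 2.2, xyz)
print("worst patch error, nonlinear: ", err_nonlinear.max())
print("worst patch error, linearized:", err_linearized.max())
```

Real cameras deviate from linearity in less tidy ways than a single power law, and the viewing conditions never match the XYZ definitions exactly, so some residual error usually remains even after linearization.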

This is not to say that you can’t calibrate a color machine vision system, only that the precision of the calibration might be less than you would like and the effort might be more than you want. In a moment we will turn to comparative methods that can do many color monitoring tasks and don’t require calibration. I’ll finish this section with some things that mess up color calibration.

The object’s reflectance spectrum is multiplied by the illumination spectrum, making it difficult to recover the object’s color. For example, the spectrum of “white” LEDs decreases to 10% around 475 nm, making blue-green colors very dark, so the vision system might have trouble deciding what color any blue-green parts are. A more vexing problem for calibration is that the illumination is usually not uniform across the image, so calibration to gray or color levels in one part of the image might not hold in another part. There are ways to divide or subtract out illumination non-uniformity, but they are generally not implemented in machine vision area-scan cameras, although they are in some line-scan cameras.
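When the camera does not remove illumination non-uniformity, it can be divided out post-camera. The sketch below shows the usual flat-field approach, assuming you can capture a dark frame (lens capped) and a flat frame (a uniform white or gray target under the production lighting). The synthetic light falloff and gray part are for illustration only.

```python
import numpy as np

def flat_field_correct(raw, dark, flat):
    """Divide out illumination (and pixel-gain) non-uniformity.

    raw:  image of the product, shape (H, W) or (H, W, 3)
    dark: image taken with the lens capped, same shape
    flat: image of a uniform target under the same lighting, same shape
    """
    raw, dark, flat = (np.asarray(a, dtype=float) for a in (raw, dark, flat))
    gain = flat - dark
    # Rescale by the mean flat response (per channel) to keep the overall brightness
    target = gain.mean(axis=(0, 1), keepdims=True)
    return (raw - dark) * target / np.maximum(gain, 1e-6)

# Synthetic example: light falls off from left to right across a uniform gray part
illum = np.linspace(1.0, 0.6, 64)[None, :] * np.ones((48, 1))
dark = np.full((48, 64), 2.0)
flat = dark + 200 * illum
raw = dark + 200 * illum * 0.5          # 50% gray part
corrected = flat_field_correct(raw, dark, flat)
print(raw[0, 0], raw[0, -1], corrected[0, 0], corrected[0, -1])
```

The flat frame has to be recaptured whenever the lighting or optics change, since it records exactly that setup.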

The gamut (the range of colors a device can measure or represent) of an RGB camera is different from that of the LMS receptors. This means that there may be colors that we can see but the camera can’t, and vice versa. So calibration can be difficult, because you are trying to match two differently “shaped” color spaces.

Photoreceptor responses differ from camera to camera, so you can’t calibrate one camera and reuse its calibration parameters in other cameras. If a camera fails in the field, you will probably have to calibrate the replacement camera. I have already mentioned the color artifacts generated by interpolating across the camera’s color filter mosaic, and the solution of using a three-sensor camera.

The in-camera processing has limited resolution, which increases image noise, and limited range, which can sometimes clip the results of a color space conversion. Both issues can be avoided by using higher-resolution post-camera processing, at the expense of increased computation time.
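Clipping is easy to provoke: conversion matrices often have negative off-diagonal terms, so a strongly saturated color can map to values below 0 or above full scale. In the sketch below (with a hypothetical matrix and pixel), an 8-bit pipeline clips those values and the original color can no longer be recovered, while a floating-point post-camera pipeline keeps them.

```python
import numpy as np

# Hypothetical 3 x 3 conversion with negative off-diagonal terms
M = np.array([[1.6, -0.4, -0.2], [-0.3, 1.5, -0.2], [-0.1, -0.5, 1.6]])
saturated = np.array([250.0, 30.0, 20.0])   # strongly red pixel, 8-bit scale

exact = M @ saturated                                           # floating point, unclipped
in_camera = np.clip(np.round(exact), 0, 255).astype(np.uint8)   # 8-bit, clipped

# Undo the conversion to see what information survived
recovered_exact = np.linalg.solve(M, exact)
recovered_clipped = np.linalg.solve(M, in_camera.astype(float))
print(exact, in_camera, recovered_exact, recovered_clipped)
```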

Comparative Color Methods

In many cases you want to monitor a process’s colors rather than measure them in a standard color space. If so, calibration is not needed. You simply compare average RGB values from the object to average RGB values from reference colors, and signal when object colors are “out of range” of the reference colors. There is also no need for color space transformations or for reporting results in a standard color space; you always work with RGB values. If product requirements are given in, say, XYZ, you can use a colorimeter to measure the reference colors and then set comparisons and tolerances around the RGB values the vision system measures for those reference colors. The danger is that if the shape and metric of the standard space (say, L*a*b*) differ enough from those of RGB, you cannot accurately set limits or separate close colors in RGB space. Comparative color methods therefore work best when the reference colors are well separated in color space (like magenta and green mouthwashes) and product color tolerances are not tight.
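A minimal comparative check might look like the sketch below: average the RGB values over a region of interest, find the nearest reference color, and flag the part when even the nearest reference is farther than a tolerance. Euclidean RGB distance is one simple choice (a per-channel box or a statistical distance are alternatives), and the reference values and tolerance are hypothetical; in practice they would be recorded from known-good product.

```python
import math

def mean_rgb(pixels):
    """Average (r, g, b) over the pixels of a region of interest."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def classify(sample_rgb, references, tolerance):
    """Return the name of the closest reference color, or None if the sample is
    farther than `tolerance` (Euclidean RGB distance) from every reference."""
    name, ref = min(references.items(), key=lambda kv: math.dist(sample_rgb, kv[1]))
    return name if math.dist(sample_rgb, ref) <= tolerance else None

# Hypothetical reference averages and tolerance
REFERENCES = {"magenta": (180.0, 40.0, 150.0), "green": (40.0, 160.0, 70.0)}
TOLERANCE = 25.0

roi = [(176, 44, 148), (183, 38, 152), (179, 41, 149), (182, 39, 151)]
print(classify(mean_rgb(roi), REFERENCES, TOLERANCE))  # -> magenta
```

With well-separated references such as these, the tolerance can be generous; tight tolerances on close colors run into the RGB-versus-L*a*b* mismatch described above.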

Here are two final suggestions on using machine vision to monitor product color. First, if you can, include a reference patch in the camera’s field of view. This patch could be a small ceramic tile with a matte white finish. The patch reflects the illumination spectrum without significantly changing it, so the vision system can measure the RGB values from that patch for white balance: adjusting the R, G and B channel gains and offsets so the reference patch appears white (equal R, G and B values). This partially corrects for a poor illumination spectrum and for shifts in the illumination spectrum over time.
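The gain part of white balancing reduces to one ratio per channel. The sketch below assumes offsets have already been removed (for example from a dark reference) and scales red and blue to match the green reading of the white patch; the patch reading is hypothetical.

```python
def white_balance_gains(patch_rgb, target=None):
    """Per-channel gains that make the white reference patch read neutral.

    patch_rgb: average (r, g, b) measured on the white tile, offsets already removed.
    target: neutral level to map to; defaults to the patch's green value.
    """
    r, g, b = patch_rgb
    t = g if target is None else target
    return (t / r, t / g, t / b)

def apply_gains(rgb, gains, max_value=255):
    """Scale each channel and clip to the output range."""
    return tuple(min(max_value, c * k) for c, k in zip(rgb, gains))

patch = (210.0, 200.0, 160.0)   # hypothetical tile reading under warm light
gains = white_balance_gains(patch)
print(gains, apply_gains(patch, gains))
```

Re-measuring the patch periodically and updating the gains is what lets this track slow drifts in the illumination.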

Second, a color camera’s responses shift with temperature, possibly invalidating calibrated or comparative measures. Consider mounting the camera in a temperature-controlled enclosure if the ambient temperature fluctuates by more than about 5 °C.

V&S

Tech Tips

The receptor types are called Long-, Medium- and Short-wavelength (LMS) receptors, based on their peak spectral responses of roughly 570, 545, and 430 nanometers (nm).

An object’s reflectance spectrum is multiplied by the illumination spectrum. For example, the spectrum of “white” LEDs decreases to 10% around 475 nm, making blue-green colors very dark.