BASICS OF MACHINE VISION OPTICS

RESOLUTION

In the real world, diffraction, sometimes called lens blur, reduces the contrast at high spatial frequencies, setting a lower limit on image spot size.
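To put a rough number on that lower limit, the sketch below uses the standard Airy disk approximation for an ideal circular aperture: spot diameter ≈ 2.44 × wavelength × f-number. The wavelength and f-number values are illustrative assumptions, not figures from this article, and a real lens with aberrations will produce a larger spot.

```python
def airy_disk_diameter_um(wavelength_um, f_number):
    """Diameter of the Airy disk (to the first dark ring), in micrometers.

    Uses the textbook approximation d = 2.44 * wavelength * f-number,
    valid for an aberration-free lens with a circular aperture.
    """
    return 2.44 * wavelength_um * f_number


if __name__ == "__main__":
    wavelength_um = 0.55  # assumed: green light, about 550 nm
    for f_number in (2.8, 5.6, 8, 16):  # assumed example aperture settings
        spot = airy_disk_diameter_um(wavelength_um, f_number)
        print(f"f/{f_number}: diffraction-limited spot is about {spot:.2f} um")
```

Comparing the result against the sensor's pixel size gives a quick sense of whether stopping down the lens makes diffraction, rather than pixel count, the limiting factor.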

Figure 3 shows a spark plug imaged on two sensors with different resolutions. Each cell in the grid on the image represents one pixel. The image on the left, from a 0.5-megapixel sensor, does not have enough resolution to distinguish characteristics such as spacing, scratches, or bends in the features of interest. The image on the right, from a 2.5-megapixel sensor, has enough resolution to discern details in those features.
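The comparison can be approximated numerically. The following is a minimal sketch, assuming a 4:3 sensor aspect ratio, a hypothetical 40 mm wide field of view, and the common rule of thumb that a feature should span at least two pixels to be resolved. None of these values come from Figure 3, and the function names are illustrative only.

```python
import math


def sensor_dimensions(megapixels, aspect_w=4, aspect_h=3):
    """Approximate (width, height) in pixels for a given megapixel count.

    The 4:3 aspect ratio is an assumption; real sensors vary.
    """
    total_pixels = megapixels * 1e6
    width = math.sqrt(total_pixels * aspect_w / aspect_h)
    height = total_pixels / width
    return round(width), round(height)


def object_pixel_size_mm(fov_width_mm, width_px):
    """Width of the object area covered by a single pixel, in millimeters."""
    return fov_width_mm / width_px


def min_feature_mm(fov_width_mm, width_px, pixels_per_feature=2):
    """Smallest feature that spans the required number of pixels."""
    return pixels_per_feature * object_pixel_size_mm(fov_width_mm, width_px)


if __name__ == "__main__":
    FOV_WIDTH_MM = 40.0  # hypothetical field of view, not taken from the article
    for megapixels in (0.5, 2.5):
        width_px, height_px = sensor_dimensions(megapixels)
        feature = min_feature_mm(FOV_WIDTH_MM, width_px)
        print(f"{megapixels} MP (~{width_px} x {height_px} px): "
              f"smallest resolvable feature is about {feature:.3f} mm")
```

Under these assumptions, the fivefold increase in pixel count shrinks the smallest resolvable feature by a factor of about the square root of five, since the added pixels are spread across both image dimensions.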

CONTRAST

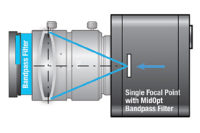

DIFFRACTION

DEPTH OF FIELD

DISTORTION

CONCLUSION

Tech Tips
