Morphology, in its broadest definition, is the study of shapes. It can apply to the shapes of animals or other organisms in biology, artifacts in archaeology, or galaxies in astronomy. This article focuses on mathematical morphology, a set of operations for modifying, classifying, and measuring the shapes of objects as seen in images. Throughout this article, the term morphology means mathematical morphology.

Morphology is used in machine vision for tasks such as separating touching objects in an image, removal of unwanted image structure and noise, and classifying objects by their shapes.

To simplify the mathematics, I’ll describe the fundamental morphological operations as algorithms: the steps your machine vision system takes to perform each operation. Among the references, Dougherty’s books provide a rigorous but understandable introduction, and Serra’s text is a challenging classic.

Kernels and Forming Elements

In linear image filtering, a small matrix called a kernel specifies operations such as “smoothing” or “sharpening” the image. The kernel is moved across the input image. At each input image location, the pixel values “under” the kernel are multiplied by the corresponding kernel values, and the products are summed to give the output pixel value.

Morphological operations are usually non-linear. Instead of a kernel, we use a structuring (or forming) element to change the structure, or form, of the imaged objects. The forming element is a small matrix that specifies which pixels take part in the operation. As with linear filtering, the forming element is moved across the input image. At each input image location, the output is some function of the pixels chosen by the forming element.
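To make the contrast concrete, here is a minimal sketch in plain Python. It assumes images are lists of lists of numbers. The helper names (`sliding_window`, `linear`, `morphological`) are hypothetical, not from any particular library. The same sliding window drives both kinds of filter, and only the combining step differs:

- a linear kernel weights and sums every pixel;
- a forming element only selects pixels, which are then passed to a non-linear function such as `min` or `max`.

```python
def sliding_window(image, size, combine):
    """Apply combine() to the size x size neighborhood of every interior pixel.

    Border pixels, where the window would hang off the image, are copied
    unchanged (an assumption; other border choices are possible).
    """
    rows, cols = len(image), len(image[0])
    k = size // 2
    out = [row[:] for row in image]  # copy so the input image is not modified
    for r in range(k, rows - k):
        for c in range(k, cols - k):
            window = [row[c - k:c + k + 1] for row in image[r - k:r + k + 1]]
            out[r][c] = combine(window)
    return out


def linear(kernel):
    """Linear filtering: multiply each pixel by its kernel value and sum the products."""
    def combine(window):
        return sum(p * w for prow, krow in zip(window, kernel)
                   for p, w in zip(prow, krow))
    return combine


def morphological(forming_element, op):
    """Morphology: the forming element only selects pixels (1 = use, 0 = ignore);
    op, e.g. min or max, is a non-linear function of the selected pixels."""
    def combine(window):
        return op(p for prow, frow in zip(window, forming_element)
                  for p, f in zip(prow, frow) if f)
    return combine


# A 3x3 sharpening kernel and a 3x3 "plus"-shaped forming element.
SHARPEN = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]
PLUS = [[0, 1, 0], [1, 1, 1], [0, 1, 0]]
```

For example, `sliding_window(img, 3, linear(SHARPEN))` sharpens `img`, and `sliding_window(img, 3, morphological(PLUS, max))` applies a maximum over the plus-shaped neighborhood. On a binary (0/1) image, `min` behaves as a logical AND and `max` as a logical OR.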

Binary Morphology

Binary morphology operates on binary images. Let’s assign 1 (white) to objects and 0 (black) to background. Pixels selected by the forming element are input to a logic operation and the resulting binary value is output to another image. The erosion operation takes the logical AND of pixels selected by the forming element, and has the effect of removing object (white) pixels from the edges of the object. The dilation operation takes the logical OR of pixels selected by the forming element and so adds white pixels to the edges of objects. If our objects are black on a white background, then the sense of these operations is reversed.

Another way to look at erosion and dilation is to think of the forming element as a shape that “probes” objects. For erosion, a 1 (white) is output only when the probe fits entirely within the input object; otherwise a 0 (black) is output. For dilation, a 1 is output if any part of the probe overlaps the object.
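The following is a minimal sketch of binary erosion (logical AND) and dilation (logical OR) in plain Python. It assumes objects are 1 (white), background is 0 (black), and pixels outside the image count as background. The dilation assumes a symmetric forming element, so the element does not need to be reflected. All function names are illustrative only.

```python
def _selected(image, r, c, fe):
    """Yield the pixels picked out by forming element fe centered at (r, c).

    Pixels outside the image are treated as background (0).
    """
    rows, cols = len(image), len(image[0])
    k = len(fe) // 2
    for i, fe_row in enumerate(fe):
        for j, use in enumerate(fe_row):
            if use:
                rr, cc = r + i - k, c + j - k
                yield image[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0


def erode(image, fe):
    """Binary erosion: 1 only where the probe fits entirely inside the object (logical AND)."""
    return [[int(all(_selected(image, r, c, fe))) for c in range(len(image[0]))]
            for r in range(len(image))]


def dilate(image, fe):
    """Binary dilation: 1 wherever any part of the probe overlaps the object (logical OR).

    Assumes a symmetric forming element, so the element does not need to be reflected.
    """
    return [[int(any(_selected(image, r, c, fe))) for c in range(len(image[0]))]
            for r in range(len(image))]


# 3x3 square forming element (1 = pixel is used by the operation)
SQUARE3 = [[1, 1, 1],
           [1, 1, 1],
           [1, 1, 1]]

# 7x7 test image: a white (1) 3x3 square on a black (0) background
img = [[1 if 2 <= r <= 4 and 2 <= c <= 4 else 0 for c in range(7)] for r in range(7)]
eroded = erode(img, SQUARE3)    # only the center pixel (3, 3) survives
dilated = dilate(img, SQUARE3)  # grows to a 5x5 square, rows and columns 1..5
```

The 3x3 probe fits inside the 3x3 square at exactly one position, so erosion leaves a single pixel. Dilation adds a one-pixel ring of white around the square.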

Small, symmetric forming elements, such as a 3x3 pixel square, are typically used. To get the effect of a larger forming element, the operation is repeated: the output image is dilated or eroded again into another image. With a 3x3 forming element repeated n times, the effective forming element is a (2n+1)x(2n+1) square. For example, for a 9x9 erosion the image is eroded four times by a 3x3 forming element.
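The sketch below checks the (2n+1) rule on binary images stored as lists of lists. It assumes pixels outside the image are background (0), and the function names are hypothetical. Eroding by a 3x3 square and then by another 3x3 square is the same as eroding once by their combined 5x5 footprint, and so on. So four 3x3 erosions should match one 9x9 erosion.

```python
def erode_square(image, size):
    """Binary erosion by a size x size square (logical AND); off-image pixels count as 0."""
    rows, cols = len(image), len(image[0])
    k = size // 2

    def pixel(r, c):
        return image[r][c] if 0 <= r < rows and 0 <= c < cols else 0

    return [[int(all(pixel(r + i, c + j)
                     for i in range(-k, k + 1) for j in range(-k, k + 1)))
             for c in range(cols)]
            for r in range(rows)]


def erode_repeated(image, n):
    """Erode n times by a 3x3 square: the same result as one (2n+1) x (2n+1) erosion."""
    for _ in range(n):
        image = erode_square(image, 3)
    return image


# A 16x14 white rectangle (rows 2..17, columns 3..16) in a 20x20 image
rect = [[1 if 2 <= r <= 17 and 3 <= c <= 16 else 0 for c in range(20)] for r in range(20)]
same = erode_repeated(rect, 4) == erode_square(rect, 9)  # True
```

The repeated small erosion is usually cheaper than one large one: 4 x 9 pixel tests per output pixel instead of 81.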

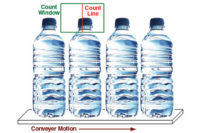

Erosion is often used to separate touching objects so we can count them. For this to work, the length of the touching edge must be smaller than the radius of either object. The connection then erodes away before the objects themselves are eroded into nothing. This works well with nearly round objects, such as round pills.
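Here is a small illustration of separating and counting, as a plain-Python sketch under stated assumptions:

- Objects are 1 and background is 0.
- Pixels outside the image count as background.
- Objects are counted as 8-connected regions using a breadth-first flood fill. This counting method is our choice; the article does not specify one.

Two 5x5 squares joined by a one-pixel “touching edge” count as a single object until one 3x3 erosion removes the connection.

```python
from collections import deque


def erode3(image):
    """Binary erosion by a 3x3 square; off-image pixels count as background (0)."""
    rows, cols = len(image), len(image[0])

    def pixel(r, c):
        return image[r][c] if 0 <= r < rows and 0 <= c < cols else 0

    return [[int(all(pixel(r + i, c + j) for i in (-1, 0, 1) for j in (-1, 0, 1)))
             for c in range(cols)]
            for r in range(rows)]


def count_objects(image):
    """Count 8-connected white (1) regions with a breadth-first flood fill."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] and not seen[r][c]:
                count += 1  # found a new, unvisited object
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if (0 <= ny < rows and 0 <= nx < cols
                                    and image[ny][nx] and not seen[ny][nx]):
                                seen[ny][nx] = True
                                queue.append((ny, nx))
    return count


# Two 5x5 squares (columns 1..5 and 7..11) joined by a one-pixel neck at (4, 6)
img = [[0] * 13 for _ in range(9)]
for r in range(2, 7):
    for c in list(range(1, 6)) + list(range(7, 12)):
        img[r][c] = 1
img[4][6] = 1
print(count_objects(img), count_objects(erode3(img)))  # 1 2
```

After erosion, each square has shrunk to 3x3 and the neck is gone. The objects still exist but no longer touch, so they count separately.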

Erosion followed by dilation is the opening operation. Opening can remove small white spots in the image background, perhaps due to noise. It also removes spikes on objects and breaks long, thin connections between objects. Dilation followed by erosion is the closing operation. Closing can be used to remove small black spots within a white object. It also removes small indents on white objects and connects closely spaced objects. Another way to view opening and closing is that they smooth convexities and concavities, respectively, in the object’s perimeter. I use the mnemonics EDO (Erosion, Dilation: Opening) and DEC (Dilation, Erosion: Closing) to remember the order of erosion and dilation in each operation.
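A minimal plain-Python sketch of opening and closing with a 3x3 square follows. As before, it assumes white objects are 1 and pixels outside the image count as background (0); the function names are illustrative. The example shows opening removing an isolated white noise pixel and closing filling a one-pixel black hole.

```python
def _morph3(image, op):
    """Apply op over a 3x3 square: all() gives erosion (AND), any() gives dilation (OR).

    Pixels outside the image are treated as background (0).
    """
    rows, cols = len(image), len(image[0])

    def pixel(r, c):
        return image[r][c] if 0 <= r < rows and 0 <= c < cols else 0

    return [[int(op(pixel(r + i, c + j) for i in (-1, 0, 1) for j in (-1, 0, 1)))
             for c in range(cols)]
            for r in range(rows)]


def erode(image):
    return _morph3(image, all)


def dilate(image):
    return _morph3(image, any)


def opening(image):
    """EDO: Erosion, then Dilation = Opening."""
    return dilate(erode(image))


def closing(image):
    """DEC: Dilation, then Erosion = Closing."""
    return erode(dilate(image))


# A clean 5x5 white square in an 11x11 image
square = [[1 if 2 <= r <= 6 and 2 <= c <= 6 else 0 for c in range(11)] for r in range(11)]

noisy = [row[:] for row in square]
noisy[8][8] = 1   # isolated white noise pixel in the background

holey = [row[:] for row in square]
holey[4][4] = 0   # small black spot inside the object
```

Here `opening(noisy)` and `closing(holey)` both return the clean square. Erosion deletes the noise pixel, which the following dilation cannot restore. Dilation fills the hole, which the following erosion does not reopen.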

Opening and closing are idempotent operations: applying the same operation again does not further change the objects’ shapes. Erosion and dilation are idempotent only in the extreme cases where objects have been entirely eroded into a black image or dilated into a full white image. An opening followed by a closing can be used as a non-linear smoothing and noise-reduction filter.
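Idempotence is easy to check numerically. This plain-Python sketch repeats the 3x3 helpers so it stands alone. It assumes 0/1 images with off-image pixels treated as background, and the names are hypothetical. It compares one application of an operation with two applications.

```python
def _morph3(image, op):
    """3x3 square: all() gives erosion (AND), any() gives dilation (OR); off-image = 0."""
    rows, cols = len(image), len(image[0])

    def pixel(r, c):
        return image[r][c] if 0 <= r < rows and 0 <= c < cols else 0

    return [[int(op(pixel(r + i, c + j) for i in (-1, 0, 1) for j in (-1, 0, 1)))
             for c in range(cols)]
            for r in range(rows)]


def erode(image):
    return _morph3(image, all)


def dilate(image):
    return _morph3(image, any)


def opening(image):
    return dilate(erode(image))


def closing(image):
    return erode(dilate(image))


def is_idempotent(operation, image):
    """True if applying the operation a second time leaves the result unchanged."""
    once = operation(image)
    return operation(once) == once


# 5x5 white square in a 9x9 image
square = [[1 if 2 <= r <= 6 and 2 <= c <= 6 else 0 for c in range(9)] for r in range(9)]
```

With this image, `is_idempotent(opening, square)` is `True`. `is_idempotent(erode, square)` is `False`, because a second erosion shrinks the 3x3 result to a single pixel.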

Gray-Scale and Flat-Top Morphology

Gray-scale morphology operates on gray-scale images and uses maximum and minimum operations rather than logic operations. For dilation, each value in the forming element is added to the pixel “under” it, and the maximum of these sums is output. For erosion, the forming element values are subtracted from the pixel values, and the minimum difference is output. Gray-scale morphology can be thought of as using a three-dimensional forming element to probe the shape of the surface formed by the image intensities.
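The add-then-max and subtract-then-min rules translate directly into code. This is a minimal sketch, not a production implementation. It assumes:

- a symmetric forming element, so no reflection is needed;
- only pixels inside the image are considered at the borders;
- a hypothetical dome-shaped element, `DOME`.

```python
def _gray(image, se, combine, sign):
    """At each pixel, combine (max or min) the values pixel + sign * se over the element."""
    rows, cols = len(image), len(image[0])
    k = len(se) // 2
    return [[combine(image[r + i - k][c + j - k] + sign * se[i][j]
                     for i in range(len(se)) for j in range(len(se))
                     if 0 <= r + i - k < rows and 0 <= c + j - k < cols)
             for c in range(cols)]
            for r in range(rows)]


def gray_dilate(image, se):
    """Add each forming-element value to the pixel under it; output the maximum sum."""
    return _gray(image, se, max, +1)


def gray_erode(image, se):
    """Subtract each forming-element value from the pixel under it; output the minimum difference."""
    return _gray(image, se, min, -1)


# A small dome-shaped (non-flat), symmetric forming element
DOME = [[1, 2, 1],
        [2, 3, 2],
        [1, 2, 1]]

flat_field = [[10] * 4 for _ in range(4)]
raised = gray_dilate(flat_field, DOME)   # every pixel becomes 10 + 3 = 13
lowered = gray_erode(flat_field, DOME)   # every pixel becomes 10 - 3 = 7
```

Even a perfectly uniform image is shifted up by dilation and down by erosion, by the height of the dome’s peak. This shows that a non-flat forming element changes intensities as well as shapes.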

In flat-top morphology, the forming element values are effectively ignored (treated as zero). The output is simply the maximum (for dilation) or minimum (for erosion) of the pixel values “under” the forming element. Flat-top morphology is commonly used in machine vision.
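With all forming-element values at zero, gray-scale dilation and erosion reduce to a maximum filter and a minimum filter over the window. Here is a plain-Python sketch, assuming a square forming element and windows clipped to the image at the borders; the names are illustrative.

```python
def _window_extreme(image, size, combine):
    """Apply combine (max or min) to the in-image pixels of a size x size window at each pixel."""
    rows, cols = len(image), len(image[0])
    k = size // 2
    return [[combine(image[y][x]
                     for y in range(max(0, r - k), min(rows, r + k + 1))
                     for x in range(max(0, c - k), min(cols, c + k + 1)))
             for c in range(cols)]
            for r in range(rows)]


def flat_dilate(image, size=3):
    """Flat-top dilation: maximum pixel value under the forming element (a max filter)."""
    return _window_extreme(image, size, max)


def flat_erode(image, size=3):
    """Flat-top erosion: minimum pixel value under the forming element (a min filter)."""
    return _window_extreme(image, size, min)


# A bright field (100) with one dark pixel (0) in the middle
img = [[100] * 5 for _ in range(5)]
img[2][2] = 0
```

On this image, `flat_dilate(img)` removes the dark pixel, giving all 100s. `flat_erode(img)` spreads the dark pixel into a 3x3 patch of zeros. These are the gray-scale counterparts of the binary dilation and erosion of black objects on a white background.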

As with binary morphology, gray-scale erosion and dilation are combined for opening and closing. A threshold can be applied after gray-scale morphology to separate objects from background, for example to locate and count objects.

A Challenging Application

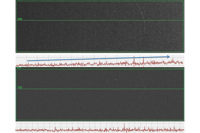

We’ve discussed separating touching objects using erosion or dilation, and using opening and closing to reduce image noise or texture. Let’s try a difficult problem: finding some small, diffuse, low-contrast, dark defects within an image with extremes of lighting. These defects look like dark blobs above the bright area in the “Original” image. Setting a threshold to detect them reliably is impossible. To find even a few of these defects, we need to use the defect shape in addition to intensity. We need morphology!

We will make a filter for small, dark blobs. First, apply a 31x31 closing. The closing first dilates the image, which removes the dark blobs by filling them in with the values of brighter neighboring areas. Erosion then trims back the enlarged bright areas. The result is approximately the original image without the dark blobs.

Next, subtract the original image from the closed image. Because the closed and original images differ only where the dark blobs are, we get an image with bright spots only where the dark blobs were. We have built a filter that detects dark objects smaller than our 31x31 closing size. This type of filter is known as a bottom-hat filter, perhaps because its outputs look like stove-pipe hats (like Abraham Lincoln’s) on a flat background.

The dual of this filter is the top-hat filter, which passes only bright objects smaller than the filter size. The top-hat filter subtracts the opening of the original image from the original image. Both can be thought of as “high-pass” filters, with a “cutoff” set by the forming element size. Both are useful for detecting small objects on a background with intensity gradients.
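Bottom-hat and top-hat filters can be sketched with flat (max/min) morphology in plain Python. This is a sketch under stated assumptions, not the Sherlock implementation:

- windows are clipped at the image border;
- a 7x7 window stands in for the article’s 31x31, to keep the test image small;
- the test image is hypothetical: a dark 3x3 blob on a horizontal intensity ramp.

```python
def _extreme(image, size, combine):
    """Max (dilation) or min (erosion) over a size x size window clipped to the image."""
    rows, cols = len(image), len(image[0])
    k = size // 2
    return [[combine(image[y][x]
                     for y in range(max(0, r - k), min(rows, r + k + 1))
                     for x in range(max(0, c - k), min(cols, c + k + 1)))
             for c in range(cols)]
            for r in range(rows)]


def flat_close(image, size):
    """Flat closing: max filter (dilation), then min filter (erosion)."""
    return _extreme(_extreme(image, size, max), size, min)


def flat_open(image, size):
    """Flat opening: min filter (erosion), then max filter (dilation)."""
    return _extreme(_extreme(image, size, min), size, max)


def bottom_hat(image, size):
    """Closing minus original: bright where dark objects smaller than the window were."""
    closed = flat_close(image, size)
    return [[cv - v for cv, v in zip(crow, row)] for crow, row in zip(closed, image)]


def top_hat(image, size):
    """Original minus opening: bright where bright objects smaller than the window were."""
    opened = flat_open(image, size)
    return [[v - ov for v, ov in zip(row, orow)] for row, orow in zip(image, opened)]


# Hypothetical test image: a left-to-right ramp with a dark 3x3 blob, 40 levels deep
ramp = [[100 + 2 * c for c in range(21)] for r in range(21)]
for r in range(9, 12):
    for c in range(9, 12):
        ramp[r][c] -= 40

bh = bottom_hat(ramp, 7)
```

In `bh`, the blob comes out at 40 and the sloping background cancels to 0, except for a few columns at the left edge. That is one of the edge effects discussed under Some Cautions.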

Unfortunately, after the bottom-hat filter, the image contains fuzzy blobs and scattered bright pixels. We next apply a variance-based filter (Roughness in Teledyne DALSA’s Sherlock machine vision software), which outputs the intensity variance over a small area around each pixel. Fuzzy blobs have high variance and so give a strong output signal. Scattered bright pixels have low variance and so give a weak output.
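A local-variance filter can be sketched in a few lines of plain Python. This is only a generic stand-in. We don’t know how Sherlock’s Roughness tool is actually computed, and the function name, window size, and border clipping are assumptions. It outputs the population variance of the pixel values in a small window around each pixel.

```python
def local_variance(image, size=3):
    """Population variance of the pixel values in the size x size window around each pixel.

    Windows are clipped at the image border (an assumption).
    """
    rows, cols = len(image), len(image[0])
    k = size // 2
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            vals = [image[y][x]
                    for y in range(max(0, r - k), min(rows, r + k + 1))
                    for x in range(max(0, c - k), min(cols, c + k + 1))]
            mean = sum(vals) / len(vals)
            row.append(sum((v - mean) ** 2 for v in vals) / len(vals))
        out.append(row)
    return out


# One bright pixel (9) in a 3x3 dark patch: at the center the
# window holds eight 0s and one 9, so the mean is 1.0 and the variance is 8.0
patch = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
v = local_variance(patch)
```

A uniform area gives zero variance, and intensity changes within the window raise it. The window size controls which structures respond most strongly.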

Last, we threshold the variance image to give the image labeled “Bottom Hat”. This algorithm tolerates changes in lighting intensity and distribution. One measure of robustness is the range of threshold values that work. In this case it is about 12 threshold steps, which is not outstanding but good enough. Only a few of the dark blobs are detected, but this meets the customer’s requirements.
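One way to measure that robustness is to sweep the threshold and record which values still give an acceptable result. This plain-Python sketch uses a hypothetical criterion: “works” means the thresholded image exactly matches a hand-labeled expected mask. In practice the acceptance test could instead be “the required defects are found and nothing else”. The function names are illustrative.

```python
def threshold(image, t):
    """Binary image: 1 where the pixel value is at least t, else 0."""
    return [[int(v >= t) for v in row] for row in image]


def working_thresholds(image, expected, candidates):
    """Return the candidate thresholds whose binary result matches the expected mask.

    The width of this range is one rough measure of how robust the threshold is.
    """
    return [t for t in candidates if threshold(image, t) == expected]


# Hypothetical filter output and the mask we want from it
filtered = [[10, 50, 200],
            [5, 180, 30]]
wanted = [[0, 0, 1],
          [0, 1, 0]]
ok = working_thresholds(filtered, wanted, range(256))
```

Here every threshold from 51 through 180 works, a range of 130 steps. A wider range means the system is less likely to fail when lighting or parts vary slightly.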

Some Cautions

Because morphology changes the shape of imaged objects, it is often not appropriate when your goal is precision metrology, for example edge-to-edge caliper measurements. You might get some improvement from using opening or closing for noise reduction before measurement, but be careful.

The forming element can’t fully probe pixels near the image border. For example, with a 3x3 forming element, a border one pixel wide can’t be probed; with an NxN element, the border is (N-1)/2 pixels wide. Either the existing border values appear in the output image, or the border is set to a constant value. So make sure your objects are far enough from the image border that these “edge effects” won’t be a problem.
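The two border choices can be sketched in plain Python for a 3x3 flat erosion (min filter) that only probes interior pixels. The function name and the `border`/`fill` parameters are hypothetical, chosen to mirror the two options described above.

```python
def erode3_interior(image, border="copy", fill=0):
    """3x3 flat (min-filter) erosion that only probes interior pixels.

    border="copy":     the one-pixel border keeps its input values.
    border="constant": the border is set to fill.
    """
    rows, cols = len(image), len(image[0])
    if border == "copy":
        out = [row[:] for row in image]
    elif border == "constant":
        out = [[fill] * cols for _ in range(rows)]
    else:
        raise ValueError(f"unknown border mode: {border!r}")
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            out[r][c] = min(image[r + i][c + j] for i in (-1, 0, 1) for j in (-1, 0, 1))
    return out


# A bright 4x4 image with one dark pixel in the corner
img = [[100] * 4 for _ in range(4)]
img[0][0] = 0
copied = erode3_interior(img, border="copy")
constant = erode3_interior(img, border="constant", fill=255)
```

In both results, the dark corner pixel still darkens its interior neighbor at (1, 1). The border itself, however, either keeps its original values or becomes a constant that has nothing to do with the image. An object touching the border is therefore not measured reliably.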

We’ve discussed a few fundamental morphology operations to guide your practical use of morphology. Many other operations, such as watershed, geodesic, and hit-or-miss transforms, are used to solve image analysis and machine vision problems.

References:

- http://en.wikipedia.org/wiki/Mathematical_morphology

- An Introduction to Morphological Image Processing by Edward R. Dougherty. Volume TT9, SPIE Press, Bellingham, Washington, USA (1992). ISBN 0-8194-0845-X

- Hands-on Morphological Image Processing by Edward R. Dougherty and Roberto A. Lotufo. Volume TT59, SPIE Press, Bellingham, Washington, USA (2003). ISBN 0-8194-4720-X

- Image Analysis and Mathematical Morphology by J. Serra. Academic Press, London (1982).

- To experiment with morphology, get a trial copy of Teledyne DALSA’s Sherlock machine vision software at: http://www.teledynedalsa.com/imaging/products/software/sherlock/evaluation/

- The images in this article were made with Sherlock and are courtesy of Teledyne DALSA.