Technology development moves at a dizzying pace. Check the newswire, and you’ll find a list of new products that leapfrog what was heralded as the “latest and greatest” just months ago.

The machine vision industry is no exception. In imaging specifically, steady innovation aims to improve efficiency, lower costs, and add intelligence. Over the past 12 months, for example, new USB3 Vision products have driven the design of higher-bandwidth, plug-and-play solutions for imaging applications.

What’s next for machine vision? Here are five trends that we expect will influence imaging system design.

Moving Towards “Off-the-Shelf”

There has been an increasing move towards repurposing technologies perfected for the larger IT and consumer markets to increase efficiency and lower cost in vision applications. GigE Vision borrowed from IT connectivity standards to supplant proprietary connectivity and legacy solutions like analog, Camera Link Base, and LVDS. More recently, USB3 Vision has leveraged transport and connector capabilities, developed primarily for consumer electronics and computer connectivity, to enable vision systems that are easier to use, maintain, and upgrade.

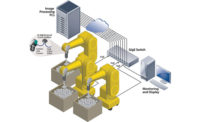

In a bin picking robot (Diagram 1), an external frame grabber converts an existing Camera Link camera into a USB3 Vision camera. The uncompressed video—along with power and control—is transmitted with low, consistent latency over the USB 3.0 cable directly to an existing port on a computer used for analysis and display. The thinner, lighter USB 3.0 cable is more flexible to enable a wider range of motion for robotic applications, and easier routing allows faster setup and teardown of work cells for short production runs.

For designers and manufacturers, employing off-the-shelf technologies instead of DIY or industry-specific solutions is a key way to decrease costs and improve usability.

Away from Desktops

Across all markets there is a well-documented move away from desktop PCs in favor of laptops and tablets. Computing platform choice has been one of the driving factors behind the continuing growth of GigE Vision, and the fast emergence of USB3 Vision solutions.

With both standards, imaging data is streamed directly to existing Ethernet or USB 3.0 ports on any computing platform. In comparison, legacy interface standards require a PCIe frame grabber to capture imaging data at the endpoint, limiting systems primarily to desktop PCs. In general, computer manufacturers appear to be migrating towards USB 3.0 as the interface of choice, with FireWire disappearing and Thunderbolt adoption moderate at best.

Beyond PCs and laptops, there’s also a shift towards embedded systems in applications where imaging tasks or processes are automated and repeated. The high processing capability and small form factor of embedded processors make them very attractive for image and video processing in industrial robotics applications. With embedded processing, vision system intelligence can be more easily located at different points in the network. In addition, power efficiencies help lower operating costs and reduce heat output, which helps prevent the premature failure of other electronic components.

Faster Transmission

The need for speed is often the driving factor behind new technologies. In machine vision, advances in USB3, 10 GigE, and wireless will afford designers more bandwidth and system design flexibility.

Although USB3 Vision is only now entering mainstream design, enhancements are already around the corner with the USB 3.1 standard. With more than double the data rate of USB 3.0, USB 3.1 provides the bandwidth required to support all variants of Camera Link. Because the standard can also deliver up to 100 watts of power over the cable, designers can further reduce vision system costs by eliminating external power supplies for cameras. The existing USB3 Vision standard will support these performance improvements with little or no modification.
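
As a rough illustration of the bandwidth claim, the sketch below compares nominal Camera Link pixel-data rates with nominal USB payload rates. The figures are assumptions based on commonly quoted numbers, not values from this article: an 85 MHz pixel clock for Camera Link, and USB signaling rates of 5 Gb/s and 10 Gb/s reduced only by line-encoding overhead. Protocol overhead is ignored, and the name `configs_that_fit` is purely illustrative.

```python
# Nominal peak Camera Link pixel-data rates in Gb/s
# (assumed: data bits per clock x 85 MHz pixel clock).
CAMERA_LINK_GBPS = {
    "Base": 24 * 85e6 / 1e9,    # 2.04 Gb/s
    "Medium": 48 * 85e6 / 1e9,  # 4.08 Gb/s
    "Full": 64 * 85e6 / 1e9,    # 5.44 Gb/s
    "80-bit": 80 * 85e6 / 1e9,  # 6.80 Gb/s
}

# Nominal USB payload rates in Gb/s: signaling rate times
# line-encoding efficiency (assumed: 8b/10b and 128b/132b).
USB_PAYLOAD_GBPS = {
    "USB 3.0": 5.0 * 8 / 10,
    "USB 3.1": 10.0 * 128 / 132,
}


def configs_that_fit(link_gbps):
    """Return the Camera Link configurations whose nominal rate fits the link."""
    return [name for name, rate in CAMERA_LINK_GBPS.items() if rate <= link_gbps]


for link, gbps in USB_PAYLOAD_GBPS.items():
    print(f"{link} (~{gbps:.2f} Gb/s): {configs_that_fit(gbps)}")
```

Under these assumptions only the Base configuration fits within USB 3.0 uncompressed, while every configuration fits within USB 3.1. Real throughput depends on the host controller and protocol overhead, so treat the result as an estimate.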

Adoption of 10 GigE for vision applications is also ramping up, driven in part by a continuing decrease in the cost of 10 GigE infrastructure, including switches, NICs, cabling, and SFP+ modules. A key market for 10 GigE will be high-speed web inspection, where designers can take advantage of Ethernet’s inherent multicasting capabilities, in combination with lower-cost off-the-shelf equipment, to build fully networked vision systems that enable distributed processing.

As illustrated in Diagram 2, an external frame grabber converts a Camera Link Medium or Full camera into a 10 GigE camera and transmits image data to multiple computing platforms using an off-the-shelf GigE switch. This allows designers to optimize individual PCs for different types of defects, rather than mating a PC to each individual camera. Ethernet’s long cable reach allows system designers to move the processing computers off the gantry and away from the harsh environment of the inspection floor.

Imaging system designers are also beginning to realize the opportunity for wireless video transmission. Video interface solutions are now available that support 150 Mb/s over an IEEE 802.11n wireless link, with higher bandwidth IEEE 802.11ac products on the horizon. Next-generation wireless video solutions could support three GigE Vision cameras running at VGA resolutions, at 30 frames per second (fps), in monochrome or Bayer color. There is also the potential to build on the Wireless Home Digital Interface (WHDI), intended primarily for wireless HDTV connectivity in the home, for short-range vision applications.
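
The camera count above can be checked with simple arithmetic. The following sketch assumes 8 bits per pixel for monochrome or raw Bayer data and ignores protocol and radio overhead, so the numbers are best-case estimates. The function names are illustrative only.

```python
def stream_mbps(width, height, bits_per_pixel, fps):
    """Uncompressed video data rate in Mb/s (no protocol overhead)."""
    return width * height * bits_per_pixel * fps / 1e6


def max_cameras(link_mbps, per_camera_mbps):
    """Whole number of camera streams that fit in the link's nominal rate."""
    return int(link_mbps // per_camera_mbps)


# Assumed: VGA is 640 x 480, 8 bits per pixel (mono or raw Bayer), 30 fps.
vga_mbps = stream_mbps(640, 480, 8, 30)

print(f"One VGA camera:    {vga_mbps:.1f} Mb/s")
print(f"Three VGA cameras: {3 * vga_mbps:.1f} Mb/s")
print(f"Cameras on a 150 Mb/s link: {max_cameras(150, vga_mbps)}")
```

With these assumptions a single stream needs about 74 Mb/s, so three cameras need roughly 221 Mb/s. That exceeds the 150 Mb/s link cited above, which is why three cameras are a next-generation target.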

Towards Parallel Computing

Increasingly, FPGAs and CPUs are being replaced by GPUs to perform some imaging tasks. GPUs are well suited for imaging tasks that can be broken into sub-tasks and performed in parallel, and many image analysis software packages—both commercial and open source—leverage GPUs to speed processing.

GPUs are available on most laptop and desktop motherboards, either as a standalone chip or built into the processor chipset. Historically, GPUs have been difficult to program, but as more modern toolchains have evolved, GPU programming has become more akin to programming a CPU using standard C++. For most vision applications, the time-to-market benefits of a GPU-based system easily outweigh minor tradeoffs in latency, jitter, and power.
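
To make the idea of splitting an imaging task into parallel sub-tasks concrete, here is a minimal sketch that thresholds an image in independent row bands. It is not GPU code: CPU threads from Python's standard library stand in for GPU work items, and the example only shows the decomposition, not a speedup. The function names and the band count are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def threshold_rows(rows, level):
    """Binarize a list of pixel rows: 255 where pixel >= level, else 0."""
    return [[255 if px >= level else 0 for px in row] for row in rows]


def threshold_parallel(image, level, bands=4):
    """Split the image into row bands and threshold each band independently.

    Each output pixel depends only on its own input pixel, so the bands
    can be processed in any order, or all at once.
    """
    band_height = max(1, -(-len(image) // bands))  # ceiling division
    chunks = [image[i:i + band_height] for i in range(0, len(image), band_height)]
    with ThreadPoolExecutor(max_workers=bands) as pool:
        results = list(pool.map(lambda chunk: threshold_rows(chunk, level), chunks))
    # Reassemble the bands in their original order.
    return [row for chunk in results for row in chunk]


# Small synthetic test image (10 rows x 8 columns).
image = [[(x * y * 7) % 256 for x in range(8)] for y in range(10)]
assert threshold_parallel(image, 128) == threshold_rows(image, 128)
```

Operations with this per-pixel independence map naturally onto a GPU, where each pixel or tile can be assigned to its own thread.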

Compression

Compression represents a new opportunity for manufacturers to design more economical, easier-to-use multi-screen imaging systems. In many vision applications, the uncompressed video used for processing and analysis can also be delivered to live operators for monitoring, security, or scheduling. Sending uncompressed video from dozens or hundreds of cameras to a centralized operations center is not practical. Instead, the video can be compressed and sent across a wired or wireless network.

There are two types of compression that designers should consider for imaging applications. Lossy compression, also called “unrecoverable,” loses some of the information and clarity of the original image. H.264, popular for Blu-ray discs and Internet video streaming, is an example of lossy compression. It does an excellent job of reducing data size, with compression ratios of 50:1 or higher, but incurs high latency. The loss of image quality is typically imperceptible to the human eye, and the few seconds of delay have no impact when remotely viewing or recording the image stream.

This type of compression is ideal for vision applications with multiple end-users. For example, on a manufacturing line uncompressed video from a GigE Vision camera can be transmitted to processing and analysis platforms. The same video can be multicast to a transcoder gateway, which compresses it using H.264 and transmits the video to an operations center. There, operators can remotely monitor the facility and key events can be recorded for later analysis.

Recoverable or mathematically lossless compression techniques, like JPEG-LS or certain modes of JPEG 2000, have much lower compression ratios, but all the original information is preserved. In addition, latency is much lower with this “light compression.”
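
The defining property of recoverable compression is that decompression returns the original data bit for bit. The sketch below demonstrates that property using Python's built-in `zlib` (DEFLATE) purely as a stand-in. It is not JPEG-LS or JPEG 2000, and its compression ratio on a synthetic frame says nothing about what those codecs achieve on real images. The helper name and the test frame are assumptions for illustration.

```python
import zlib


def lossless_round_trip(raw):
    """Compress and decompress raw bytes.

    Returns (identical, ratio), where identical is True when the restored
    data matches the input exactly and ratio is original size / compressed size.
    """
    packed = zlib.compress(raw, 6)
    restored = zlib.decompress(packed)
    return restored == raw, len(raw) / len(packed)


# Synthetic 64 x 64, 8-bit frame with a smooth diagonal gradient.
frame = bytes((x + y) % 256 for y in range(64) for x in range(64))

identical, ratio = lossless_round_trip(frame)
print(f"bit-exact: {identical}, compression ratio: {ratio:.1f}:1")
```

A lossy codec such as H.264 would fail the equality check by design, which is the practical difference between the two classes.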

This makes recoverable compression suitable for systems where low latency is required but the data rate is higher than the existing cabling can support. For example, 1080p color video can be streamed over a GigE cable, or Camera Link Full video can be transmitted over a USB 3.0 cable. As a result, designers can increase system functionality by delivering higher-resolution images over less expensive, easier-to-use cabling.
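
A quick estimate shows how little compression such cases need. The sketch below computes the minimum compression ratio needed to fit a stream into a link. It assumes 24-bit color at 30 fps for the 1080p case and treats the full 1000 Mb/s of a GigE link as usable, so real systems would need a somewhat higher ratio. The function name is illustrative.

```python
def required_ratio(width, height, bits_per_pixel, fps, link_mbps):
    """Minimum compression ratio needed to fit an uncompressed stream in a link."""
    stream_mbps = width * height * bits_per_pixel * fps / 1e6
    return stream_mbps / link_mbps


# Assumed: 1080p = 1920 x 1080, 24 bits per pixel, 30 fps, 1000 Mb/s link.
ratio = required_ratio(1920, 1080, 24, 30, 1000)
print(f"1080p color over GigE needs at least {ratio:.2f}:1 compression")
```

Under these assumptions the stream is about 1.49 Gb/s, so a ratio of roughly 1.5:1 is enough. That is in line with the modest ratios described above for mathematically lossless techniques.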

What’s Next?

Admittedly, it’s difficult to predict technology trends. One guarantee is that the machine vision industry will continue to evolve, and the winning technologies will be the ones that deliver cost, performance and reliability benefits.