Counting objects or parts is, one would think, no longer a big challenge for image processing. In such applications, special attention is paid to suitable illumination, so that the contours of each object are clearly visible and a dividing line appears between the objects. Nevertheless, it sometimes takes particular skill or, as in the present case, a combination of visual filters to flawlessly count objects that are similar in shape and color and that also touch each other.

The setup is as follows: small screws travel along the x-axis of a belt conveyor. The screws are huddled close together and touch each other, and their position along the y-axis can vary. They are to be counted while they are on the belt conveyor. The same approach works for any type of object that has to be separated before it can be counted.

The first challenge is to find the right illumination, that is, to obtain a high-contrast image. In addition, the shadows cast by the objects should be eliminated as far as possible, because too much residual shadow blurs the separation of the objects from the background and from each other. The best possible contour is needed for segmentation and, ultimately, for counting the objects.

To achieve that, further considerations have to be taken into account. With a suitable choice of lens and illumination, a visible separation between the screws on the belt conveyor can be produced. Two options were considered.

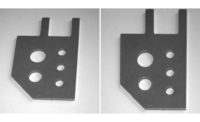

In the first option, the screws (as in the image) are brightly lit, while the belt conveyor remains black. This has the advantage that the contours of the screws are highlighted better. The disadvantage is that the creases of the thread surface are highlighted as well, which makes the screws difficult to separate.

In the second option, the screws are illuminated in such a way that black objects lie on a white belt conveyor. In contrast to the first option, the creased thread surface now appears uniform, although the contours of the objects are less visible. In addition, shadows can appear.

Despite the weaker contours, the second option is the better one, because the uniform thread surface makes the screws easier to separate.

To separate screws, or other objects used in engine construction, tool manufacturing, automotive engineering, and so on that are identical in shape and color, various morphological operations were considered.

The two basic morphological operations used in machine vision are “dilation” and “erosion.” All other morphological operations can be built from these two. Both operations use a structuring element to probe the shapes contained in the input image: dilation expands them and erosion shrinks them.

The “dilation” and “erosion” operations are typically applied to binary images, but in this case we apply them to our grayscale images.

Dilation adds pixels to the boundaries of objects in an image, while erosion removes pixels from object boundaries. The number of pixels added or removed depends on the size and shape of the structuring element used to process the image. It is this structuring element that determines the precise effect of the dilation or erosion on the input image. The smallest structuring element in common use is 3 x 3 pixels.

In the morphological dilation and erosion operations, the state of any given pixel in the output image is determined by applying a rule to the corresponding pixel and its neighbors in the input image.

With the dilation operation the value of the output pixel is the maximum value of all the pixels in the input pixel’s neighborhood. With the erosion operation the value of the output pixel is the minimum value of all the pixels in the input pixel’s neighborhood.
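
The maximum and minimum rules can be written down in a few lines. The following is only a minimal sketch in plain Python, assuming a flat, square structuring element and an image stored as a list of rows; the helper names `morph`, `dilate`, and `erode` are our own and do not belong to any particular machine vision package.

```python
def morph(image, op, size=3):
    """Apply op (max or min) over a flat size x size neighborhood."""
    h, w = len(image), len(image[0])
    r = size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the neighborhood, clipped at the image border.
            window = [image[j][i]
                      for j in range(max(0, y - r), min(h, y + r + 1))
                      for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = op(window)
    return out


def dilate(image, size=3):
    """Grayscale dilation: each output pixel is the neighborhood maximum."""
    return morph(image, max, size)


def erode(image, size=3):
    """Grayscale erosion: each output pixel is the neighborhood minimum."""
    return morph(image, min, size)


# Toy image (assumed values): one bright pixel on a dark background.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
dilated = dilate(image)  # the bright pixel grows into a 3 x 3 block
eroded = erode(image)    # the bright pixel disappears
```

With a 3 x 3 element, dilation spreads the single bright pixel over its eight neighbors, while erosion removes it entirely.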

For segmentation of the screws, one can use “watershed” algorithms, which are morphological operations based on the above-mentioned “dilation” and “erosion” operations. With the help of markers the image is “flooded,” and where the “water” from two sources meets, a “watershed” is created. This watershed becomes the dividing line between the objects.

Here is the idea behind the watershed transformation: a grayscale image is interpreted as a topographical relief, and each point of this digital image is assigned to a regional minimum. To achieve this, flooding is simulated: water rises from the regional minima and fills the surrounding catchment basins. Each point is assigned to a minimum by labeling it with the number of the corresponding catchment basin. As the water level rises, the zones of influence of the minima are calculated. Where two basins meet, the points of contact are marked as dams. These dams are the watershed lines, which are possible object borders.

But one should be careful. The watershed transformation often results in over-segmentation. This risk can be reduced by preprocessing. To reduce over-segmentation afterwards, regions can be merged according to specific criteria.

Because the objects in our case have homogeneous gray values, the watershed algorithm is applied to a gradient image, which is treated as a surface where light pixels are high and dark pixels are low.
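
To make the flooding idea concrete, here is a minimal sketch of a marker-based priority flood in plain Python. It is one simple way to simulate the rising water, not the algorithm of any particular software package; the function name `watershed`, the `DAM` value, the 4-neighborhood, and the toy relief are all assumptions chosen for illustration. The flood starts from two hand-placed markers sitting in the two minima.

```python
import heapq

DAM = -1  # assumed label for watershed (dam) pixels


def watershed(relief, markers):
    """Flood relief from positive marker labels; 0 means unlabeled."""
    h, w = len(relief), len(relief[0])
    labels = [row[:] for row in markers]
    queued = [[False] * w for _ in range(h)]
    heap, counter = [], 0

    def neighbors(y, x):
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < h and 0 <= nx < w:
                yield ny, nx

    def push_unlabeled_neighbors(y, x, level):
        nonlocal counter
        for ny, nx in neighbors(y, x):
            if labels[ny][nx] == 0 and not queued[ny][nx]:
                queued[ny][nx] = True
                # Water can never sink below the level it has already reached.
                priority = max(level, relief[ny][nx])
                heapq.heappush(heap, (priority, counter, ny, nx))
                counter += 1

    for y in range(h):
        for x in range(w):
            if markers[y][x] > 0:
                push_unlabeled_neighbors(y, x, relief[y][x])

    while heap:
        level, _, y, x = heapq.heappop(heap)  # lowest water level first
        touching = {labels[ny][nx] for ny, nx in neighbors(y, x)
                    if labels[ny][nx] > 0}
        if len(touching) == 1:
            labels[y][x] = touching.pop()     # joins a single basin
            push_unlabeled_neighbors(y, x, level)
        else:
            labels[y][x] = DAM                # two basins meet here
    return labels


# Toy relief (assumed values): two valleys separated by a ridge in column 4.
row = [1, 0, 1, 3, 5, 3, 1, 0, 1]
relief = [row[:] for _ in range(3)]
markers = [[0] * 9 for _ in range(3)]
markers[1][1] = 1  # marker in the left valley
markers[1][7] = 2  # marker in the right valley
labels = watershed(relief, markers)
```

In this toy case every pixel left of the ridge receives label 1, every pixel right of it receives label 2, and the ridge column itself is marked as a dam.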

So, how to start?

We recommend a marker-controlled watershed segmentation. This means that the transformation works better if you can identify, or “mark,” foreground objects and background locations.

First, you need an image whose dark regions are the objects you are trying to separate. In this example, it would be the screws.

If you have a color image, first convert it to grayscale, then read in the grayscale image.

Second, apply a gradient filter to extract the outlines. The gradient is high at the borders of the screws and mostly low inside the objects.

Please note that applying the watershed transformation directly to the gradient image, without markers, usually results in “over-segmentation.”
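
As an illustration of the conversion step, the sketch below computes a weighted sum of the red, green, and blue channels using the common ITU-R BT.601 luminance weights. This is an assumption on our part: the software you use may apply different weights, and the function name `to_grayscale` and the tiny test image are hypothetical.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to rounded luminance values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


# Toy 2 x 2 color image (assumed values): white, black, red, blue.
color = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]
gray = to_grayscale(color)
```

Green contributes most to perceived brightness and blue least, which is why pure red and pure blue map to quite different gray values.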
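
One possible gradient filter, in keeping with the operations described earlier, is the morphological gradient: the dilation of the image minus its erosion. The sketch below assumes this choice and a flat 3 x 3 structuring element; other gradient filters, such as Sobel, are equally valid, and the helper names and test image are our own.

```python
def morph(image, op, size=3):
    """Apply op (max or min) over a flat size x size neighborhood."""
    h, w = len(image), len(image[0])
    r = size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the neighborhood, clipped at the image border.
            window = [image[j][i]
                      for j in range(max(0, y - r), min(h, y + r + 1))
                      for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = op(window)
    return out


def morphological_gradient(image, size=3):
    """Dilation minus erosion: large at edges, zero in flat regions."""
    dilated = morph(image, max, size)
    eroded = morph(image, min, size)
    return [[d - e for d, e in zip(drow, erow)]
            for drow, erow in zip(dilated, eroded)]


# Toy image (assumed values): a dark 3 x 3 object (50) on a bright belt (200).
image = [[50 if 2 <= y <= 4 and 2 <= x <= 4 else 200 for x in range(7)]
         for y in range(7)]
gradient = morphological_gradient(image)
```

The result is 150 along the object border and 0 both in the center of the object and in the corners of the background, which matches the description of a gradient that is high at the borders and low inside.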

Third, mark the foreground objects. A variety of procedures can be applied here to find the foreground markers, which must be connected blobs of pixels inside each of the foreground objects. In our example we use “opening” and “closing” operations.

An “opening” is used to cut the connection between two structures. This is achieved with an erosion. As soon as the connection has disappeared from the image, a dilation recovers the original structures, this time without the connection. A “closing” is the reverse sequence, a dilation followed by an erosion, and fills small gaps and holes.

Fourth, define the background markers. These are pixels that are not part of any object. We do not want the background markers to be too close to the edges of the screws we are trying to segment, so the background has to be “thinned.”
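
The effect of an opening can be seen on a small mask. The sketch below assumes a mask in which 1 marks object pixels and 0 marks background, and a flat 3 x 3 structuring element; the helper names and the mask itself are hypothetical and serve only to illustrate the erosion-then-dilation sequence.

```python
def morph(image, op, size=3):
    """Apply op (max or min) over a flat size x size neighborhood."""
    h, w = len(image), len(image[0])
    r = size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the neighborhood, clipped at the image border.
            window = [image[j][i]
                      for j in range(max(0, y - r), min(h, y + r + 1))
                      for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = op(window)
    return out


def opening(mask, size=3):
    """Erosion (min) followed by dilation (max)."""
    return morph(morph(mask, min, size), max, size)


# Two 3 x 3 blobs joined by a one-pixel bridge at row 2, column 4.
mask = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
opened = opening(mask)
```

The erosion shrinks each blob to its center pixel and wipes out the bridge; the dilation then restores both 3 x 3 blobs, but the bridge does not come back.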

Now we are ready to apply the watershed segmentation. The screws are separated and can be counted with a simple blob command in almost any machine vision software. In certain software, two images are necessary for carrying out the watershed transformation: the input image, preferably monochrome, and an image with the markers, which have to be in different colors.
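
What such a blob command does can be sketched as a connected-component count. The following plain-Python version assumes a binary mask (1 for object pixels, 0 for background or watershed line) and 4-connectivity; the function name `count_blobs` and the two toy masks are hypothetical, and commercial blob tools offer far more options.

```python
from collections import deque


def count_blobs(mask):
    """Count 4-connected regions of nonzero pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                count += 1  # found a new blob, now mark all of its pixels
                seen[y][x] = True
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx),
                                   (cy, cx - 1), (cy, cx + 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Two touching objects form a single blob ...
touching = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
# ... until a dividing line (column 3) separates them.
separated = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
n_touching = count_blobs(touching)
n_separated = count_blobs(separated)
```

The first mask yields one blob and the second yields two, which is why the dividing line produced by the watershed step is what makes a correct count possible.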