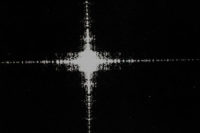

An integrated circuit on silicon is shown here without (right) and with (left) optical image processing, before any digital processing. The defect can be easily detected in the area marked on the left: the image intensity of the integrated circuit features has been reduced so much that the light intensity from the defect (the signal) is large compared with the light intensity from the circuit features (the noise). The high signal-to-noise ratio permits detection with reduced resolution. Source: Norman N. Axelrod Associates

In a vision system, light is collected from an object and made incident onto the photosensitive surface of a video camera: an array of photosensors, for example a charge-coupled device (CCD) or complementary metal-oxide semiconductor (CMOS) sensor, or a scanned analog photosensor surface. All of the information from the object, contained in the light intensity variations, is in the light incident onto the photosensors.

The light contains not only information from the feature of interest but also light from other possible sources (reflected and scattered light, room light and sunlight) that will interfere with the digital image processing of the photodetected signals. The smallest area of the photosensing surface that is accessible by the computer is a pixel. Each illuminated pixel provides a number that represents the intensity of light incident onto it.

Image processing is typically thought of as the digital image processing (DIP), by a computer, of an image in order to enhance the image or to extract information of interest. This DIP is done by a computer after the light has been incident onto the camera’s photosensitive surface. Optical image processing (OIP) is done on the light before the light strikes the photosensitive surface.


Optical Image Processing

The simplest tools of OIP are familiar to all operators of still and video cameras. OIP is routinely done with handheld cameras using a suitable color filter, polarizing filter, numerical aperture, lighting angle or sensing direction. Appropriately used, these all can increase the signal-to-noise ratio or optical contrast by altering the light before the light strikes the photosensitive surface.

In vision systems, OIP enhances the optical signal before the light reaches an electronic camera or photodetector, before electronic or digital signal processing, and before control decisions.

OIP uses variations in the light, due to changes in materials, structure and processing, to improve signal-to-noise or optical contrast. It produces signals from critical features only and suppresses background images. This has the obvious virtues of improving the imaging and possibly simplifying software and hardware. It also is fast.

Simple applications of more complex optical phenomena also are common to increase the signal-to-noise ratio. Some of these provide outstanding results without even forming an image.

For example, to gage a small-diameter cylindrical object such as a wire or fiber, the diffraction pattern produced when a laser beam illuminates the object provides simple information to gage the diameter. Even more remarkably, the smaller the diameter of the object, the more spread out the diffraction pattern will be and the more accurate the measurement will be, all without forming an image.
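As a rough illustration of why a thinner wire gives a wider pattern, the sketch below uses the standard small-angle, far-field (Fraunhofer) relation for a thin wire, which by Babinet's principle diffracts like a slit of the same width: adjacent dark fringes on a screen are spaced by about wavelength × distance / diameter. The function names, the helium-neon wavelength and the 1 m screen distance are illustrative assumptions, not values from the article.

```python
def fringe_spacing(wavelength_m, screen_distance_m, diameter_m):
    """Approximate spacing between adjacent dark fringes (small-angle, far field)."""
    return wavelength_m * screen_distance_m / diameter_m


def wire_diameter(wavelength_m, screen_distance_m, spacing_m):
    """Invert the same relation to estimate the wire diameter from a measured spacing."""
    return wavelength_m * screen_distance_m / spacing_m


WAVELENGTH = 632.8e-9  # assumed helium-neon laser wavelength, in meters
DISTANCE = 1.0         # assumed wire-to-screen distance, in meters

# Thinner wires spread the pattern out more.
for diameter in (200e-6, 100e-6, 50e-6):
    spacing = fringe_spacing(WAVELENGTH, DISTANCE, diameter)
    print(f"{diameter * 1e6:.0f} um wire -> {spacing * 1e3:.2f} mm fringe spacing")

# Going the other way: estimate the diameter from a measured fringe spacing.
estimated = wire_diameter(WAVELENGTH, DISTANCE, 6.328e-3)
print(f"6.328 mm spacing -> {estimated * 1e6:.0f} um wire")
```

Under this relation, halving the diameter doubles the fringe spacing, so a fixed uncertainty in the measured spacing becomes a smaller fraction of the result as the wire gets thinner.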

Optical spatial filtering, which relies on 2-D optical Fourier transforms, has been used to eliminate background when the geometry of the defects is different from that of the background.

This has been used in defect detection on complex integrated circuits (on both photomasks and silicon wafers) when the circuit images consist of straight edges. Since the optical Fourier transform is the same as the Fraunhofer diffraction pattern, the straight edges produce a diffraction pattern that lies along and near the X and Y axes (perpendicular to the Y and X edges, respectively, as with a single slit). An opaque cross placed in the plane of the diffraction pattern blocks this light, so the reformed image shows only the irregularly shaped defects.

A seemingly remarkable feat is the use of optical spatial filtering to filter the image of a 2-D rectangular grid of perpendicular (vertical and horizontal) identical lines. The final image after filtering and reimaging contains only vertical or only horizontal lines, depending on the orientation of the optical filter. The filter, in the diffraction (or transform) plane, contains only a vertical or horizontal slit oriented perpendicularly to the lines reimaged.
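Both filters can be mimicked numerically, which may help make the idea concrete. The sketch below is a toy model under stated assumptions: a discrete Fourier transform stands in for the lens, the 8 × 8 grid is built by adding a vertical-line pattern to a horizontal-line pattern, and the "defect" is a single brighter pixel. The names `dft_2d` and `spatial_filter` are made up for this example, and a real optical system records intensity rather than the signed amplitudes shown here.

```python
import cmath


def dft_1d(values, inverse=False):
    """Plain discrete Fourier transform of a 1-D sequence."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(values[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t in range(n))
        out.append(total / n if inverse else total)
    return out


def dft_2d(image, inverse=False):
    """2-D transform: along each row (x), then along each column (y)."""
    rows = [dft_1d(row, inverse) for row in image]
    cols = [dft_1d(list(col), inverse) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]  # indexed as [ky][kx]


def spatial_filter(image, passes):
    """Transform, keep only frequencies where passes(ky, kx) is true, reimage."""
    spectrum = dft_2d(image)
    kept = [[value if passes(ky, kx) else 0j for kx, value in enumerate(row)]
            for ky, row in enumerate(spectrum)]
    return [[value.real for value in row] for row in dft_2d(kept, inverse=True)]


N = 8
# Additive grid: vertical lines at x = 0, 4 plus horizontal lines at y = 0, 4.
grid = [[(1.0 if x % 4 == 0 else 0.0) + (1.0 if y % 4 == 0 else 0.0)
         for x in range(N)] for y in range(N)]

# Horizontal slit (only ky == 0 passes): vertical lines are reimaged.
only_vertical = spatial_filter(grid, lambda ky, kx: ky == 0)

# Opaque cross (both axes blocked): the grid vanishes, the defect remains.
with_defect = [row[:] for row in grid]
with_defect[2][5] += 1.0  # hypothetical point defect at row 2, column 5
defect_only = spatial_filter(with_defect, lambda ky, kx: ky != 0 and kx != 0)

for row in only_vertical[:3]:
    print([round(v, 2) for v in row])
peak = max((defect_only[y][x], y, x) for y in range(N) for x in range(N))
print("brightest pixel after cross filter (row, column):", peak[1:])
```

In this model the slit output no longer changes from row to row, so the horizontal lines are gone, and the brightest pixel after the cross filter sits at the defect location.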

OIP is preferable to DIP if it can significantly improve the amount of information from the objects of interest (the signal) and/or decrease the amount of information from objects or sources that do not contain information on features of interest (the noise).

Digital Image Processing

DIP is done by computer after the light has been incident onto the camera’s photosensitive surface. Once the light has struck an array of photosensors (or photodiodes), as in a CCD or CMOS camera, all of the information is contained in an array of numbers: each number corresponds to the intensity of the light that was incident onto that photodiode.

So how can one get information from an array of numbers? A simple example is that of locating the edges of the image of a vertical wire. The numbers corresponding to the areas outside the wire will ideally be 0 (black). The numbers corresponding to the areas inside the wire will ideally be 255 (white). Note that a common 8-bit intensity scale has 2^8 = 256 levels, running from 0 to 255 inclusive.

To detect the location of an edge of a vertical wire, the computer looks at the numbers representing pixel intensities in the image along a horizontal line through the wire. The computer then subtracts adjacent numbers. The difference of all adjacent numbers on the line inside the wire is zero. The difference of all adjacent numbers on the line outside the wire is zero. The difference of adjacent numbers on the horizontal line, when one number is from inside the wire and one number is outside the wire, will be 255. That is the location of the edge.
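The adjacent-pixel subtraction described above can be sketched in a few lines of Python. The scan-line values and the function name `find_edges` are illustrative assumptions; the wire is taken to occupy pixels 3 through 6 of a ten-pixel line.

```python
def find_edges(scan_line):
    """Return (index, difference) for each adjacent pair with a non-zero difference.

    An entry at index i means the edge lies between pixel i and pixel i + 1.
    """
    edges = []
    for i in range(len(scan_line) - 1):
        difference = scan_line[i + 1] - scan_line[i]
        if difference != 0:
            edges.append((i, difference))
    return edges


# Ideal horizontal line through a vertical wire: 0 outside, 255 inside.
scan_line = [0, 0, 0, 255, 255, 255, 255, 0, 0, 0]
edges = find_edges(scan_line)
print(edges)
```

The sign of the difference distinguishes the two edges: positive where the line enters the wire, negative where it leaves.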

In effect, this method takes the discrete derivative along the line, point by point. If the edge is not completely sharp, the same approach will still work, but there will be more non-zero differences between adjacent numbers. This new array of differences can be used to systematically compute the location of the edge.

The image processing that is used to detect a defect depends strongly on the properties of the defect and of the background behind the defect.
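For the blurred-edge case mentioned above, one plausible way to turn the array of differences into a single edge location is to take the centroid of the difference magnitudes. The article does not say which method is used, so this choice, the function name `subpixel_edge` and the sample values are all assumptions made for illustration. The sketch also assumes the line contains only one edge.

```python
def subpixel_edge(scan_line):
    """Estimate one edge position as the centroid of the adjacent-pixel differences.

    The difference between pixel i and pixel i + 1 is assigned to position i + 0.5.
    Returns None when the line contains no intensity change.
    """
    weights = [abs(scan_line[i + 1] - scan_line[i])
               for i in range(len(scan_line) - 1)]
    total = sum(weights)
    if total == 0:
        return None
    return sum((i + 0.5) * weight for i, weight in enumerate(weights)) / total


# A blurred edge: the intensity climbs from 0 to 255 over three steps.
blurred = [0, 0, 64, 191, 255, 255]
position = subpixel_edge(blurred)
print(position)
```

Because the differences here are symmetric about the middle of the ramp, the estimate falls halfway between pixels 2 and 3.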

The simplest case is where the intensity of the defect is very high (say 156) and the intensity of the background is very low (say 34). Then, the computer can use a simple thresholding function: if a pixel's intensity is greater than a chosen value of 60, set that pixel to 255 (white); if its intensity is less than 60, set that pixel to 0 (black). This eliminates background signals. The resulting white pixels then represent defects.
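A minimal sketch of this thresholding step, using the example intensities from the text. The small test image and the function name `threshold` are made up for illustration, and treating a pixel exactly equal to the threshold as background is an assumption the text leaves open.

```python
def threshold(image, level=60):
    """Set pixels brighter than level to 255 (white) and all others to 0 (black).

    A pixel exactly equal to level is treated as background (an assumption).
    """
    return [[255 if pixel > level else 0 for pixel in row] for row in image]


# Background near 34, with two bright defect pixels near 156.
image = [
    [34, 30, 36],
    [35, 156, 33],
    [34, 31, 150],
]
binary = threshold(image)
defects = [(r, c) for r, row in enumerate(binary)
           for c, pixel in enumerate(row) if pixel == 255]
print(binary)
print(defects)
```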

If the intensity of the defect is initially in the middle of the intensity numbers from the background, then the simple threshold operation by itself will not work. OIP often can be used to obtain high optical contrast between defects and background.

For example, in detecting defects on sutures used in eye surgery, near-infrared pass filters, which eliminate visible light, have been used to change the signal-to-noise ratio from about 1 to about 7. The signal-to-noise ratio of 7 provided much more reliability against possible interferences than the signal-to-noise ratio of 1.

This simple threshold method depends on either the natural high optical contrast between defect and background, or the high optical contrast obtained by use of optical methods.

However, if the defect signal is smaller than the background optical signal (and the background is repeatable), then defect signals can be detected by subtracting a defect-free image from an image with a defect. For example, this method has been used to detect defects on magnetic memory disks for computers, where the background signal is from repeatable scattered light from the disk.
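The subtraction approach can be sketched as follows. The pixel values, the `tolerance` parameter (which absorbs small frame-to-frame noise) and the function name `subtract_reference` are assumptions chosen for illustration, not details from the disk-inspection application.

```python
def subtract_reference(image, reference, tolerance=5):
    """Return (row, column) of pixels that differ from the defect-free
    reference image by more than tolerance."""
    defects = []
    for r, (image_row, reference_row) in enumerate(zip(image, reference)):
        for c, (pixel, reference_pixel) in enumerate(zip(image_row, reference_row)):
            if abs(pixel - reference_pixel) > tolerance:
                defects.append((r, c))
    return defects


# Repeatable background that varies far more than the defect signal does.
reference = [
    [120, 200, 90],
    [210, 80, 170],
    [60, 190, 140],
]
image = [row[:] for row in reference]
image[1][2] += 12  # weak defect, much smaller than the background variation
image[0][0] -= 2   # small noise that stays within the tolerance

found = subtract_reference(image, reference)
print(found)
```

A fixed threshold on the raw image could not isolate this defect, because its pixel value of 182 sits below several background pixels; only the difference from the reference reveals it.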

OIP can be applied to the information-containing light before it is incident onto the photosensitive surface of the video camera. DIP is then still needed to determine the action to take for diagnostics, detection, recognition, gaging and other functions.

DIP is applied to the numerical-intensity pattern recorded by the photodiode array of the video camera. This can be used without OIP for diagnostics, detection, recognition, gaging and other functions.