Overview

Our eyes (being a “stereo imaging pair”) perceive visual data in three dimensions, and we use that data intuitively to make decisions. A machine vision camera processing a planar, 2-D image, however, has significantly less data available for decision making. Essentially, a machine vision system with a 2-D image must rely exclusively on brightness, contrast, and X, Y and rotational position relative to the camera. It is very valuable when a machine vision application can take advantage of the additional data available in a 3-D view, such as the height of an object or even the height of every point in the image. Difficult inspections that might otherwise require multiple cameras or precision part presentation may be simplified when 3-D data are available. Other applications, such as automated guidance of motion and location or measurement of objects in 3-D space, fundamentally require 3-D information.

Simply put, the basic task for 3-D imaging in machine vision is to provide height information in a scene relative to a fixed or arbitrary plane. The height information is then processed further to provide spatial measurements, shape location and analysis, feature identification, and many other complex inspection tasks.

Many technologies and methods exist in the digital imaging world for the acquisition of 3-D images and information. Researchers in academia and industry are actively developing new techniques, and are improving on or commercializing existing, known methods. Some of the well-defined methods of acquiring 3-D information from images include:

- Stereo/multiple cameras

- Structured light line or “sheet of light”

- Multiple images with different lighting direction, color or shape

- Single- or multi-camera height from focus, shape, shading

- Light pattern projection (e.g., Microsoft Kinect)

- Time of flight

- Fringe projection with phase shift

- Interferometry

Some of these methods are used in computer imaging in the acquisition of 3-D scenes primarily for definition and reconstruction of an object. In some cases the technique is used for capture of motion in space (think of the Microsoft Kinect). Of the techniques mentioned above though, certain ones have gained wider usage in industrial automation, due in part to: 1) the nature of application requirements often found in machine vision projects, 2) reliability and robustness of the technique, and more critically, 3) the ease of implementation within the plant floor environment. The more common (for now) machine vision 3-D imaging techniques are stereo (or multiple) camera imaging, the use of structured light lines or patterns with single or multiple cameras, and time of flight sensors.

3-D Data for Machine Vision

In considering which technique(s) and components to use, it is important to understand the type of information that is available from a 3-D image and what would be most useful for the target application. In general, there are two types of 3-D image data that could be available in typical machine vision applications: single point location determination and point cloud image representation. (Note though, that these are not specifically linked to any of the imaging techniques mentioned above, and different techniques might produce either type of data depending upon implementation.)

Point Cloud

When we think about a 3-D scene, it is not too difficult to imagine that scene as a huge collection of individual points: a point cloud. Each point has an X, Y and Z position in space. A machine vision point cloud can also be referred to as a range map, because the height component of each point is usually relative to a specific plane, depending upon the imaging technique and components used. With a point cloud data representation, further processing is done to extract a single point in the scene (for example, the highest point above a plane); isolate features or objects based upon spatial height relative to a plane for measurement or quality evaluation; or search for and provide the location of objects in a complex scene based upon a stored 3-D model of the object’s shape, often for the purpose of robotic guidance.
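The processing steps described above can be sketched in a few lines. This is a minimal illustration, not any vendor's API: it assumes the range map arrives as rows of height values above the reference plane on a regular pixel grid, and the names `range_map_to_points`, `highest_point`, `points_above` and `pixel_size` are hypothetical.

```python
from typing import List, Tuple

# (x, y, z) with z = height above the reference plane (assumed convention)
Point = Tuple[float, float, float]


def range_map_to_points(range_map: List[List[float]], pixel_size: float) -> List[Point]:
    """Turn a range map (rows of heights) into a list of (x, y, z) points.

    pixel_size is the assumed spacing between neighboring pixels in world units.
    """
    return [
        (col * pixel_size, row * pixel_size, z)
        for row, line in enumerate(range_map)
        for col, z in enumerate(line)
    ]


def highest_point(points: List[Point]) -> Point:
    """Extract a single point: the one farthest above the reference plane."""
    return max(points, key=lambda p: p[2])


def points_above(points: List[Point], min_height: float) -> List[Point]:
    """Isolate a feature or object by keeping only points above a height threshold."""
    return [p for p in points if p[2] > min_height]


if __name__ == "__main__":
    # Tiny made-up range map: a raised feature on a nearly flat background.
    demo = [
        [0.0, 0.1, 0.0],
        [0.0, 2.5, 2.4],
        [0.1, 0.0, 0.0],
    ]
    cloud = range_map_to_points(demo, pixel_size=0.5)
    print(highest_point(cloud))
    print(points_above(cloud, min_height=1.0))
```

Matching a stored 3-D model against the cloud, as mentioned for robotic guidance, is considerably more involved and is not attempted here.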

Single Points

The data from a 3-D scene can be just the position of individual points related to specific features or objects. Extracting single point locations often requires less computation, resulting in faster processing. Position and height information from a single point can be used in an application, though multiple individual points are often processed together to further define an object’s spatial orientation and size for location and/or quality inspection. With only a single point or a small number of individual 3-D points, however, model matching and the processing of a complex or confusing scene cannot be achieved as they can with a full point cloud.

How the data are used in machine vision depends upon the application. One important consideration is the scope of the information required by the application. Notice that in all cases, a single, unprocessed 3-D image point provides an X, Y, and Z position relative to a plane. When that point is combined with other related points in the image, the data available to the application are a point (or points) with full 3-D planar representation: X, Y and Z position, and angles related to the yaW, Pitch and Roll (W, P, R) of an object. (Also common is an X, Y and Z position along with the rotation of the object about the Z axis, sometimes referred to as 2½-D machine vision.)
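As a rough illustration of how a few individual points can yield orientation, the sketch below derives a surface normal and tilt from three measured points, and a rotation about the Z axis from two. It is a simplified example under stated assumptions, not a full W, P, R solution: the function names are hypothetical, the points are assumed to lie on one flat face of the object, and Z is assumed to be the height axis.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def plane_normal(p1: Vec3, p2: Vec3, p3: Vec3) -> Vec3:
    """Unit normal of the plane through three points, oriented toward +Z."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # Cross product u x v is perpendicular to both in-plane vectors.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("points are collinear; no unique plane")
    if nz < 0:  # flip so the normal points away from the reference plane
        nx, ny, nz = -nx, -ny, -nz
    return (nx / length, ny / length, nz / length)


def tilt_from_z_deg(normal: Vec3) -> float:
    """Angle in degrees between the surface normal and the Z axis."""
    return math.degrees(math.acos(max(-1.0, min(1.0, normal[2]))))


def rotation_about_z_deg(p1: Vec3, p2: Vec3) -> float:
    """In-plane rotation of the line p1 -> p2 (the 2½-D case); height is ignored."""
    return math.degrees(math.atan2(p2[1] - p1[1], p2[0] - p1[0]))


if __name__ == "__main__":
    n = plane_normal((0.0, 0.0, 0.0), (1.0, 0.0, 1.0), (0.0, 1.0, 0.0))
    print(n, tilt_from_z_deg(n))
    print(rotation_about_z_deg((0.0, 0.0, 0.0), (1.0, 1.0, 0.0)))
```

In practice more than three points would usually be fitted (for example by least squares) to reduce the effect of measurement noise.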

A clear understanding of application requirements for image content and the required data output is important in order to define the appropriate 3-D imaging techniques and components for the machine vision project.

3-D Imaging Techniques and Components

As mentioned earlier, a wide variety of imaging techniques exist that can provide 3-D information for a machine vision application. We will briefly review three of these techniques, the components used, and typical applications.

Stereo

Stereo imaging involves the use of two cameras to analyze a single view from different perspectives (much like human eyes). Through proper calibration of the cameras, the geometric relationship of the two sensors is defined relative to a fixed world coordinate system. A relatively simple task with this imaging approach is to locate the same (or corresponding) target feature in each field of view and apply transforms to determine the position of that point in 3-D space. Real difficulties arise, though, when a feature is not well or uniquely defined in the two views. This is called the correspondence problem. Higher-level algorithms for stereo camera 3-D imaging use techniques like epipolar geometry, which restricts the search for a matching point to a single line in the other image, to improve the results for point-to-point correspondence. In this manner, stereo imaging can produce a 3-D point cloud of the scene, although the data might not be valid for every pixel in the image.

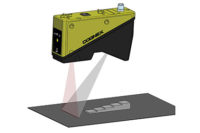
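Once a corresponding feature has been found in both views, the "apply transforms" step reduces, in the simplest case, to triangulation from disparity. The sketch below assumes an idealized, rectified pinhole stereo pair (parallel optical axes, identical focal lengths, matching image rows); the function and parameter names are hypothetical, and a real system would take these values from its calibration.

```python
from typing import Tuple


def triangulate_rectified(
    u_left: float,
    v: float,
    u_right: float,
    focal_px: float,
    baseline: float,
    cx: float,
    cy: float,
) -> Tuple[float, float, float]:
    """Return (X, Y, Z) in the left camera frame for one matched feature.

    u_left, u_right: column of the feature in the left and right images (pixels)
    v:               shared row of the feature (pixels; rows match after rectification)
    focal_px:        focal length expressed in pixels
    baseline:        distance between the two camera centers (sets the output units)
    cx, cy:          principal point of the left camera (pixels)
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("non-positive disparity: bad match or point at infinity")
    z = focal_px * baseline / disparity  # depth is inversely proportional to disparity
    x = (u_left - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)


if __name__ == "__main__":
    # Made-up numbers: 1000 px focal length, 100 mm baseline, 100 px disparity.
    print(triangulate_rectified(700.0, 480.0, 600.0, 1000.0, 100.0, 640.0, 480.0))
```

Because depth varies with the inverse of disparity, a one-pixel matching error costs more depth accuracy for distant points than for near ones, which is one reason the correspondence step matters so much.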

Structured light lines and projected patterns

As a simplified explanation, systems that use structured light take advantage of the geometric distortion of a known illumination pattern when it falls on a complex 3-D surface. Most common in machine vision is the projection of a line of light (also called a “sheet of light”) onto a surface. A standard camera captures many images of just the light line as the object (or the camera and illuminator) moves. With a properly calibrated system, the individual line images are combined to provide a full point cloud representation of the 3-D profile of the scene. In some cases, projected light patterns are used with stereo cameras to help overcome the correspondence problem, because the known light patterns can be more recognizable than random or undefined features.
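The way individual line images combine into a point cloud can be sketched with a deliberately simplified geometry. The example assumes the camera looks straight down at the reference plane with a uniform scale (`mm_per_px`), the laser sheet is inclined at a known angle from the camera's viewing direction, and the part advances a fixed distance between images. Under those assumptions a surface at height h shifts the line sideways by h·tan(angle). All names are hypothetical; a calibrated system would replace this formula with its own mapping.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def line_shift_to_height(shift_px: float, mm_per_px: float, laser_angle_deg: float) -> float:
    """Height above the reference plane from the lateral shift of the laser line."""
    return shift_px * mm_per_px / math.tan(math.radians(laser_angle_deg))


def profiles_to_point_cloud(
    profiles: List[List[Optional[float]]],
    mm_per_px: float,
    laser_angle_deg: float,
    scan_step_mm: float,
) -> List[Point]:
    """Stack one height profile per captured image into an (x, y, z) point cloud.

    profiles[i][col] is the line shift in pixels found in image column col of
    image i, or None where the line was not detected (occlusion, dark surface).
    """
    cloud: List[Point] = []
    for i, profile in enumerate(profiles):
        y = i * scan_step_mm  # position along the direction of motion
        for col, shift in enumerate(profile):
            if shift is None:
                continue  # no valid data for this pixel
            z = line_shift_to_height(shift, mm_per_px, laser_angle_deg)
            cloud.append((col * mm_per_px, y, z))
    return cloud


if __name__ == "__main__":
    scans = [[0.0, 10.0], [None, 20.0]]
    for point in profiles_to_point_cloud(scans, 0.1, 45.0, 0.5):
        print(point)
```

The `None` entries reflect that a line scan, like stereo, may not yield valid height data at every pixel.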

Time of flight

The basic concept of time of flight imaging is to project light onto an object and measure the time the light takes to return to the sensor, from which the distance is calculated. These sensors are in wide use, but currently lack the spatial resolution, in both position and distance, to be appropriate for demanding machine vision tasks. Improvements to the technology are being implemented by some vendors.
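The distance calculation itself is simple, and working through it hints at why distance resolution is hard to achieve. The sketch below assumes direct measurement of a round-trip pulse time; the function names are illustrative, and actual sensors may instead infer the delay from the phase shift of modulated light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(time_s: float) -> float:
    """Distance to the object in meters; the light travels out and back, hence the / 2."""
    return SPEED_OF_LIGHT_M_PER_S * time_s / 2.0


def timing_needed_for(depth_resolution_m: float) -> float:
    """Round-trip timing difference (seconds) that corresponds to a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    print(distance_from_round_trip(10e-9))  # a 10 ns round trip is roughly 1.5 m
    print(timing_needed_for(0.001))         # 1 mm of depth is a few picoseconds
```

Resolving one millimeter of height means distinguishing round-trip times that differ by only about 6.7 picoseconds, which illustrates why fine distance resolution is demanding for this approach.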

Implementation Challenges

Machine vision using 3-D imaging can require significantly more effort in implementation and integration than 2-D imaging regardless of the technique. In particular, 3-D systems and components must be carefully set up and calibrated in order to obtain accurate data. There are some self-contained systems on the market that are pre-calibrated to a specific field of view (in the 3-D sense, a cube, not a flat square), and indeed these components are easier to use but come with some restrictions in exchange. As this is still an imaging task, lighting remains a key consideration. All of the usual precautions regarding the use of correct and adequate light for the application still apply, and possibly more so in that part and feature variation may be more pronounced when attempting to image an object that is expected to change in position and orientation.

Nonetheless, as with all machine vision, the better the available data, the better the potential for robust and reliable applications. As 3-D inspection techniques become more readily available, and familiar to the machine vision engineer, the value of the technology will be apparent for a growing subset of machine vision tasks.