Items Tagged with 'machine vision'

ARTICLES

Vision & Sensors | Machine Vision 101

There are more than 10 considerations. Understand each one and how it affects application performance.

New Product

Teledyne MicroCalibir Long Wave Infrared Compact Thermal Camera Core

April 24, 2024

Vision & Sensors | Vision

Integrated, Centrally-Managed Machine Vision for Built-In Quality

If we can bridge the confidence gap between underperforming legacy vision systems and manufacturers’ needs today, the rate of adoption is sure to grow exponentially.

April 2, 2024

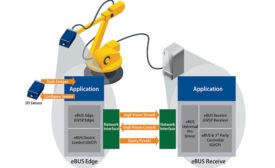

Systems Integration

Systems integration for machine vision solutions – Driving application success with current and future technologies

In the rapidly changing and expanding landscape of imaging hardware components and software solutions, the job of systems integration is as important as ever.

April 1, 2024

Quality in Automation | Robotics

Advanced Automation Delivers the Ultimate in Quality Control

When considering the type of robot for automating inspection tasks, cobots are often the initial go-to solution.

March 29, 2024

EVENTS

Industry Featured Event

5/1/24 to 5/2/24

Music City Center

201 Rep. John Lewis Way S

Nashville, TN

United States

The Quality Show South

Copyright ©2024. All Rights Reserved BNP Media.