Vision & Sensors - Image Analysis

Counts

Using algorithms to extract quantitative information from an image.

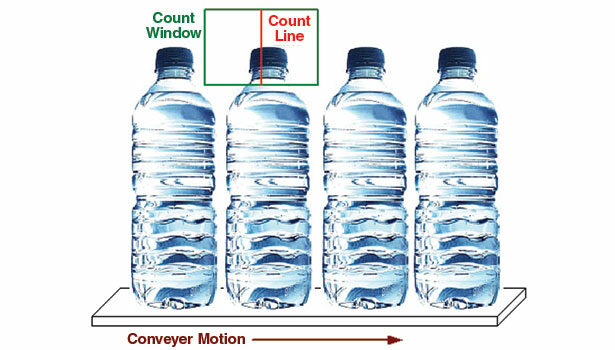

Counting Multiple Objects in Ordered or Random Positions. Source: Teledyne DALSA Industrial Products, Inc.

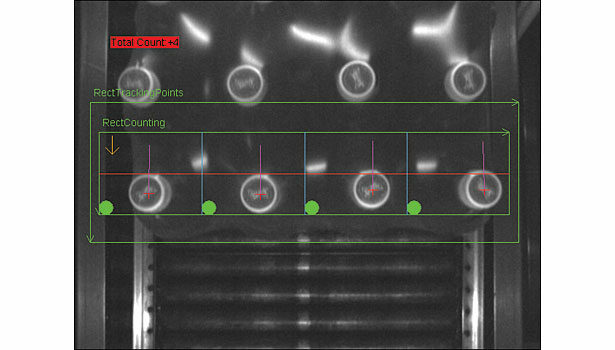

Caps are detected in the RectTrackingPoints image window and counted when they cross the Count Line (red line) in the four Count Windows (green and blue lines). Source: Teledyne DALSA Industrial Products, Inc.
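The caption does not say how the Count Line logic is implemented. One common approach, shown here only as a minimal sketch, counts a tracked object once when its centroid moves from one side of the line to the other between consecutive frames. It assumes an upstream tracker already supplies a stable ID and an (x, y) centroid for each cap in every frame. `LineCounter`, `line_y`, and `update` are hypothetical names, not part of the vision software pictured.

```python
from dataclasses import dataclass, field


@dataclass
class LineCounter:
    """Counts tracked objects whose centroid crosses a horizontal count line.

    Hypothetical sketch: assumes an upstream tracker supplies a stable ID and
    an (x, y) centroid in image coordinates (y grows downward) for every frame.
    """
    line_y: float
    count: int = field(default=0, init=False)
    _last_y: dict = field(default_factory=dict, init=False, repr=False)
    _counted: set = field(default_factory=set, init=False, repr=False)

    def update(self, detections):
        """Process one frame; detections maps track ID -> (x, y) centroid."""
        for track_id, (_, y) in detections.items():
            prev = self._last_y.get(track_id)
            # Count a downward crossing: above the line in the previous frame,
            # on or below it now. Each ID is counted at most once, so an object
            # jittering around the line is not counted twice.
            if (prev is not None and track_id not in self._counted
                    and prev < self.line_y <= y):
                self.count += 1
                self._counted.add(track_id)
            self._last_y[track_id] = y
        return self.count
```

Running one counter per Count Window would mirror the four windows in the caption. Counting only downward crossings is an assumption about the direction of product flow; a line crossed in the other direction would need the comparison reversed.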

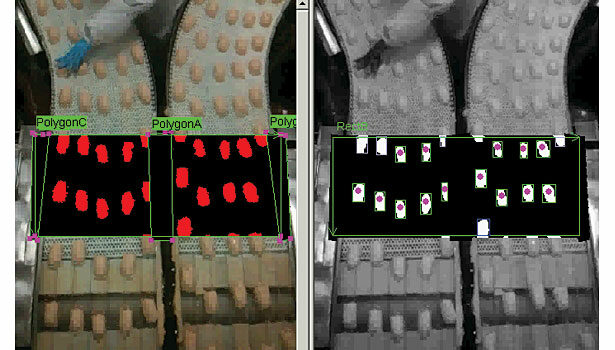

Patties are detected by their color (left) and then counted and tracked through the Count Window (right) using centroids as identifying features. Source: Teledyne DALSA Industrial Products, Inc.
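Centroid-based tracking can be sketched as follows, assuming the color-segmentation step has already reduced each frame to a list of patty centroids. The sketch links each new centroid to the nearest existing track within a distance threshold, starts a new ID otherwise, and counts the distinct IDs whose centroid ever falls inside a rectangular count window. `CentroidTracker`, `count_in_window`, `max_dist`, and the `(x0, y0, x1, y1)` window format are illustrative assumptions, not the vendor's method or API.

```python
import math


class CentroidTracker:
    """Greedy nearest-neighbour centroid tracker (hypothetical sketch)."""

    def __init__(self, max_dist):
        self.max_dist = max_dist  # largest allowed move between frames
        self.tracks = {}          # track ID -> last known (x, y) centroid
        self._next_id = 0

    def update(self, centroids):
        """Assign each (x, y) centroid in one frame to a track ID."""
        unmatched = dict(self.tracks)
        assigned = {}
        for cx, cy in centroids:
            best_id, best_d = None, self.max_dist
            for tid, (tx, ty) in unmatched.items():
                d = math.hypot(cx - tx, cy - ty)
                if d <= best_d:
                    best_id, best_d = tid, d
            if best_id is None:
                # No track close enough: treat it as a new object.
                best_id = self._next_id
                self._next_id += 1
            else:
                del unmatched[best_id]  # each track matches at most once
            assigned[best_id] = (cx, cy)
        # Tracks with no match in this frame are dropped.
        self.tracks = assigned
        return assigned


def count_in_window(frames, window, max_dist):
    """Count distinct track IDs whose centroid enters the window."""
    x0, y0, x1, y1 = window
    tracker = CentroidTracker(max_dist)
    seen = set()
    for centroids in frames:
        for tid, (x, y) in tracker.update(centroids).items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                seen.add(tid)
    return len(seen)
```

Greedy matching is order-dependent and can swap IDs when objects pass close together, so `max_dist` should be larger than the distance a patty moves between frames but smaller than the spacing between neighbouring patties. More robust trackers replace the greedy loop with an optimal assignment such as the Hungarian algorithm.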

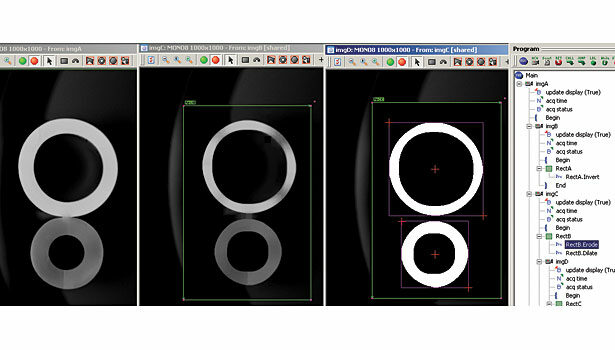

Touching rings (left) are separated by erosion (middle) and can then be measured as visually separate “blobs” (right) with machine vision and image analysis software (far right). Source: Teledyne DALSA Industrial Products, Inc.
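The separate-then-count step can be sketched in pure Python under a few stated assumptions: a binary mask (True marks an object pixel), a 3x3 square structuring element for erosion, and 8-connected labeling to count blobs. The demo shape is two squares joined by a one-pixel bridge rather than actual rings, and `erode`, `count_blobs`, `parse`, and `TOUCHING` are hypothetical helpers, not functions from the software shown.

```python
def erode(mask):
    """Binary erosion with a 3x3 square structuring element.

    A pixel stays on only if it and all 8 neighbours are on; pixels outside
    the image count as off, so the image border always erodes.
    """
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            out[r][c] = all(mask[r + dr][c + dc]
                            for dr in (-1, 0, 1) for dc in (-1, 0, 1))
    return out


def count_blobs(mask):
    """Count 8-connected regions of on pixels using an iterative flood fill."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                blobs += 1
                seen[r][c] = True
                stack = [(r, c)]
                while stack:
                    y, x = stack.pop()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if (0 <= ny < rows and 0 <= nx < cols
                                    and mask[ny][nx] and not seen[ny][nx]):
                                seen[ny][nx] = True
                                stack.append((ny, nx))
    return blobs


def parse(rows):
    """Turn strings of '#' (object) and '.' (background) into a mask."""
    return [[ch == "#" for ch in row] for row in rows]


# Two 4x4 squares touching through a one-pixel-wide bridge in row 3.
TOUCHING = [
    "............",
    ".####..####.",
    ".####..####.",
    ".##########.",
    ".####..####.",
    "............",
]

if __name__ == "__main__":
    mask = parse(TOUCHING)
    print(count_blobs(mask), count_blobs(erode(mask)))  # prints "1 2"
```

Erosion shrinks every object, not only the bridges between them, so areas or diameters measured on the eroded blobs run small. A common follow-up is to dilate each separated blob back toward its original size, or to split touching objects with a watershed instead.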
