“What’s trending?” is a phrase that has become ubiquitous in our social and business consciousness. A trend is a prevailing tendency that might (or might not) have long-term implications. What’s #trending on Twitter can be so fleeting that by the time you mention it someone will say “that’s so last hour’s news.” Alternately, something that was a trend may cease being “trendy” and become essential (e.g. computers, internet, smart phones, etc.). Trends can be based on statistical evidence (sales numbers, market results, YouTube views), molded by prevailing opinion, or even built by media coverage or marketing initiatives that create buzz around a product or idea.

For this discussion, “What’s trending?” in machine vision comes from personal observation (and opinion) of the market and the level of publicity apparent in recent media and marketing. To be clear, most trends in machine vision originate with competent and useful new or evolving technologies. However, in an engineering discipline like machine vision, it also seems appropriate to consider whether a trend is based on current practical use cases or on extreme forward-looking predictions of future capability that are not yet fully realized. For example, statements like “most homes will have a service robot” or “your consumer electronics will contain an embedded vision system for facial or gesture recognition” certainly may be true eventually, but labelling these as current trends in robotics or vision is premature. The challenge for machine vision users and solutions providers, then, is to understand what specific applications can benefit now from a given trending technology, and to avoid the trends that are not viable in the short term or not a good fit over the long term for their application(s).

Here are some often discussed, recent machine vision trends along with practical information that might impact selection and implementation of these technologies for machine vision in industrial automation.

3D Imaging for metrology and vision guided robotics (VGR)

The expansion of components for 3D imaging in the machine vision market is a strong trend that is being driven by high demand for 3D measurement and guidance, and by increased availability of cost-effective technologies that are part of 3D imaging systems. Part of the expansion is a proliferation of algorithmic capability for certain applications like 3D measurement (metrology), robotic guidance (VGR – vision guided robotics and related tasks like bin-picking or random object pick and place), and autonomous mobile robot (AMR) guidance and safety.

Three-dimensional imaging systems capture a view of a physical space and provide data that represent points in the scene containing depth as well as familiar 2D “planar” (x and y) locations. A few available components also provide a grayscale (contrast) or even a color image along with the 3D data. The fundamental benefit of 3D imaging is in providing a 3D location, but a further important benefit is that the 3D image is generally “contrast invariant.” That is, the image information allows the software to process depth changes rather than changes in surface color features or shadows.
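As a rough illustration of why depth data sidesteps contrast problems, the sketch below runs the same simple edge test on a grayscale scan line and on a height scan line. The data values, thresholds, and the `find_edges` helper are all hypothetical and chosen only for illustration; real 3D components deliver far denser data and use more robust processing.

```python
def find_edges(profile, threshold):
    """Return indices i where |profile[i + 1] - profile[i]| exceeds threshold."""
    return [i for i in range(len(profile) - 1)
            if abs(profile[i + 1] - profile[i]) > threshold]

# Hypothetical scan line across a flat part. Samples 3-4 cross a dark
# printed mark (no height change); samples 7-8 cross a raised feature
# that has the same gray level as the surrounding surface.
gray = [200, 200, 200, 40, 40, 200, 200, 200, 200, 200]          # 8-bit intensity
height_mm = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.0, 2.0, 0.0]   # depth data

gray_edges = find_edges(gray, threshold=50)          # responds to the printed mark
height_edges = find_edges(height_mm, threshold=0.5)  # responds to the raised feature

print("grayscale edges at:", gray_edges)
print("height edges at:", height_edges)
```

In this toy case the grayscale profile reacts only to the printed mark, while the height profile reacts only to the physical step, which is the behavior the paragraph above describes.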

Three-dimensional imaging is a powerful technology for machine vision applications. It could easily be said that it has moved from “trending” to a standard part of the machine vision toolbox.

3D imaging for robot guidance is driving new applications in a variety of industries including warehousing/distribution, and general manufacturing. Source: IDS/Ensenso

Practical implementation

Differentiation of 3D components can be difficult, and a detailed comparison of capabilities is outside the scope of this discussion. However, the first step is to identify the type of application being targeted. Requirements for resolution, both in depth and in the horizontal X,Y plane, need to be analyzed. While most systems offer generic capabilities, a growing number of components target and excel in specific tasks, specializing, for example, in 3D measurement or in 3D bin picking. That said, beyond being able to deliver the appropriate precision metrics for the application, what primarily drives the success of any 3D solution is the software implementation and system integration.

Practical limitations

On the surface, integration of 3D imaging can appear straightforward, but there are challenges in the details for specific applications. Some of these include:

While it might seem obvious, in applications where the object or the imaging system are in motion, error in the position of either might introduce measurement error that must be considered.

Almost all 3D imaging systems exhibit some level of “3D data drop-out,” a condition where shadowing of features relative to the active illumination and camera angles creates a void in the 3D information. Overcoming this situation is possible with some imaging techniques depending on the application.

Simply put, not all 3D machine vision applications are “ready for prime time.” For example, while applications in picking randomly oriented, homogeneous (all the same) objects or “bin picking” have been well solved and can be considered generically viable in many cases, picking heterogeneous and unknown objects, parcels, boxes, etc. remains a challenge for 3D imaging in many cases. Also, 3D reconstruction of an object or surface for measurement or differentiation can be challenging at production rates since many images may be required to fully model and analyze the part.
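To make the drop-out limitation above more concrete, here is a minimal sketch that measures the fraction of missing points in a depth map and fills voids from a second view. It assumes, purely for illustration, that missing points are stored as `None` and that both views have already been registered to the same grid; the function names and data are hypothetical, and actual 3D components expose drop-out in their own formats.

```python
def dropout_fraction(depth_map):
    """Fraction of points in a depth map that carry no 3D data (None)."""
    values = [z for row in depth_map for z in row]
    return sum(z is None for z in values) / len(values)

def merge_views(view_a, view_b):
    """Fill voids in view_a with data from view_b (same grid assumed)."""
    return [[a if a is not None else b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(view_a, view_b)]

# Hypothetical 2 x 3 depth maps (mm) from two camera/illumination angles.
view_a = [[10.0, None, 10.2],
          [10.1, None, None]]
view_b = [[10.0, 12.5, None],
          [None, 12.4, None]]

merged = merge_views(view_a, view_b)
print("drop-out, single view:", dropout_fraction(view_a))
print("drop-out, merged views:", dropout_fraction(merged))
```

Note that one point remains empty after merging because it is shadowed in both views, which is why a second view reduces but does not necessarily eliminate drop-out.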

Non-visible imaging: infrared wavelengths

Wider availability and improved performance of imaging components to capture and create an image from non-visible light in infrared wavelengths is a trending capability that can positively impact a variety of machine vision applications. Part of this trend is the proliferation of LED illumination capable of creating light in various IR wavelengths. The use cases for this type of imaging are widespread, and identifying where and when IR imaging may benefit an application is dependent mostly on the object being imaged and the needs of the application.

Imaging of “near infrared” (NIR) wavelengths from about 700-1000nm has been used in machine vision for many years. Cameras to image “short-wave infrared” (SWIR) wavelengths from about 1000-2800nm also are not completely new, but advances in sensor technology recently have made these cameras more practical in automation applications. And finally, thermal imaging, or imaging of emitted IR wavelengths from about 7000-14000nm is now possible with small cameras (microbolometers) that are uncooled and well suited for automated inspection.
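The approximate band limits quoted above can be captured in a small lookup, which may be handy when checking whether a given light source or sensor falls in a particular range. This is only a sketch: the limits are the "about" figures from the text, the treatment of the shared 1000nm boundary is an arbitrary choice, and the names are hypothetical.

```python
# Approximate band limits in nanometers, taken from the figures in the text.
BANDS_NM = [
    ("NIR", 700, 1000),
    ("SWIR", 1000, 2800),
    ("thermal", 7000, 14000),
]

def classify_wavelength(nm):
    """Return the infrared band name for a wavelength, or a fallback string."""
    for name, low, high in BANDS_NM:
        if low <= nm < high:  # a boundary value is assigned to the upper band
            return name
    return "outside the bands discussed here"

for wavelength in (550, 850, 1450, 10000):
    print(wavelength, "nm ->", classify_wavelength(wavelength))
```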

Practical implementation

Non-visible imaging has specific uses. NIR has been used either to eliminate worker distraction and discomfort from the high-power glare of machine vision lights, or to highlight features on specific parts where the IR light might respond in a different way to colors or certain materials. SWIR wavelengths are transmitted by some materials that are completely opaque to visible light (for example many plastics) and absorbed by some that are transparent to it (for example water). Thermal imaging is the only solution when heat profiles must be tested in an automated environment.

Practical limitations

Overall, if the targeted wavelength provides the desired imaging result, non-visible imaging is a good choice. However, bear in mind that the application base is a small subset of the use cases in the machine vision portfolio. Limitations could include:

It is not always possible to predict exactly how NIR or SWIR illumination will interact with the materials to be inspected. Testing of the application is recommended.

A common challenge in thermal imaging in automation is to develop a reliable baseline for the desired heat profile relative to the background temperature. Inspection for a “too hot” part for example must be done before the part has cooled due to heat dissipation, and that cooling must be consistent from part to part in an inspection process.

Costs are decreasing, but the SWIR and thermal components may be more expensive than visible imaging components.
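The timing concern in the thermal imaging item above can be estimated with a simple cooling model. The sketch below assumes Newton's law of cooling, which the article does not specify and which is only an approximation for real parts; the cooling constant, temperatures, and function names are hypothetical and would have to be determined by testing.

```python
import math

def temperature_at(t_s, start_c, ambient_c, k_per_s):
    """Part temperature after t_s seconds under Newton's law of cooling."""
    return ambient_c + (start_c - ambient_c) * math.exp(-k_per_s * t_s)

def seconds_until(threshold_c, start_c, ambient_c, k_per_s):
    """Time for the part to cool from start_c down to threshold_c."""
    if not ambient_c < threshold_c < start_c:
        raise ValueError("threshold must lie between ambient and start temperature")
    return math.log((start_c - ambient_c) / (threshold_c - ambient_c)) / k_per_s

# Hypothetical values: a part leaves the process at 120 C in a 25 C room,
# and the inspection must still see it above 90 C to flag it as "too hot".
window_s = seconds_until(threshold_c=90.0, start_c=120.0, ambient_c=25.0, k_per_s=0.05)
print(f"inspection window: about {window_s:.1f} s")
```

If the cooling constant varies from part to part (different mass, airflow, or fixture contact), the window varies too, which is why consistent cooling matters for a reliable baseline.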

Embedded imaging

The case for embedded imaging as a machine vision trend depends upon what seems to be a discrepancy in the marketplace as to the definition of the technology. One definition, which classifies embedded vision as any device that combines image capture and processing, covers a very broad and perhaps overlapping segment of what also are traditional machine vision components. One might alternately constrain embedded vision to those devices that are completely integrated at a lower level (SOCs – systems on a chip, SOMs – systems on modules, or single-board computers) to be incorporated into a larger device. Easy-to-imagine use cases might include self-driving automobiles, AMRs, or even smart phones. In machine vision, the term also could apply to an emerging trend where cameras contain on-board embedded vision processing to perform application-specific tasks in the camera, rather than on an external computer.

Practical implementation

Embedded vision, whether a SOM, SOC, or camera with embedded processing, is characterized in one sense by the presence of an embedded vision processor, usually (but not exclusively) a GPU (graphics processing unit) or an FPGA (field programmable gate array). In either case, the processor is programmed for a specific task that is either a complete application or some image processing. In most implementations, an embedded vision processor requires low-level programming in order to configure or create applications. Devices for embedded vision have different interfaces to host systems than standard machine vision components, an important consideration for implementation.

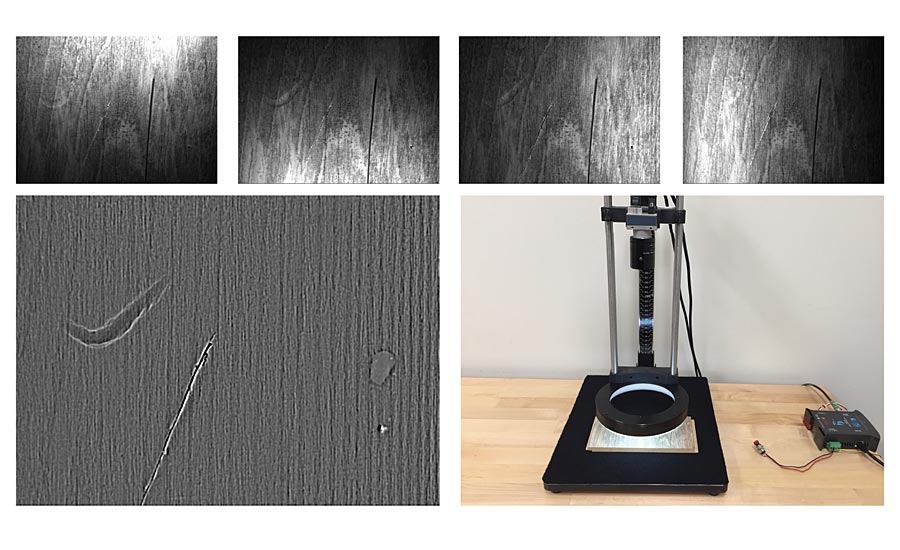

By combining images created with illumination directed at multiple angles relative to an object surface, features not strongly visible in grayscale images might be more reliably visualized. In these photos, a 3D image produced from four grayscale images clearly shows defects in the board surface. Source: CCS America

Practical limitations

For general purpose machine vision, the use of low-level embedded vision in specific single-purpose applications likely is not practical as programming an embedded vision device remains more of a development than an integration task. Machine vision cameras with embedded processing may be more practical for certain applications. The immediate broader use case for embedded vision in machine vision might be in the implementation of cameras with “pre-programmed” embedded image processing applications—particularly AI or deep learning, and in commercial imaging targeted for very specific tasks.

Liquid lenses and high-resolution optics

Two apparent trending technology directions in machine vision optics are the proliferation of more advanced high-resolution and large-format camera lenses, and the move towards more seamless integration of component liquid lenses. The first trend is practical; as camera resolutions increase and pixel sizes decrease the demand for better optics is understood by and being met by many component lens suppliers. More product specifications include details of lens performance, for example charts showing the system’s modulation transfer function (MTF), a good measure for lens comparisons.
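One way to see why smaller pixels demand better optics is to compute the sensor's Nyquist frequency, the highest spatial frequency the pixel grid can sample, which is one cycle per two pixels. The sketch below applies that relationship to two example pixel sizes; the sizes and the function name are illustrative and not tied to any particular camera. A lens MTF chart can then be read at or near this frequency when comparing lenses.

```python
def nyquist_lp_per_mm(pixel_size_um):
    """Sensor Nyquist frequency in line pairs per mm for a given pixel pitch."""
    pixel_size_mm = pixel_size_um / 1000.0
    return 1.0 / (2.0 * pixel_size_mm)  # one line pair spans two pixels

for pixel_um in (5.5, 3.45, 2.4):  # illustrative pixel sizes in micrometers
    print(f"{pixel_um} um pixels -> {nyquist_lp_per_mm(pixel_um):.1f} lp/mm")
```

As the pixel size shrinks, the frequency at which the lens must still deliver usable contrast rises, which is consistent with the demand for higher-resolution lenses described above.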

Liquid lenses are devices that can change focus depending on an external signal (usually a change in current or voltage) without requiring any mechanical change in the lens as in manually focusable lenses. This is not a new technology; liquid lenses have been used in smart sensors, smart cameras, and other machine vision devices for years. However, recent advances in the integration of these devices with machine vision optics and cameras are bringing the technology more into the realm of general-purpose use.

Practical implementation

For all machine vision applications, matching the lens to the resolution and physical requirements of the application is a required integration task. The availability of more lens selections provides better choices for the user and solution provider.

Liquid lenses are valuable in applications where the imaging distance may change from part to part. They enable very fast, dynamic focus changes and can even provide auto focus capability.
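As a rough guide to how much focus change a part-to-part distance variation calls for, the sketch below uses a thin-lens approximation. It assumes, for illustration only, that the liquid lens sits in front of a fixed lens focused at infinity, so the optical power needed to focus at a working distance is roughly the reciprocal of that distance in meters. Real assemblies differ, and the function names and distances are hypothetical.

```python
def focus_power_diopters(working_distance_mm):
    """Approximate added optical power needed to focus at a working distance."""
    return 1000.0 / working_distance_mm  # 1 / distance in meters

def focus_shift_diopters(from_mm, to_mm):
    """Change in optical power when the working distance changes."""
    return focus_power_diopters(to_mm) - focus_power_diopters(from_mm)

# Hypothetical case: the part surface moves from 300 mm to 250 mm from the lens.
shift = focus_shift_diopters(300.0, 250.0)
print(f"required focus shift: about {shift:.2f} diopters")
```

Comparing a figure like this against the tuning range in a liquid lens specification is one quick feasibility check before testing on the actual application.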

Practical limitations

Selection and differentiation of optical components can be difficult with the broad range of product offerings. It is important to evaluate the specifications and the characteristics of the component relative to the specific application.

Liquid lens technology can be difficult to implement, but some component cameras with embedded processing are becoming available that automatically control liquid lenses. Some add-on liquid lens components may limit the sensor coverage of the associated lens (not so with integrated liquid lens systems). Be aware too that focus changes will impact calibration, so liquid lenses may not be suited for applications requiring precise calibration.

Advanced lighting techniques and processing

Trending in illumination components for machine vision is the availability of controllable, multi-spectral devices that enable more flexibility and advanced capability in certain imaging situations. By changing monochromatic colors, one might be better able to overcome part family variations without multiple lighting devices, or even create a color image using multiple images of different illumination colors. High-rate imaging of multiple views using different lighting angles could be used to create 3D representations of an object or to provide a high dynamic range (HDR) image.
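The idea of building a color image from multiple monochrome captures can be sketched in a few lines. The example assumes a monochrome camera, a part that does not move between exposures, and three captures taken under red, green, and blue illumination; the tiny images and the `compose_color` name are hypothetical, and no balancing between channels is attempted.

```python
def compose_color(red_img, green_img, blue_img):
    """Combine three same-size monochrome images into one image of (R, G, B) tuples."""
    return [[(r, g, b) for r, g, b in zip(red_row, green_row, blue_row)]
            for red_row, green_row, blue_row in zip(red_img, green_img, blue_img)]

# Hypothetical 2 x 2 monochrome captures, one per illumination color.
under_red = [[250, 30], [120, 120]]
under_green = [[20, 240], [120, 120]]
under_blue = [[25, 35], [120, 10]]

color_image = compose_color(under_red, under_green, under_blue)
for row in color_image:
    print(row)
```

Because the three exposures are taken at different times, any part motion between them would show up as color fringing, so this approach fits best where the part is stationary or the imaging rate is high relative to the motion.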

AI and Deep Learning

Finally, let’s tackle what is arguably the most hyped machine vision trend in decades: AI, machine learning, and deep learning. AI, or artificial intelligence, is a branch of computer science dealing with ways computers can imitate human behavior. As a discipline, AI has been around since about the middle of the last century. AI doesn’t describe any particular technique, only the goal; any programming logic might be called AI, even simple if-then rules and decision trees. Machine vision search algorithms have been called artificial intelligence.

Machine learning is a subset of AI, and deep learning is a subset of machine learning. These terms are designations for techniques that realize AI through algorithms that learn. Machine learning is a general concept related to systems which, based on initial data input, can learn and improve their performance in a given task.

Deep learning is machine learning that uses “deep” neural networks to allow computers to learn essentially “by example.” The technique has been shown to excel in tasks such as image recognition, sound recognition, and language processing. Deep learning is highly computing intensive and usually requires special processor hardware (for example GPUs, graphics processing units with deep learning cores), particularly during the learning process. The software and hardware platforms available to execute deep learning in machine vision have expanded rapidly within the past few years.

Deep learning without question will have a long-lasting impact on machine vision, with the following practical implications in current general-purpose products.

Practical implementation

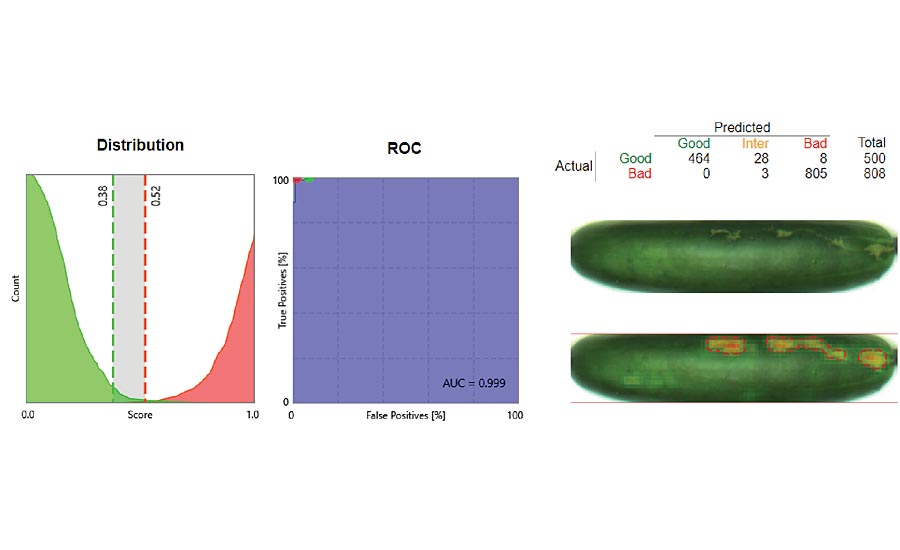

Deep learning for machine vision is fundamentally easy to implement when using readily available packaged software and/or hardware systems. The learning process (at minimum) requires a human to label training images in order to “classify” good vs. bad product. In some very simple applications, a small classification set might be enough for training to succeed. In many applications, the classification requires multiple image sets with each class containing many example images. Results often are provided as a “confidence” level that the target image contains a classified feature (good or bad).

Deep learning in machine vision works best in applications where good parts and parts with defects are well classified in the learning process. At the time of inspection, a well configured deep learning system will be able to infer defects even if those features are not identical to the trained defects. Source: Integro Technologies
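To illustrate how a “confidence” result might be turned into an inspection decision, the sketch below converts raw class scores to confidences with a softmax and sends low-confidence images to manual review. This is an assumption for illustration: packaged deep learning products report confidence in their own ways, and the scores, threshold, and function names here are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw class scores into confidences that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]  # subtract peak for stability
    total = sum(exps)
    return [e / total for e in exps]

def decide(scores, labels=("good", "bad"), min_confidence=0.9):
    """Return (decision, confidence); uncertain results go to review."""
    confidences = softmax(scores)
    best = max(range(len(labels)), key=lambda i: confidences[i])
    if confidences[best] < min_confidence:
        return "review", confidences[best]
    return labels[best], confidences[best]

clear_part = decide([2.0, -1.0])     # scores strongly favor "good"
unclear_part = decide([0.3, 0.1])    # scores are nearly tied
print(clear_part)
print(unclear_part)
```

Where the threshold is set is an application decision: a higher value sends more parts to review, while a lower value accepts more borderline classifications.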

The use of deep learning goes beyond simple inspection, and it is beginning to be used in a supporting role in many areas related to machine vision including robotics and safety.

Practical limitations

Deep learning is a technology that has the dubious honor of being at the peak of “inflated expectations” in the “Gartner Hype Cycle” (www.gartner.com) curve for three years in a row. That observation is not intended to be controversial or critical but is meant rather to highlight the need for diligent evaluation of this potentially useful machine vision technology when under consideration for use in your applications.

Despite the buzz, one must understand the potential limitations of the technology, which include:

First and foremost, image formation requirements are the same for deep learning applications as for standard machine vision. The image to be processed must successfully show all the features or defects that must be classified and inspected. Deep learning cannot overcome bad lighting and/or optical design (but will it eventually help with design?).

Deep learning is not a solution for all machine vision applications. It does well in situations where subjective decisions need to be made like a human inspector. In these cases, a good rule of thumb is that if a human could easily and quickly be trained on how to differentiate good from bad parts, deep learning likely could be also. It is not useful in applications that require confirmation of metrics, as in measurement or location.

Training may require a larger sample set and more human intervention than expected.

The results may not be 100% reliable on startup. Depending on the application, deep learning may initially find a low percentage of targeted defects or classified features, and further training on more images may be required to achieve near 100% reliability.
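Reliability on startup, as described in the last item above, is easier to track when it is measured against a labeled validation set. The sketch below computes the detection rate for defective parts before and after additional training. The labels and predictions are made-up illustrative data, not results from any system, and `detection_rate` is a hypothetical helper.

```python
def detection_rate(truth, predicted, target="bad"):
    """Fraction of true target-class parts that were classified as target."""
    total = sum(t == target for t in truth)
    if total == 0:
        raise ValueError("validation set contains no parts of the target class")
    hits = sum(t == target and p == target for t, p in zip(truth, predicted))
    return hits / total

# Hypothetical validation set: 4 defective parts and 6 good parts.
truth = ["bad"] * 4 + ["good"] * 6
initial = ["bad", "good", "good", "bad"] + ["good"] * 6  # after first training
retrained = ["bad", "bad", "bad", "bad"] + ["good"] * 6  # after more images

print("initial detection rate:", detection_rate(truth, initial))
print("detection rate after retraining:", detection_rate(truth, retrained))
```

A fuller evaluation would also track good parts that are wrongly rejected, since adding training images can shift that rate as well.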

The “trends” that are not “trendy”

In conclusion, it should be clear that emerging technologies in machine vision create trends that are well worth discussing and updating on a regular basis. However, we should maintain a solid perspective regarding the fundamental components in machine vision that continue to enable more productive manufacturing.

Not all machine vision applications call for implementation of the latest trending components. Certainly, these will enhance and expand the application of machine vision in specific areas. Just bear in mind that the “non trendy” technologies continue to advance in capability and use cases. Keep the new technologies in your toolkit, but don’t overlook using traditional machine vision in those many cases where the basic technology will serve your application well.