In 1969, I had a microphone perched next to the radio, prepared to record each Beatles song played, just to satisfy my obsession at the time. What resulted on my old reel-to-reel player was a series of songs missing the first five seconds of each. Today, I can play any song I want anytime I desire, and through streaming services I can be entertained endlessly by software that determines my preferences for me, and tees them up one after another. With another app, I can have my favorite sandwich delivered as I listen. The world has come a very long way.

Indeed, we are long past the days of wanting to throw our desktop computers through a window, but at the same time, we continue to license software products that fail to make our lives, or our jobs, any easier. When a software company discovers a way to disrupt an existing process, adhering to quality standards, or heeding the voice of the customer, is not always a primary consideration. If you are in a position to advocate for software that will drive quality efforts, this leaves you exposed: if staff do not embrace the solution, you’ve just wasted a lot of time, money, and personal credibility.

One way to evaluate the quality of software is through the application of “human factors.” Human factors is the study of how people respond (both physically and psychologically) to environments in general, and commercial products in particular. Addressing human factors in product design demands attention to our capabilities and limitations, with the aim of making the human-product interface efficient, effective and, ideally, a harmonious partnership.

Although the term “human factors” may be new to some readers, its origin as a field of study can be traced back to Greece in the 5th century BC, where Hippocrates II laid out in detail the optimal configuration of a surgery suite and its various instruments. Today, human factors consultants help organizations evaluate products and optimize work environments to improve quality and productivity while reducing fatigue.

The domain of human factors is vast, given all the situations, interactions and consequences it can affect. A narrower application within manufacturing settings is usability engineering, a field that focuses largely on human-computer interaction and can lead to interfaces that are usable, efficient and even elegant in design. “It is important to differentiate utility versus usability,” notes Wrae Hill MSc, RRT, FCSRT, a human factors consultant to Interior Health in British Columbia. “Utility measures whether the program works as intended from a technical standpoint, whereas usability measures a potentially naïve user’s response to the system.”

Given its importance to producing quality outcomes, we wanted to explore usability engineering as applied to the design of software that supports improvement initiatives run by quality departments. Good software would not only be competent at performing necessary tasks, it would also be embraced by stakeholders as the right solution for right now. As proof of concept, we studied an emerging software category destined to disrupt how companies approach the process of quality improvement. The application fits the category now known as “project portfolio management,” or PPM. We’ve long used portfolio management platforms to manage our own personal finances and brokerage accounts, so it makes sense to apply the same discipline to deciding which projects to resource and fund in the corporations we work within. And so the question is: can a PPM make our job easier? And is the solution scalable? Simply put, to be scalable, it must be usable.

It is critical that users of software feel supported by the tool, or usability suffers. The best PPM platforms will offer tools, training and other support features directly within the platform.

The PPM space has enjoyed a lot of attention since Forrester Research first suggested that “a comprehensive PPM tool investment is likely to provide an ROI of 250% or more” by helping companies to complete more improvement projects, complete them faster, and achieve stronger results. Since this study was published in 2009, Deloitte, E&Y, PMI, and Forbes have all published white papers celebrating the benefits of a PPM platform. Gartner recently predicted that by 2023, 80% of companies will have an enterprise project, program or product management office in place, adding to the $3.1 billion already spent on PPM solutions. But it’s one thing to entrust results to a white paper’s forecast; it’s another to verify in advance that your staff will embrace the solution and employ it to realize the advertised benefits. This is where understanding the product’s attention to human factors and usability engineering plays an essential role.

One quick litmus test of a product’s usability is to simply look at the training offered or recommended by the vendor. “Training is the last bastion of poor design,” according to Alphonse Chapanis, an American pioneer in the field of industrial design, widely considered one of the modern authorities on ergonomics and human factors. Accordingly, if a lot of training is required to become proficient in the application, this should serve as a red flag for potential customers. Among the PPM software products we studied, one popular solution recommended a nine-hour tutorial and a potential two-day course; a close competitor required only three hours to train in all features and functions because the tool has directional guidance directly within the system.

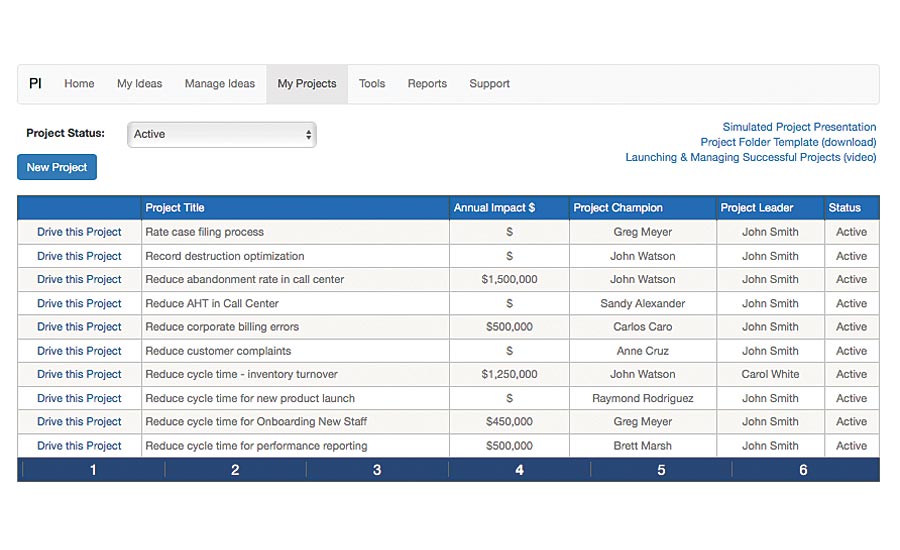

The best ideas in a PPM will advance to become live projects. Individual project leaders are guided through the demands of the work, while able to view other projects in which they play a supporting role.

Another useful resource for comparing product quality is one of the many review sites now in place to guide product procurement. If the product recommended to you does not have any published reviews on G2, Capterra (a division of Gartner Research), or other popular review sites, then this is yet another red flag. These review sites go to great lengths to verify reviews and their sources, and can play an important role in your due diligence. If reviews are poor, then you have significant cause for pause. For our study, we looked only at products with a minimum of 50 published reviews.

For the most thorough and objective analysis possible, we turned to the System Usability Scale (SUS), developed originally by an engineer at Digital Equipment Corporation in 1986 as a tool to evaluate computer systems in general, and later extended to other product categories. It takes the form of a brief survey completed by customers; responses are given on a Likert scale, which yields a score that can be compared against other products in the same category. The tool has now been used on over 275 product evaluations, and is compatible with the more recent requirements of ISO standard 9241, Part 11, which requires evaluation of a tool within the context of its use, including such parameters as effectiveness, efficiency and satisfaction.

System Usability Scale (“SUS”)

- I think I would like to use this product frequently

- I found the product unnecessarily complex

- I thought the product was easy to use

- I think that I would need the support of a technical person to be able to use the product

- I found the various functions of the product were well integrated

- I thought there was too much inconsistency in this product

- I imagine that most people would learn to use this product very quickly

- I found the product very awkward to use

- I felt very confident using the product

- I needed to learn a lot of things before I could get going with this product

Reprinted from Bangor, Kortum and Miller, “Determining what Individual SUS Scores Mean: Adding an Adjective Rating Scale,” Journal of Usability Studies, Pp. 114-123, Vol 4, Issue 3, May 2009

To include what we believed was an important dimension without lengthening the survey, we added the question “I think this product will help me contribute to my company’s improvement goals” in place of “I found the product very awkward to use,” a question whose answer we felt could be inferred from the other nine. We scored each question so that the highest level of satisfaction earned a 5, then added the ten scores for a total possible score of 50. We collected over 100 surveys across five products.
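
The scoring described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the study's actual tooling: the article does not say how negatively worded questions were handled, so the sketch assumes they are reverse-scored (6 minus the response) so that a 5 always represents the highest satisfaction. Which questions count as negatively worded is inferred from their phrasing, and the names `NEGATIVE_ITEMS` and `score_survey` are hypothetical. Note that this 50-point total differs from the standard SUS formula, which yields a score from 0 to 100.

```python
# Positions (0-based) of the negatively worded questions in the modified
# ten-question survey. ASSUMPTION: inferred from the question wording;
# the replacement question in position 7 is positively worded.
NEGATIVE_ITEMS = {1, 3, 5, 9}


def score_survey(responses):
    """Return the total score (10 to 50) for one completed survey.

    `responses` holds ten Likert answers, 1 (strongly disagree) to
    5 (strongly agree), in the order the questions are listed.
    ASSUMPTION: negatively worded questions are reverse-scored so that
    5 always means the highest level of satisfaction.
    """
    if len(responses) != 10:
        raise ValueError("expected exactly 10 responses")
    total = 0
    for position, answer in enumerate(responses):
        if answer not in (1, 2, 3, 4, 5):
            raise ValueError("each response must be an integer from 1 to 5")
        # Flip negatively worded questions: 1 becomes 5, 2 becomes 4, ...
        total += (6 - answer) if position in NEGATIVE_ITEMS else answer
    return total


# A respondent who agrees (4) with every positive statement and
# disagrees (2) with every negative one.
example_total = score_survey([4, 2, 4, 2, 4, 2, 4, 4, 4, 2])
```

Under these assumptions, a respondent who answers 3 to every question lands at 30, the neutral midpoint of the scale.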

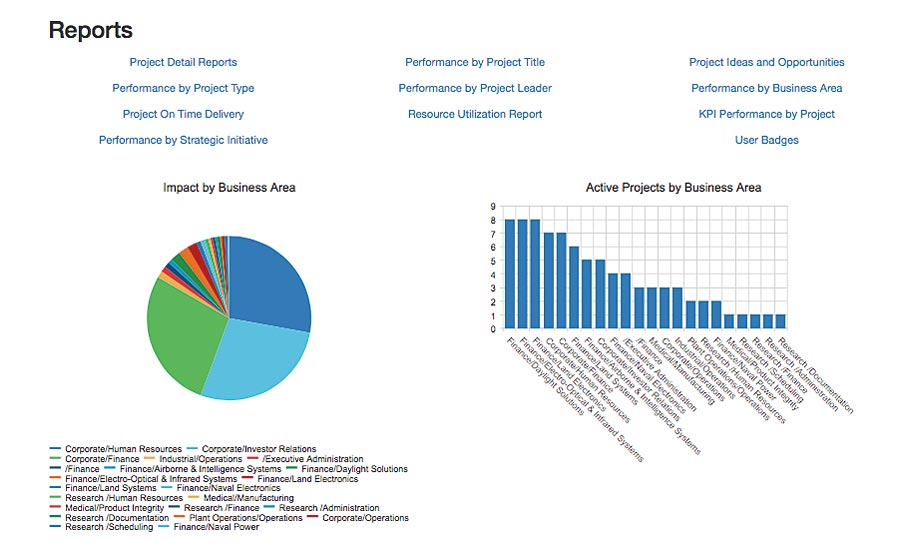

The PPM becomes the “single source of truth” for all quality improvement activities in the organization.

Across the products studied, total scores ranged from 30 to 37 out of a possible 50, with the strongest product scoring 3.96 on the question “I think this product will help me contribute to my company’s improvement goals.” We would consider a score of 3.0 on any single question to be a fairly neutral, “I’ll believe it when I see it” type of response, with scores closest to 4 representing a sense of bona fide confidence (we’ve already found from previous studies of customer satisfaction that no matter how much a customer values a product, they will never give it a 5/5 score). As an added form of validation, we found a correlation between high SUS scores for specific products and relatively high end-user evaluations on published review sites. Are PPM systems scalable? Certainly, there is strong evidence to suggest they are.

When it comes to casting your vote on any software procurement opportunity, it remains important to bring objective, measurable evidence to the conversation, and the SUS, combined with an evaluation of published reviews and training requirements, can be an important part of your due diligence. Hill also recommends exposing the product to a wide range of real-world end users of different ages and backgrounds, and being wary of usability claims that are not evident in the product demonstrations you witness. “At the end of the day,” he reminds us, “it is the end user who must be the judge of good usability.”