Machine vision solutions help advance quality by recognizing defects at more efficient rates than humans can, making for more consistent and faster production cycles. They also provide human operators with more information to help them make important adjustments to their production lines.

Alex Shikany, vice president of membership and business intelligence at A3, says that vision technology has evolved to the point where there is a wide variety of solutions available for virtually any application.

“New applications and industries are adopting more machine vision technology with each passing year,” Shikany says.

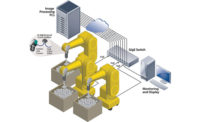

Machine vision is an automation technology that spans applications from inspection and on-line metrology to robotic guidance. In quality implementations, machine vision is typically used to detect non-conforming parts, assemblies, or products to ensure that defective parts are captured early.

“This fundamental function improves quality by helping manufacturers deliver high-quality end products and can also identify problems early in the process to help avoid adding value to an out-of-tolerance sub-component,” says David L. Dechow, principal vision systems architect, Integro Technologies.

“Expanding on these capabilities, machine vision becomes an integral part of Industry 4.0 relative to smart manufacturing and big data,” Dechow says. “Machine vision systems not only capture and filter the final products for quality, but also deliver data that can be directly used in tuning a process to help make sure that less non-conforming product is produced in the first place.”

Dechow cites an old machine vision axiom that one might be able to inspect quality into a process, but the technology is best utilized when the data it provides helps to make sure quality is a part of production as a whole.
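As a rough illustration of inspection data feeding back into process tuning, the sketch below separates two signals: a part that is out of tolerance and must be rejected, and a part that is still acceptable but has drifted far enough from normal variation that the process deserves an adjustment. It is a minimal sketch under stated assumptions, not a method described by any of the sources quoted here. The function names, the three-sigma limits, and the measurement values are all hypothetical.

```python
from statistics import mean, stdev

def control_limits(baseline, sigmas=3.0):
    """Derive (lower, upper) control limits from measurements taken
    while the process was known to be running well (hypothetical data)."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center + sigmas * spread

def classify(measurement, limits, tolerance):
    """Return 'reject' for an out-of-tolerance part, 'adjust' for an
    in-tolerance part outside the control limits, otherwise 'ok'."""
    lower_ctl, upper_ctl = limits
    lower_tol, upper_tol = tolerance
    if not (lower_tol <= measurement <= upper_tol):
        return "reject"   # non-conforming part: filter it out
    if not (lower_ctl <= measurement <= upper_ctl):
        return "adjust"   # still good, but the process is drifting
    return "ok"

# Hypothetical part widths in millimetres from a vision gauge.
baseline = [10.00, 10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97]
limits = control_limits(baseline)
tolerance = (9.5, 10.5)   # assumed drawing tolerance

for width in (10.01, 10.20, 10.70):
    print(width, classify(width, limits, tolerance))
```

The "adjust" result is the data-driven part: it flags drift while parts are still conforming, so the line can be tuned before non-conforming product appears.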

Rich Dickerson, marketing manager at JAI, agrees.

“Even the most ‘quality-by-design’ manufacturing process ultimately requires some level of inspection—or multiple inspections—to make sure that quality targets are met,” Dickerson says. “Machine vision performs the same role as traditional human quality control inspectors, but in most cases can handle a higher volume and detect a higher percentage of defects than its human counterparts.”

Because it can conduct countless inspections without variation, machine vision is more consistent than human inspectors. It also minimizes inconsistencies that can result from differences in judgement from person to person, Dickerson says.

Machine vision is also more sophisticated. Dickerson says that it can perform highly complex inspections involving microscopic details, precise shape or color matching, and even multispectral analysis if needed to detect certain defects.

“While it is possible for human inspectors to perform very complex inspections, the number of tools and training required typically limits human inspections to simpler tasks,” Dickerson says.

It also shines when it comes to throughput. Machine vision systems leverage high-speed interfaces and computers to perform highly complex inspections much faster than is humanly possible. Human errors increase with speed, while machine vision can maintain the same zero-defect performance at very high speeds.

The right machine vision solution is completely application-driven, says Micropsi Industries CEO Ronnie Vuine.

“Look at the application, find the most robust solution that does the job with the lowest total cost of ownership,” Vuine advises.

The most important path to a successful system is to first focus on and evaluate the needs of the production that can benefit from quality improvement, Dechow says.

“Once the system requirements are identified, design of a solution requires knowledge of the available technologies and experience in the implementation of a broad range of components and software,” he says. “The difficulty in executing this design can vary from the task of simple product selection to complex imaging and analysis system integration.”

Dickerson agrees.

“Depending on exactly what the system needs to do will enable you to determine if there is an off-the-shelf solution available or if a more custom solution needs to be built, either by your own vision team or by a system integration partner,” he says. “If it becomes a project for your own team, then your requirements document must evolve to an even more detailed specification for evaluating various vision components such as cameras, lenses, lighting, motion control, and software development environments.”

Shikany recommends working with trusted system integrators, who can develop end-to-end, turnkey machine vision solutions and can help with just about any application.

In addition to manufacturing, machine vision is increasingly used in applications outside the factory, Shikany says.

“We’re seeing it used to monitor human labor to prevent injuries, such as in logistics,” he says. “We’re also seeing increased deployment of embedded or computer vision technologies—low power consumption, smaller form factor imagers—that can be implemented in a variety of OEM products. The role of software is also expanding to make sense of growing data sets.”

Dickerson says the growing use of artificial intelligence (AI), the proliferation of robot-based systems, and the incorporation of multispectral imaging are emerging trends.

“The use of AI software, which allows machine vision systems to learn for themselves how to identify certain types of defects, has enabled machine vision systems to successfully tackle some applications where subtle or unpredictable variations make it extremely difficult to write the explicit rules needed for traditional machine vision systems,” he says.
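The contrast Dickerson draws between explicit rules and learned behavior can be sketched in a few lines. The example below is a deliberately simple illustration, not the AI software he refers to: it compares a hand-written threshold rule with a nearest-centroid classifier that derives its decision from labeled examples. The two features (mean brightness and edge density, both scaled 0 to 1), the thresholds, and the sample values are all hypothetical.

```python
from math import dist

def rule_based_is_defect(features):
    """Explicit rule: thresholds picked by hand (hypothetical values)."""
    brightness, edge_density = features
    return brightness < 0.4 or edge_density > 0.6

def train_centroids(samples):
    """Learn one average feature vector (centroid) per label
    from a list of (features, label) pairs."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc)
            for label, acc in sums.items()}

def predict(centroids, features):
    """Assign the label whose centroid is closest to the features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Hypothetical labeled examples: (brightness, edge_density) -> label.
samples = [
    ((0.80, 0.20), "good"),
    ((0.70, 0.30), "good"),
    ((0.50, 0.50), "defect"),
    ((0.45, 0.55), "defect"),
]
centroids = train_centroids(samples)

subtle_part = (0.50, 0.45)   # a borderline part the fixed rule lets through
print(rule_based_is_defect(subtle_part))   # rule-based decision
print(predict(centroids, subtle_part))     # learned decision
```

On this made-up data the fixed thresholds pass the borderline part while the learned model groups it with the defect examples. Production systems typically use far richer models, but the division of labor is the same: the examples, rather than a programmer, define the boundary.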

Combining machine vision with robotics has freed robots from their fixed-position origins and given them a dramatically more flexible set of capabilities. These range from traditional tasks like bin-picking and pick-and-place to a wide range of new applications, such as autonomous robots that move materials around factory floors and agricultural robots that pick weeds or harvest fruit.

“And finally, where ultraviolet or near infrared imaging was once relegated to a few highly specialized tasks, we are now seeing the use of non-visible spectral bands, as well as specific wavebands in the visible spectrum, to provide added capabilities for a wide range of inspections from food sorting/grading, to bottling or package inspection, to electronics and wafer inspection,” among other applications, Dickerson says.

Vuine says that machine vision has become much more popular and streamlined in recent years.

“People are moving away from complicated optical setups and towards very basic cameras,” he says. “Human environments have been designed to make sense to humans, visually, so cameras that perceive the visible part of the light spectrum and don’t do much else are best to pick up what’s going on in such environments. So, algorithms become more important than optical hardware, and cameras become more interchangeable.”