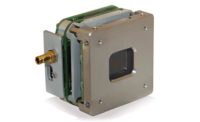

Teledyne DALSA’s “BOA” smart camera is a 44 mm cube, uses 2 to 3 watts and runs standard machine vision applications. Source: Teledyne DALSA

The monochrome (grayscale) camera line of force points toward faster cameras and interfaces and, if sensor pixel defects can be eliminated, toward larger sensors. Some machine vision tasks, such as location or defect detection, could benefit from color or 3-D information, but monochrome cameras are used instead because of their lower cost and higher resolution. The use of color and 3-D will continue to grow, and new technology and markets will accelerate this growth.

Currently, most color machine vision cameras use colored filters over the camera pixels. This is inexpensive, but because each pixel measures only one color and the other two must be estimated from neighboring pixels, it leads to errors when making color measurements. I think a new color camera technology will give us much better accuracy at a competitive price, perhaps by using programmable filters to take red, green and blue images sequentially, or perhaps through a substantial cost reduction in multi-layer sensors. The result would be a much larger market share for color machine vision systems.
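Where does the color error from filter-based cameras come from? The sketch below is a deliberately simplified, one-dimensional model (an assumption for illustration; real demosaicing works in 2-D and is more sophisticated): green is measured only at every other pixel along a row, and the missing values are estimated by averaging the measured neighbors. Across a sharp edge the estimate is badly wrong, which is the kind of error a sequential red/green/blue capture would avoid.

```python
from typing import List


def green_from_filter_row(true_green: List[float]) -> List[float]:
    """Simulate one R-G-R-G... row of a color filter array.

    Green is measured directly only at odd indices; at even indices it is
    estimated by averaging the adjacent (odd-index) green samples.
    """
    n = len(true_green)
    estimate = [0.0] * n
    for i in range(n):
        if i % 2 == 1:
            estimate[i] = float(true_green[i])  # measured pixel
        else:
            neighbors = [true_green[j] for j in (i - 1, i + 1) if 0 <= j < n]
            estimate[i] = sum(neighbors) / len(neighbors)  # interpolated pixel
    return estimate


if __name__ == "__main__":
    truth = [10, 10, 10, 10, 200, 200, 200, 200]  # a sharp dark-to-bright edge
    est = green_from_filter_row(truth)
    print(est)  # index 4 is estimated as 105.0 although its true value is 200
```

A full-resolution capture of each color plane would return the true value at every pixel, at the cost of taking three exposures.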

Most 3-D vision systems now use triangulation or perspective (the change in object size with distance) to measure depth. I predict a disruptive technology that makes 3-D precise and inexpensive enough to be used on many more tasks. One possibility is a “plenoptic camera” [1] that determines the direction of light rays from an object and, hence, its position in space.
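The two depth cues named above reduce to simple pinhole-camera formulas. The sketch assumes an ideal, rectified two-camera rig with focal length f in pixels, baseline B in meters and disparity d in pixels (stereo triangulation, Z = f·B/d), and an object of known size S spanning s pixels (perspective, Z = f·S/s). The last function shows why precision is the hard part: a one-pixel disparity error changes depth by roughly Z²/(f·B).

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Stereo triangulation for a rectified two-camera rig: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_from_size(focal_px: float, object_size_m: float, image_size_px: float) -> float:
    """Perspective: an object of known size S that spans s pixels lies at Z = f * S / s."""
    if image_size_px <= 0:
        raise ValueError("image size must be positive")
    return focal_px * object_size_m / image_size_px


def depth_step_per_pixel(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Approximate depth change caused by a one-pixel disparity error: dZ ~ Z**2 / (f * B)."""
    return depth_m ** 2 / (focal_px * baseline_m)


if __name__ == "__main__":
    f, b = 800.0, 0.1  # hypothetical camera: 800-pixel focal length, 10 cm baseline
    z = depth_from_disparity(f, b, 20.0)
    print(z, depth_step_per_pixel(f, b, z))  # about 4.0 m, with about 0.2 m per pixel of error
```

Because the error grows with the square of distance, doubling the range quadruples the depth uncertainty for the same camera pair.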

I expect major growth in 3-D and color vision systems for new machine vision tasks, such as collision warning in automobiles, interactive gaming [2] and security. These vision systems must operate in uncontrolled environments and have low cost and guaranteed speed: it isn’t helpful to be alerted after you drive over someone. Later, I’ll speculate about these new, often consumer-oriented, machine vision applications.

Getting Smart

“Smart” cameras move vision processing into the camera and return results rather than, or in addition to, images. Smart cameras must use low-power processors, and the development of these processors is driven by huge consumer demand for mobile computing devices, such as tablet computers.

The smart camera line of force is very strong, and it is easy to predict that smart cameras will get smarter and take a larger share of the machine vision market. I predict that smart cameras will soon account for more than half of the machine vision systems sold.
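To make “return results rather than images” concrete, here is a sketch of a hypothetical result record (the field names and the 13-byte wire format are assumptions, not any vendor’s protocol) compared with the size of one uncompressed 640 x 480, 8-bit monochrome image.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format: little-endian, no padding.
# '?' = 1-byte bool, 'f' = 4-byte float  ->  1 + 3 * 4 = 13 bytes.
RESULT_FORMAT = "<?fff"


@dataclass
class InspectionResult:
    """Hypothetical result a smart camera might report for one part."""
    part_ok: bool
    x_mm: float
    y_mm: float
    angle_deg: float

    def pack(self) -> bytes:
        return struct.pack(RESULT_FORMAT, self.part_ok, self.x_mm, self.y_mm, self.angle_deg)

    @classmethod
    def unpack(cls, data: bytes) -> "InspectionResult":
        return cls(*struct.unpack(RESULT_FORMAT, data))


def mono_image_bytes(width: int, height: int, bits_per_pixel: int = 8) -> int:
    """Size in bytes of an uncompressed monochrome image."""
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    result = InspectionResult(True, 12.5, 3.25, 1.0)
    payload = result.pack()
    print(len(payload), mono_image_bytes(640, 480))  # 13 307200
```

Sending a few bytes per part instead of hundreds of kilobytes is one reason a smart camera can get by with a modest interface and a low-power processor.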

Smart cameras will be the tool of choice for routine machine vision tasks, such as finding and measuring parts, but many vision applications, such as some types of defect detection, require more computational power. These applications are, and will continue to be, solved by “boxed” vision systems, typically with multi-core x86-class processors. Custom hardware will continue to be used on demanding applications such as web inspection or high-speed tracking.

Software Futures

Windows is currently the operating system of choice for machine vision systems, but the Linux line of force is growing stronger. Linux variants run on most mobile computers, so, again, consumer demand is driving the adoption of Linux.

The user interface is a critical part of a machine vision system: to the user, the interface is the machine. We are committed to making smart, easy-to-use user interfaces because that sells machine vision. I see this line of force continuing to be strong, with old and new ideas about interface design complementing each other. 3-D “human-machine interfaces” will allow for things such as “picking up” a vision (optical) caliper and placing it on a part by moving your hands. A 3-D vision system to program the vision system! Because it needs no touch screen or keyboard, this would be useful in wash-down environments, such as a food processing plant.

Algorithms

Algorithms are mainly software, but they also include the vision system’s lighting, camera optics and the computational abilities of its hardware. For example, 3-D algorithms often use a pattern of lighting (“structured light”), and some use special hardware to compute depth. I predict improvements in algorithms in two general areas.

First, we need “smarter” algorithms, that is, algorithms that understand more of the visual world and use that knowledge to deal with larger variations in product appearance. Right now, a major part of building any machine vision system is getting the lighting and angle of view “just right,” so a more human-like algorithm that could “compensate” for lighting or part variations would be extremely useful.
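Today’s standard, partial answer to lighting variation is normalized cross-correlation (NCC), shown below as a minimal sketch on flattened pixel lists. Subtracting the means cancels a uniform brightness offset and dividing by the norms cancels a uniform contrast (gain) change, so a part that is simply brighter still matches perfectly. NCC does not handle shadows, glare or changes in viewing angle, which is exactly the gap a “smarter” algorithm would need to close.

```python
import math
from typing import Sequence


def ncc(patch: Sequence[float], template: Sequence[float]) -> float:
    """Normalized cross-correlation of two equal-length pixel sequences.

    Returns a score in [-1, 1]. If patch = a * template + b with a > 0,
    the score is 1.0 regardless of the gain a and offset b.
    """
    if len(patch) != len(template) or len(patch) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    mp = sum(patch) / len(patch)
    mt = sum(template) / len(template)
    dp = [p - mp for p in patch]
    dt = [t - mt for t in template]
    denom = math.sqrt(sum(x * x for x in dp) * sum(y * y for y in dt))
    if denom == 0:
        return 0.0  # a flat patch or template carries no pattern to match
    return sum(x * y for x, y in zip(dp, dt)) / denom


def ssd(patch: Sequence[float], template: Sequence[float]) -> float:
    """Sum of squared differences: sensitive to any brightness change."""
    return sum((p - t) ** 2 for p, t in zip(patch, template))


if __name__ == "__main__":
    template = [10, 20, 30, 20, 10]
    brighter = [2 * v + 15 for v in template]  # same part, more light
    print(round(ncc(brighter, template), 6), ssd(brighter, template))  # 1.0 5725
```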

Second, we need “kinder” algorithms, that is, algorithms that are easier for a human to use. While most vision system operations are currently very easy to use, more sophisticated operations often require substantial knowledge and parameter “tweaking.” Wouldn’t it be nice to show the vision system good and bad parts and say, “Here, you figure it out!”?
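The simplest possible version of “here, you figure it out” is learning a pass/fail threshold from labeled examples instead of asking the user to type one in. The sketch assumes a single hypothetical feature per part (for example, a defect-contrast score) where bad parts score higher than good ones; a real system would learn from many features at once.

```python
from typing import Sequence


def learn_threshold(good: Sequence[float], bad: Sequence[float]) -> float:
    """Learn a cut on one feature from labeled examples.

    Assumes bad parts tend to have HIGHER feature values than good parts.
    Tries midpoints between adjacent observed values and returns the one
    that misclassifies the fewest training examples.
    """
    if not good or not bad:
        raise ValueError("need at least one good and one bad example")
    values = sorted(set(good) | set(bad))
    # One cut below every sample, plus a midpoint between each adjacent pair.
    cuts = [values[0] - 1.0] + [(lo + hi) / 2 for lo, hi in zip(values, values[1:])]

    def training_errors(t: float) -> int:
        return sum(g > t for g in good) + sum(b <= t for b in bad)

    return min(cuts, key=training_errors)


def classify(feature: float, threshold: float) -> str:
    return "bad" if feature > threshold else "good"


if __name__ == "__main__":
    t = learn_threshold(good=[0.10, 0.20, 0.25], bad=[0.60, 0.80])
    print(round(t, 3), classify(0.5, t), classify(0.3, t))  # 0.425 bad good
```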

As an example, consider a low-contrast scratch on a noisy background, say a scratch on etched metal. We can easily see this scratch because we know what a scratch looks like: a chain of loosely connected, slightly brighter spots. From the physical process of scratching, we can also usually assume that the scratch curves smoothly rather than changing direction at sharp angles. Humans do this without apparent effort, but it is difficult to tell a vision system how to do it.
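The two pieces of scratch knowledge described above (slightly brighter spots, and smooth curvature) can be written down as a crude rule-based sketch. This is not a proven scratch detector: the brightness test (mean plus k standard deviations), the greedy one-directional linking, and the parameters `max_gap` and `max_turn_deg` are all illustrative assumptions. It mainly shows how much hand-tuning even a simple version needs.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def bright_spots(samples: List[Tuple[float, float, float]], k: float) -> List[Point]:
    """Keep (x, y) positions whose value exceeds the mean by more than k standard deviations."""
    values = [v for _, _, v in samples]
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(x, y) for x, y, v in samples if v > mean + k * std]


def _turn(d1: Point, d2: Point) -> float:
    """Absolute angle in radians between two direction vectors."""
    diff = abs(math.atan2(d1[1], d1[0]) - math.atan2(d2[1], d2[0])) % (2 * math.pi)
    return min(diff, 2 * math.pi - diff)


def link_scratch(points: List[Point], seed: int, max_gap: float, max_turn_deg: float) -> List[Point]:
    """Greedily grow a chain from points[seed] in one direction.

    Each step moves to the nearest unused point within max_gap whose
    direction differs from the previous step by at most max_turn_deg,
    encoding "spots are somewhat connected" and "scratches curve smoothly".
    """
    max_turn = math.radians(max_turn_deg)
    chain = [points[seed]]
    unused = set(range(len(points))) - {seed}
    direction = None
    while True:
        cur = chain[-1]
        best, best_dist = None, max_gap
        for i in unused:
            step = (points[i][0] - cur[0], points[i][1] - cur[1])
            dist = math.hypot(*step)
            if dist == 0 or dist > best_dist:
                continue
            if direction is not None and _turn(direction, step) > max_turn:
                continue  # too sharp a bend to be the same scratch
            best, best_dist = i, dist
        if best is None:
            return chain
        nxt = points[best]
        direction = (nxt[0] - cur[0], nxt[1] - cur[1])
        chain.append(nxt)
        unused.discard(best)


if __name__ == "__main__":
    pts = [(0, 0), (1, 0), (2, 0.2), (3, 0.5), (4, 1.0), (1, 1), (2, 3)]
    print(link_scratch(pts, seed=0, max_gap=1.5, max_turn_deg=30))
    # the gently curving chain is kept; (1, 1) and (2, 3) are rejected
```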

I predict that new ideas from universities and industry will make smarter and kinder algorithms for specific vision tasks, such as learning about and detecting scratches on parts. I don’t think we can build a general-purpose vision system able to replace human vision in any application, as we don’t know how, and it probably wouldn’t be cost-effective. Someday we might be able to grow a vision “brain” [4], but then it might take years to train it.

Machine Vision Out of Control

Traditional machine vision markets (metrology, defect detection, tracking, bar-code reading and OCR/OCV) are still growing, but at a pace reflecting the maturity of the technology and its users. Current machine vision algorithms depend on controlled part presentation, lighting and optics for robust and fast processing. I’ve been looking at new machine vision applications that work in mostly uncontrolled environments.

What could make these applications possible is that each is limited to a specific vision task. For collision detection, the vision system only has to report that something is in the vehicle’s path, not what the “something” is. The challenge is dealing reliably with uncontrolled lighting (e.g., oncoming headlights) and with multiple, moving “somethings.”
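Here is a sketch of how narrow that task can be, assuming a hypothetical 3-D sensor that delivers a depth map in meters (0 or less meaning “no reading”) and a fixed band of image columns that covers the vehicle’s path. The function counts close readings in that corridor and never tries to classify the object. A real system would also have to track objects over time and survive the lighting problems mentioned above.

```python
from typing import Sequence


def obstacle_in_path(
    depth_m: Sequence[Sequence[float]],
    corridor_cols: range,
    warn_distance_m: float,
    min_pixels: int = 3,
) -> bool:
    """Return True if enough valid depth readings inside the driving corridor
    are closer than warn_distance_m.

    Readings <= 0 are treated as invalid (no return from the sensor).
    min_pixels ignores a few isolated noisy readings.
    The function never asks WHAT the object is, only whether it is there.
    """
    close = 0
    for row in depth_m:
        for col in corridor_cols:
            if 0 < row[col] < warn_distance_m:
                close += 1
    return close >= min_pixels


if __name__ == "__main__":
    depth = [
        [20, 20, 5, 5, 20],
        [20, 20, 5, 5, 20],
        [20, 20, 20, 20, 20],
    ]
    print(obstacle_in_path(depth, range(1, 4), warn_distance_m=10.0))  # True
```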

Here are some serious and fanciful examples of applications, mostly for consumers, that are likely in the next few years:

Vision for automotive safety is already used in high-end Volvos and Mercedes, and it will migrate “down market.” Back-up cameras will soon be mandated, which will help reduce the cost of adding collision detection. Despite successful autonomous vehicle demonstrations, I think fully autonomous vehicles are well beyond my five-year window.

The robot stocker. Let this robot loose in your warehouse and it learns the locations of product bins (by barcodes or RFID) and can then restock the bins from delivered stores.

The room painting app. Download this app to your smart phone and then take a few pictures of a room you want to repaint. Pick a color and the app “paints” only the walls, not the furniture or the cat, because it “understands” what a wall is. See how the room would look with different lighting and paint combinations. This could save marriages.

Some sorting of recycled material is done by machine vision, and I expect this to increase to include sorting of “mixed waste.”

There is lots of research on agricultural vision for pickers, graders and sorters to reduce cost and increase quality. So how about a lawn-grooming robot that picks up sticks and squirts herbicide on dandelions and other undesirables in your lawn? I’m not sure how it would deal with contributions from neighborhood dogs.
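For the room painting app, the hard part is the “understanding”: deciding which pixels are wall. Once a wall mask exists, the repainting itself can be simple. The sketch below assumes a mask from some hypothetical segmentation step and scales the chosen paint color by each wall pixel’s brightness relative to the average wall brightness (Rec. 601 luma weights), so existing shading and shadows carry over to the new color.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def luminance(p: RGB) -> float:
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b  # Rec. 601 luma weights


def repaint(image: List[List[RGB]], wall_mask: List[List[bool]], paint: RGB) -> List[List[RGB]]:
    """Recolor masked (wall) pixels with `paint`, keeping their relative shading.

    Pixels outside the mask (furniture, the cat) are copied unchanged.
    """
    wall_lums = [
        luminance(px)
        for row, mrow in zip(image, wall_mask)
        for px, is_wall in zip(row, mrow)
        if is_wall
    ]
    mean_lum = sum(wall_lums) / len(wall_lums) if wall_lums else 0.0
    out: List[List[RGB]] = []
    for row, mrow in zip(image, wall_mask):
        new_row: List[RGB] = []
        for px, is_wall in zip(row, mrow):
            if is_wall and mean_lum > 0:
                shade = luminance(px) / mean_lum  # darker than average -> darker paint
                new_row.append(tuple(min(255, round(c * shade)) for c in paint))
            else:
                new_row.append(px)
        out.append(new_row)
    return out


if __name__ == "__main__":
    img = [[(100, 100, 100), (200, 200, 200)], [(50, 60, 70), (10, 10, 10)]]
    mask = [[True, True], [False, False]]
    print(repaint(img, mask, (0, 128, 255)))
```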

Now if you can tell me which will be commercially successful, I’ll buy lunch when we meet in five years.

V&S

References

[1] http://en.wikipedia.org/wiki/Plenoptic_camera

[2] http://en.wikipedia.org/wiki/Kinect

[3] http://en.wikipedia.org/wiki/Fitts’s_law

[4] http://en.wikipedia.org/wiki/Synthetic_biology

Tech Tips

Major growth in 3-D and color vision systems for new machine vision tasks.

Smart cameras will be the tool of choice for routine machine vision tasks.

3-D “human-machine interfaces.”

Smarter and kinder algorithms for specific vision tasks, such as learning about and detecting scratches on parts.