Mounting pressures such as supply chain disruptions, accelerating product cycles, an aging workforce, and growing consumer demand for complex electronic products mean that manufacturers must do more with less, and do it faster. This puts manufacturing quality teams in a tough position: they must improve quality while maintaining or increasing production volumes.

The truth is, quality teams are working with tools that were not designed to handle the speed and complexity of today’s manufacturing environment. In order to affect critical quality metrics like scrap, rework, or First Time Yield, they need a new method to manage quality. Not only that, but this new method must make enough of an impact to offset the cost of implementing it.

What’s the problem with quality management today?

The methods used to manage quality today were largely introduced in the 20th century. Incremental improvements have been made in areas such as the improved application of statistical process control (SPC), vision inspection, and automation, but the foundation of quality management is still the same.

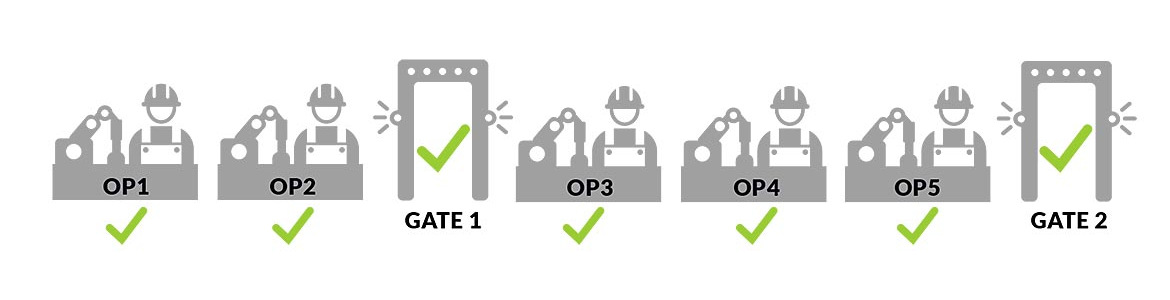

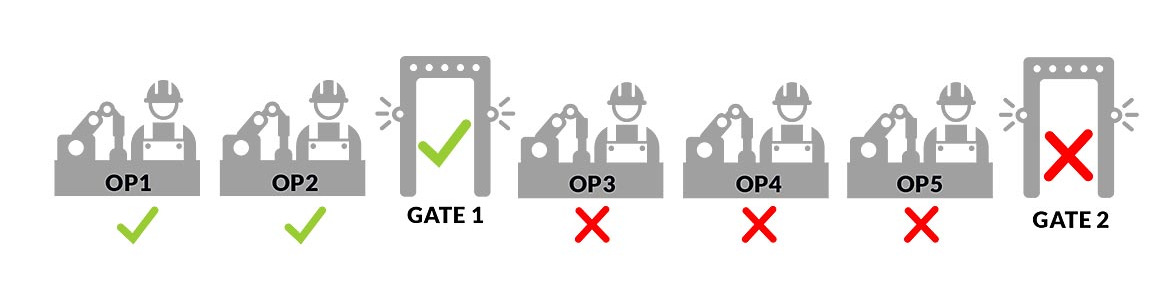

What is that foundation? Quality is managed primarily by detecting defects. As products move along the manufacturing line towards their final form, they are measured for quality and performance at quality gates. Quality gates are inspections, measurements, or tests. End-of-line testing is the final quality gate at the end of a process, ensuring that no bad products get shipped. Other quality gates may occur between operations in a process, such as visual inspection. Quality gates can be costly for manufacturers to implement, and can slow down the process. By the time a product hits a quality gate it may be too late to salvage, and it ends up in the scrap pile.

Between quality gates are the operations of the manufacturing line, which are usually monitored with SPC. Because SPC analysis is siloed at each operation and disconnected from the data gathered at quality gates, it provides only a limited view of what could be causing defects. SPC is sound in principle, but it is not always applied as intended. When control limits are exceeded, quality engineers often take a calculated risk and continue production to meet throughput targets, since it isn’t always clear when or how exceeding limits will result in a major quality problem.
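For readers less familiar with SPC, the idea of a control limit can be sketched in a few lines. This is a minimal illustration of an individuals control chart, assuming limits are set at three estimated standard deviations from the baseline mean, with the standard deviation estimated from the average moving range. The torque readings and function names are hypothetical and are not taken from any particular SPC package.

```python
from statistics import mean

def control_limits(baseline):
    """Return (lcl, center, ucl) for an individuals chart built from baseline data."""
    center = mean(baseline)
    # Moving range: absolute difference between consecutive readings.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    # 1.128 is the standard d2 constant for ranges of two consecutive points.
    sigma_hat = mean(moving_ranges) / 1.128
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat

def out_of_control(values, lcl, ucl):
    """Return the indices of readings that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]

# Hypothetical torque readings from a single operation (illustrative only).
baseline = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9]
lcl, center, ucl = control_limits(baseline)

new_readings = [10.0, 10.3, 11.2, 9.9]
flagged = out_of_control(new_readings, lcl, ucl)
print(f"limits: {lcl:.3f} .. {ucl:.3f}, flagged indices: {flagged}")
```

Note that the check looks at one signal from one operation in isolation, which is exactly the siloed view described above: nothing in it connects an excursion to what later happens at a quality gate.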

When a large enough quality problem is detected, root cause analysis (RCA) takes place to uncover the cause of the issue. In theory, this analysis informs process improvements. However, these investigations can be so time-consuming and costly that they are often avoided for all but the most serious quality issues. Because RCA takes so long and can even be inconclusive, its findings often fail to improve the process or prevent future defects.

The shift left in quality management

To sum it up, there is a lot of waste happening in the way quality is managed:

- Defects are often discovered long after they occur, wasting power and materials

- Defects detected too late result in unnecessary scrap and rework

- Data is being collected and stored from testing and process but it isn’t fully used

- Resources are put toward root cause analysis but it doesn’t always improve the process

A new method to manage quality must not only improve quality, but also address these areas of waste. It stands to reason that detecting defects even earlier in the process would solve some of these problems. Given that increasing the number of quality gates is untenable, what else can we do?

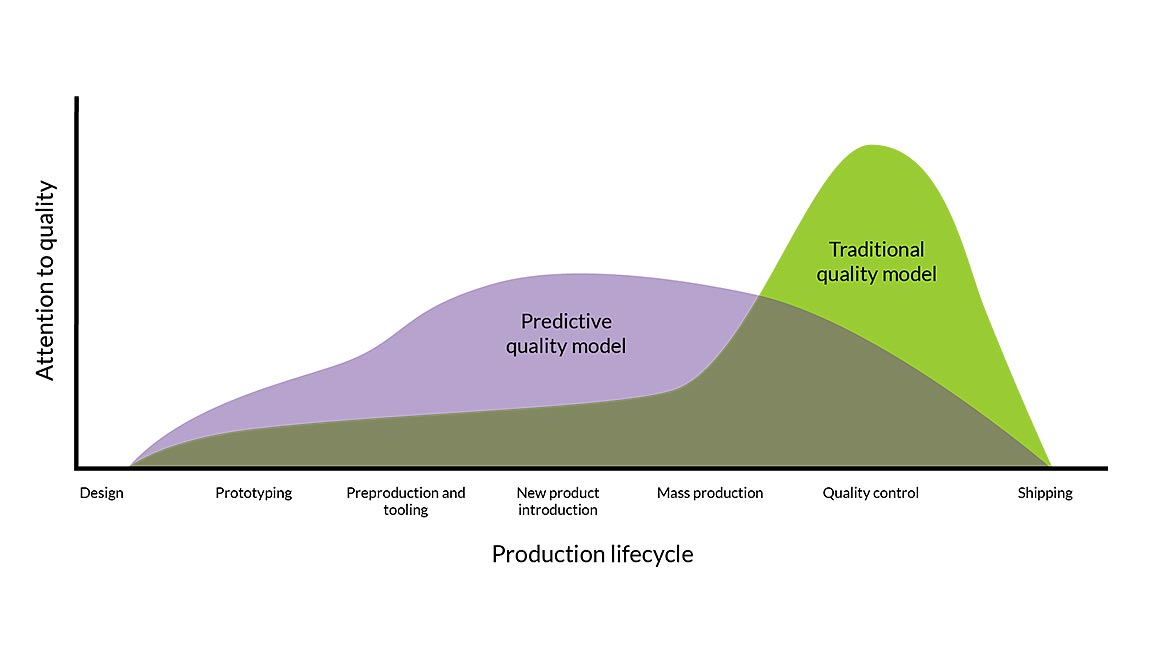

For a new model of quality management, we can look to software development for inspiration. “Shifting left” in software refers to the practice of moving resources spent on testing and quality control from the end of the process towards the middle or beginning of development. Instead of firm quality gates at the end of a development stage, quality is monitored in an ongoing manner as part of regular operations.

We can use this mental model to understand the differences between the traditional approach to quality in manufacturing and the new paradigm, called predictive quality. With predictive quality, quality management happens in real time during manufacturing operations, not after them.

By using manufacturing process and test data, a predictive quality tool can learn the complex behavior of signals on the manufacturing line and their relationships to quality gate (test/inspection) data. It can then form predictions based on what is happening on the line in real time. Put simply, manufacturers will be able to see defects coming and intervene before they happen. If a defect still manages to occur, the solution can also use the process data to run an automated root cause analysis report, reducing the time spent solving an issue by hours or days.

What makes Predictive Quality possible?

Predictive quality might seem too good to be true. It would be, were we not in the midst of Industry 4.0 and the digital transformation of the manufacturing industry. There are three critical Industry 4.0 technologies which, when combined, can bring the dream of predictive quality to life: data collection, artificial intelligence, and cloud computing.

Data collection is now integrated into every new operation on the shop floor. A large volume of data is available, and it is getting more granular as time goes on. The big data of manufacturing presents both an opportunity and an obstacle. We have the opportunity to mine these large volumes of data for insights, but current methods of analysis cannot handle such large volumes of data at the speed required to make an impact on production.

Fortunately, artificial intelligence (AI) has matured in recent years and can process large volumes of data quickly enough to keep pace with production. AI is the backbone of predictive quality and is what makes it operational.

The third technological factor supporting predictive quality is the adoption of cloud computing. Cloud computing provides a cost-effective, scalable and flexible infrastructure on which to process and analyze the vast amounts of data with AI.

To offer practical value, a predictive quality solution needs to be actively integrated into the shop floor ecosystem. It must react quickly to match the time-sensitive nature of manufacturing production. The platform also needs to be user-friendly and able to translate the output of complex AI algorithms into language that engineers and managers can understand.

A predictive quality solution empowers engineers with advanced processing power and accurate insights. It collects, compiles, sorts and analyzes data, runs reports, and performs root-cause analysis investigations, saving countless hours previously spent on routine tasks. Not only does predictive quality reduce scrap and rework, but it makes the job of production and quality staff easier.

How defects are predicted

So how does a predictive quality solution predict defects? It uses machine learning models and anomaly detection. Manufacturing data (commonly signal or measurement data) is ingested into the solution to train machine learning models. This means that the “normal” conditions of the data are known by the solution. The solution then monitors these signals and alerts engineers when it detects an anomaly, or abnormal behavior of the signal.
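The learn-normal-then-alert loop described above can be illustrated with a very small sketch. It assumes a simple robust statistic (median and median absolute deviation) as a stand-in for the machine learning models a real solution would use. The `SignalMonitor` class, the threshold of 3.5, and the sample readings are all hypothetical.

```python
from statistics import median

class SignalMonitor:
    """Learns the 'normal' range of one signal and flags abnormal readings."""

    def __init__(self, threshold=3.5):
        self.threshold = threshold  # how many robust deviations count as abnormal
        self.center = None
        self.scale = None

    def fit(self, normal_values):
        """Learn normal behavior from historical readings of known-good production."""
        values = list(normal_values)
        self.center = median(values)
        mad = median([abs(v - self.center) for v in values])
        if mad == 0:
            raise ValueError("training data has no spread; cannot define 'normal'")
        # 1.4826 rescales the MAD to match a standard deviation for normal data.
        self.scale = 1.4826 * mad
        return self

    def score(self, value):
        """Distance of a reading from normal, in robust standard deviations."""
        return abs(value - self.center) / self.scale

    def is_anomaly(self, value):
        return self.score(value) > self.threshold

# Hypothetical historical readings for one signal (illustrative only).
history = [50.1, 49.8, 50.0, 50.3, 49.9, 50.2, 50.0, 49.7, 50.1, 49.9]
monitor = SignalMonitor().fit(history)

live = [50.0, 50.2, 51.5, 49.9]
alerts = [i for i, v in enumerate(live) if monitor.is_anomaly(v)]
print("alert on live readings at indices:", alerts)
```

A production system would learn far richer patterns, such as signal shape over a cycle, but the flow is the same: fit on known-good data, score each new reading, and alert when the score crosses a threshold.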

Signals can be monitored in isolation, or a group of signals with a suspected relationship can be monitored together. All manufacturing data is available from the same platform, including SPC charts. Although anomaly detection offers more flexibility and accuracy than SPC in many cases, SPC and anomaly detection both have their place in the new quality paradigm. Since anomaly detection becomes more accurate over time, engineers may find they rely on SPC less and less.
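To see why monitoring related signals together can catch what single-signal limits miss, consider the sketch below. It assumes two signals with a roughly linear relationship, fits a least-squares line on normal data, and flags readings whose residual from that line is unusually large. The signal names (pressure and temperature), the data, and the three-standard-deviation cutoff are hypothetical choices for illustration.

```python
from statistics import mean, stdev

def fit_pair_model(x, y):
    """Fit y = intercept + slope * x on normal data and record the residual spread."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    return slope, intercept, stdev(residuals)

def is_joint_anomaly(model, xi, yi, k=3.0):
    """Flag a reading pair whose relationship departs from the learned one."""
    slope, intercept, resid_sd = model
    return abs(yi - (intercept + slope * xi)) > k * resid_sd

# Hypothetical normal production data: temperature rises with pressure.
pressure = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
temperature = [20.1, 21.9, 24.2, 25.8, 28.1, 29.9]
model = fit_pair_model(pressure, temperature)

# Both values below sit inside the ranges seen in normal data,
# yet together they break the usual relationship.
print(is_joint_anomaly(model, 2.0, 28.0))
# A pair that follows the learned relationship is not flagged.
print(is_joint_anomaly(model, 4.0, 26.0))
```

The first pair would pass a check on either signal alone, since 2.0 and 28.0 each fall within their historical ranges. Only the joint view shows that this combination is abnormal.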

Automating root cause analysis

If a defect is not predicted and is instead identified at a quality gate (such as an end-of-line test), the predictive quality solution links the defect with any anomalies in the signal data from that product’s production cycle. It generates a list of possible causes of the defect for engineering teams to review. Once engineers confirm the causal relationship, the root cause is established.
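One simple way to picture how such a list of possible causes might be produced is sketched below. It assumes each unit has a record of which signals were anomalous during its production cycle, and ranks signals by how much more often they were anomalous on failed units than on passing ones. The serial numbers, signal names, and scoring rule are hypothetical. The score indicates association only, which is why engineers still confirm the cause.

```python
def rank_candidate_causes(anomalies, outcomes):
    """Rank signals by (anomaly rate on failed units) - (anomaly rate on passed units).

    anomalies: dict of unit id -> set of signal names that were anomalous
    outcomes:  dict of unit id -> True if the unit passed the quality gate
    """
    failed = [u for u, passed in outcomes.items() if not passed]
    passed_units = [u for u, passed in outcomes.items() if passed]
    signals = set().union(*anomalies.values())

    def rate(units, signal):
        if not units:
            return 0.0
        return sum(signal in anomalies.get(u, set()) for u in units) / len(units)

    scores = [(s, rate(failed, s) - rate(passed_units, s)) for s in signals]
    # Highest score first; ties are broken by signal name for a stable order.
    return sorted(scores, key=lambda item: (-item[1], item[0]))

# Hypothetical anomaly records and end-of-line test results (illustrative only).
anomalies = {
    "SN001": {"press_force"},
    "SN002": set(),
    "SN003": {"press_force", "oven_temp"},
    "SN004": {"oven_temp"},
    "SN005": set(),
    "SN006": {"press_force"},
}
outcomes = {
    "SN001": False, "SN002": True, "SN003": False,
    "SN004": True, "SN005": True, "SN006": True,
}

for signal, score in rank_candidate_causes(anomalies, outcomes):
    print(f"{signal}: {score:+.2f}")
```

In this toy data, `press_force` is anomalous on every failed unit but on only one of the four passing units, so it ranks first as the candidate for engineers to investigate.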

Next, the causal signals found can be isolated and monitored through the anomaly detection process described above. Engineers are alerted when the conditions that caused the previous defect occur again, so they can intervene immediately to prevent another quality gate failure.

Automating root cause analysis in itself is revolutionary for manufacturers. No longer do quality teams need to struggle with the decision to “sign off” on out-of-control processes, since performing the investigations and actually solving the problems pose less risk to production.

The challenges of Predictive Quality

No solution comes without its challenges. For predictive quality, the biggest challenge is its reliance on data. Limited data availability or poor data quality means limited predictive capabilities. Put another way, “garbage in, garbage out.” It is imperative for manufacturers to be strategic about the data they collect when implementing a predictive quality solution in order to get the most out of it. Luckily, a number of other Industry 4.0 products and applications are available to help with this part.

Adoption is a challenge not unique to predictive quality. Most new technologies take time to be fully adopted by teams who are used to doing things a certain way. Predictive quality offers the most value when it is used as a regular part of a quality team’s daily activities. This means that workers may need to change routines and habits that could be deeply ingrained.

Predictive Quality is the way forward

Like it or not, predictive quality is coming. Commercial predictive quality solutions are already available for a few specific industries, such as automotive. Manufacturers who get on board will find themselves in a competitive position, able to lower their costs by reducing scrap and rework and by increasing efficiency. They will reduce the risk of quality spills, which can cause untold reputational damage and financial penalties. And they will gain a deeper understanding of their manufacturing processes, which can drive process improvement or innovation.