Machine vision technologies for Vision Guided Robotics (VGR) have greatly enhanced the flexibility and capability of robots in many industrial applications. They have expanded the value of robots in markets ranging from automotive to food to pharmaceutical to warehousing, distribution, and order fulfillment. In previous articles, we’ve discussed VGR concepts, implementation techniques, and key VGR algorithms. Now we’re going to take a closer look at some of today’s cutting-edge components for VGR, and at some of the applications those technologies have enabled.

Developments in machine vision technologies have continued at a rapid pace over the past several years, with exciting advances in both imaging components and processing software. It’s important to note that many of the new vision technologies are not exclusive to, or even targeted at, robotic guidance. However, the expanded capabilities have in many ways resulted in new or improved VGR applications. Machine vision provides a natural extension to a robot’s function, and as vision technology grows, so do the industrial automation applications that vision guided robotic arms can serve.

How does machine vision fit in? On the robot side, VGR is a relatively simple concept. The arm only needs to receive a location from a vision system and then move to that location to execute a task: pick up a part, place a part, paint, weld, assemble, and so on. Unquestionably, those actions can be complex depending on the task. But ultimately, the ability of the vision system to find the object or feature and then provide a suitable, accurate location to the robot is what drives the success of the application. Let’s look, then, at some of the latest vision and robotic technologies and related VGR applications.

Improving on 2D processing

Guiding a robot in “2D” means that the activity happens within a single plane. That is, the object or feature location provided to the robot is relative to a predefined real or virtual “flat surface.” Only the position relative to that surface can be reported: in robot terms, the object’s “X” and “Y” position and its rotation on the surface, with no tilt or height information. Guidance using 2D grayscale imaging is estimated to be the most widely implemented VGR task and is a valuable part of many flexible automation applications. Continual improvements in 2D machine vision technology have further enhanced its capabilities.
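To make the “X, Y, and rotation” idea concrete, here is a minimal sketch of how a 2D result might be converted from image pixels to robot coordinates on the work plane. It assumes a hypothetical calibration in which the camera looks straight down at the plane, lens distortion is already corrected, and the image and robot axes have the same handedness, so a single similarity transform (uniform scale, rotation, translation) is enough. The class name and parameter values are illustrative only, not any vendor’s calibration API.

```python
import math
from dataclasses import dataclass


@dataclass
class PlanarCalibration:
    """Hypothetical single-plane camera-to-robot calibration (illustrative only).

    Assumes a camera perpendicular to the work plane, distortion already
    corrected, and no mirroring between image and robot axes.
    """
    mm_per_pixel: float  # uniform scale on the work plane
    theta_deg: float     # rotation of the image axes relative to the robot axes
    tx_mm: float         # robot X of the image origin
    ty_mm: float         # robot Y of the image origin

    def to_robot(self, u: float, v: float, angle_deg: float) -> tuple:
        """Map pixel location (u, v) and in-image part angle to robot (X, Y, R)."""
        t = math.radians(self.theta_deg)
        xs, ys = u * self.mm_per_pixel, v * self.mm_per_pixel
        # Rotate the scaled image coordinates into the robot frame, then translate
        x = self.tx_mm + xs * math.cos(t) - ys * math.sin(t)
        y = self.ty_mm + xs * math.sin(t) + ys * math.cos(t)
        # In-plane part rotation adds the frame rotation; wrap to [-180, 180)
        r = (angle_deg + self.theta_deg + 180.0) % 360.0 - 180.0
        return x, y, r


cal = PlanarCalibration(mm_per_pixel=0.5, theta_deg=90.0, tx_mm=100.0, ty_mm=200.0)
print(cal.to_robot(10, 0, 0))  # approximately (100.0, 205.0, 90.0)
```

Note that no tilt or height term appears anywhere: that is exactly the limitation of 2D guidance described above.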

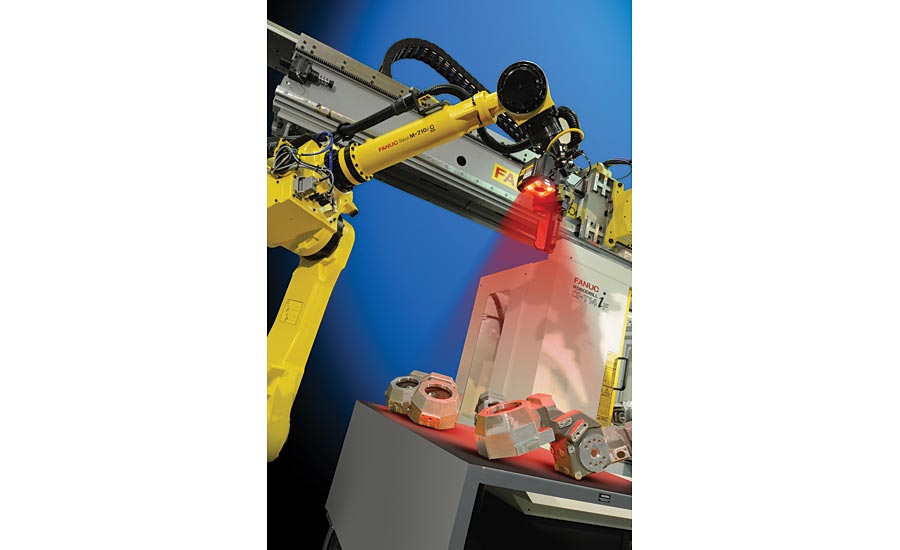

3D machine vision for VGR can help overcome the challenges of picking objects from random resting states. Source: FANUC.

More speed and resolution

Machine vision systems for 2D guidance and inspection have become both faster and more accurate. New imaging sensors pack more pixels into “standard” dimensions, resulting in higher spatial resolution without increasing, and sometimes while reducing, the physical size of the camera package. Imaging speeds (frames or “pictures” per second) continue to increase. Processing speeds have also improved as computer processors become faster and more powerful, and as systems take advantage of GPUs (Graphics Processing Units) to offload complex vision tasks. While high resolution itself is not always necessary for location and guidance accuracy (see sidebar), the increased speed and pixel count certainly help improve reliability and reduce risk in a variety of 2D VGR applications.

Applications that incorporate inspection tasks with vision guided robotics can enhance application flexibility and efficiency. Source: FANUC.

Image resolution comes into play primarily when very small features need to be located accurately in very large fields of view. This is a common situation in robotic machine vision, since robots often work with larger parts in larger areas. If too few pixels cover a target feature, locating that feature may be less reliable. From an integration point of view, increased resolution can help reduce camera count and cost, because one higher-resolution camera may cover an area that previously required several.
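The trade-off between field of view, sensor resolution, and feature size is simple arithmetic along one image axis. The sketch below assumes a hypothetical application requirement of a minimum number of pixels across the feature (the right number depends on the application and the vision tool) and ignores the overlap normally needed between adjacent cameras.

```python
import math


def pixels_on_feature(fov_mm: float, sensor_pixels: int, feature_mm: float) -> float:
    """Pixels spanning a feature along one axis of the field of view."""
    mm_per_pixel = fov_mm / sensor_pixels
    return feature_mm / mm_per_pixel


def cameras_needed(fov_mm: float, feature_mm: float, min_pixels: float,
                   sensor_pixels: int) -> int:
    """Cameras needed side by side along one axis so the feature still spans
    at least min_pixels (hypothetical requirement; camera overlap ignored)."""
    max_fov_per_camera = sensor_pixels * feature_mm / min_pixels
    return math.ceil(fov_mm / max_fov_per_camera)


# A 4 mm feature in a 1000 mm field of view:
print(pixels_on_feature(1000, 2000, 4))     # 8.0 pixels with a 2000-pixel sensor
print(cameras_needed(1000, 4, 10, 2000))    # 2 cameras to reach 10 pixels
print(cameras_needed(1000, 4, 10, 4000))    # 1 camera with a 4000-pixel sensor
```

The last two lines illustrate the point in the text: doubling the pixel count along the axis lets a single camera replace two.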

When smaller features must be located, higher image resolution becomes a factor in cutting-edge robotic guidance applications such as material removal/deburring, grinding/polishing, “image to path” processing, and robotic inspection tasks where small feature detail is critical to the process.

Part tracking for picking from moving conveyors is a cutting-edge application where faster imaging, faster image transfer, and faster processing enable more demanding installations. When objects travel on a conveyor in a constant stream, the machine vision system must continuously acquire and process images of the conveyor in order to locate every object. Higher acquisition and processing speeds give today’s machine vision systems the capability to handle faster conveyors and to serve multiple robots in a single application more easily.

Locating in the “real world” – 3D imaging

Guiding in 3D means that the location provided to the robot is a full position and orientation that is not tied to any specific plane surface. This type of guidance is more “real-world” than its 2D counterpart because most objects, in the absence of intentional presentation constraints, can come to rest in multiple “stable resting states.” The promise of 3D machine vision for VGR is to eliminate any need to “pre-arrange” objects, even loosely, before locating and picking them with a robot, letting the robot perform more “human-like” functions.
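A 3D location is usually expressed as six values: X, Y, Z plus three rotations. The sketch below turns such a pose into a 4×4 homogeneous transform and uses it to move a grip point, defined in the part’s own frame, into robot coordinates. It assumes a fixed-axis X-Y-Z rotation convention (R = Rz · Ry · Rx); robot vendors use a variety of conventions, so this is an illustration, not any particular controller’s definition.

```python
import math


def pose_to_matrix(x, y, z, rx_deg, ry_deg, rz_deg):
    """4x4 homogeneous transform for a pose, assuming fixed-axis X-Y-Z
    rotations, i.e. R = Rz(rz) @ Ry(ry) @ Rx(rx). Conventions vary by vendor."""
    a, b, c = (math.radians(v) for v in (rx_deg, ry_deg, rz_deg))
    ca, sa = math.cos(a), math.sin(a)
    cb, sb = math.cos(b), math.sin(b)
    cc, sc = math.cos(c), math.sin(c)
    return [
        [cc * cb, cc * sb * sa - sc * ca, cc * sb * ca + sc * sa, x],
        [sc * cb, sc * sb * sa + cc * ca, sc * sb * ca - cc * sa, y],
        [-sb,     cb * sa,                cb * ca,                z],
        [0.0,     0.0,                    0.0,                    1.0],
    ]


def transform_point(m, p):
    """Apply a 4x4 transform to a 3D point (x, y, z)."""
    px, py, pz = p
    return tuple(m[i][0] * px + m[i][1] * py + m[i][2] * pz + m[i][3]
                 for i in range(3))


# Part found at (10, 20, 30) mm, rotated 90 degrees about Z; a hypothetical
# grip point sits 1 mm along the part's own X axis.
part = pose_to_matrix(10, 20, 30, 0, 0, 90)
print(transform_point(part, (1, 0, 0)))  # approximately (10.0, 21.0, 30.0)
```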

Collaborative robots will be a future growth area for VGR-enabled applications. Source: FANUC.

Randomly-oriented object location

With advances in 3D imaging, cutting-edge applications that guide robots to randomly oriented objects are becoming more common. The standard term for this type of application is “random bin picking,” although picking randomly oriented objects outside a bin or tote is essentially the same application.

In recent years, the market has seen strong growth in the variety and availability of cutting-edge 3D imaging technology for VGR. These components use different (though largely established) 3D imaging methods and related software to capture images and produce a set of 3D points in space, often called a point cloud, that represents the surfaces in the field of view; the output format varies by component. The technology has become more cost-effective, easier to implement, and in some cases more precise, making 3D machine vision a truly viable option for many VGR applications that previously could not be achieved, or made reliable, with 2D imaging.
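Many 3D sensors can deliver a depth image, and converting it into 3D points is a standard back-projection through a pinhole camera model. The sketch below assumes the depth values are Z distances in millimetres (not ranges along the ray), that lens distortion has already been removed, and that the intrinsic parameters fx, fy, cx, cy are known; the values in the example are hypothetical. Pixels reporting zero depth are treated as “no data,” which is common where the active illumination did not return.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (list of rows of Z values, mm) into
    camera-frame 3D points using a pinhole model. Non-positive depths are
    treated as invalid and skipped."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no 3D data at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with one missing pixel (hypothetical intrinsics)
depth = [[1000, 0],
         [1000, 1000]]
print(depth_to_points(depth, fx=500.0, fy=500.0, cx=0.0, cy=0.0))
# [(0.0, 0.0, 1000), (0.0, 2.0, 1000), (2.0, 2.0, 1000)]
```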

Differentiating between 3D imaging components can be difficult, and that topic is broader than we can cover here. However, some key considerations include:

Illumination/imaging technique. 3D systems often use active illumination to create “texture” in the image. Different illumination techniques and imaging concepts exist in the market, and one may be better suited than another to a given application or part type.

Speed of acquisition and image resolution. Some systems create the 3D image from a single capture. Others use multiple images with varying illumination patterns, potentially achieving better accuracy. These factors determine how quickly the 3D image is produced and, to an extent, its resolution.

Processing software. Ultimately, the system must be able to locate the randomly oriented parts in a way that provides a suitable grip option for the robot. The real differentiation between components is in the software suite used to perform this task. While ease of use can matter, the diversity of available software tools is often the real key to the success and reliability of the application. For example, CAD-trained 3D model matching is a popular and widely implemented search tool, but used alone it is not always sufficient for every application.
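As one example of the multi-image approach mentioned under acquisition speed, some structured-light systems project a sinusoidal pattern several times with a phase shift between captures. The sketch below shows the well-known four-step variant, assuming the intensity at a pixel follows I_k = A + B·cos(φ + k·π/2) for k = 0..3. The ambient level A and contrast B cancel out, which is part of why multi-image methods can be more robust; the wrapped phase φ still has to be unwrapped and triangulated into depth, which is not shown. Whether a given commercial sensor works this way is not implied.

```python
import math


def wrapped_phase(i0, i1, i2, i3):
    """Per-pixel phase from four images of a sinusoidal pattern, each shifted
    by 90 degrees. Model: I_k = A + B*cos(phi + k*pi/2).
    Then i3 - i1 = 2B*sin(phi) and i0 - i2 = 2B*cos(phi)."""
    return math.atan2(i3 - i1, i0 - i2)


def modulation(i0, i1, i2, i3):
    """Recovered pattern contrast B; low values flag unreliable pixels."""
    return 0.5 * math.hypot(i3 - i1, i0 - i2)


def simulate(a, b, phi):
    """Synthetic intensities for one pixel under the model above."""
    return [a + b * math.cos(phi + k * math.pi / 2) for k in range(4)]


samples = simulate(a=100.0, b=50.0, phi=1.0)
print(wrapped_phase(*samples))  # approximately 1.0
print(modulation(*samples))     # approximately 50.0
```

The 1/4-period shift is a design choice: it makes the arithmetic reduce to two subtractions and one `atan2` per pixel, at the cost of four captures instead of one.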

Mixed-part pick

One of the most challenging cutting-edge 3D VGR applications is handling mixed objects that are randomly oriented in a bin, stack, or pile. This type of application is found most notably in warehousing and distribution, order fulfillment, shipping, and mail processing. In 3D processing, the biggest difficulty is identifying a surface on each different object that allows the gripper chosen for the application to remove it from the bin or stack. Despite its challenges, this futuristic-sounding application is already realistic in many cases and should soon see broader practical use.
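For suction grippers, a simple way to think about “a surface that allows the part to be removed” is a patch of 3D points that is flat enough for a cup to seal and not tilted too far from the approach direction. The sketch below is a deliberately basic heuristic, not how any particular commercial system works: it fits a least-squares plane z = a·x + b·y + c to a small patch and accepts it if the RMS deviation and tilt fall under thresholds. The threshold values are hypothetical, and fitting z as a function of x and y assumes the patch is not close to vertical (steep patches would be rejected anyway).

```python
import math


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points.
    Returns (a, b, c, rms) or None if the points are degenerate."""
    n = len(points)
    if n < 3:
        return None
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    mz = sum(p[2] for p in points) / n
    sxx = sxy = syy = sxz = syz = 0.0
    for x, y, z in points:
        dx, dy, dz = x - mx, y - my, z - mz
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
        sxz += dx * dz
        syz += dy * dz
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        return None  # collinear or repeated points: no unique plane
    a = (sxz * syy - syz * sxy) / det
    b = (syz * sxx - sxz * sxy) / det
    c = mz - a * mx - b * my
    rms = math.sqrt(sum((z - (a * x + b * y + c)) ** 2 for x, y, z in points) / n)
    return a, b, c, rms


def is_suction_candidate(points, flat_tol_mm=0.5, max_tilt_deg=20.0):
    """Hypothetical check: flat enough to seal and not too steep to approach."""
    fit = fit_plane(points)
    if fit is None:
        return False
    a, b, _, rms = fit
    tilt_deg = math.degrees(math.atan(math.hypot(a, b)))
    return rms <= flat_tol_mm and tilt_deg <= max_tilt_deg


flat = [(x, y, 100.0) for x in range(5) for y in range(5)]
steep = [(x, y, 0.5 * x) for x in range(5) for y in range(5)]
print(is_suction_candidate(flat), is_suction_candidate(steep))  # True False
```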

Grasping gripper technologies

While gripping technology is not machine vision, its advancement is ultimately critical in enabling VGR applications. In many 2D guidance tasks, the gripper design must ensure that the act of picking the part does not degrade the location accuracy provided by the vision system. In 3D applications, the gripper must be flexible enough to grasp the object on multiple surfaces with the part in random orientations, and perhaps to handle an extremely wide variety of part types.

Resolution and Accuracy in VGR

While many quality-related inspection tasks depend on individual point information, the machine vision algorithms that perform location for VGR often process many edges or points (tools like pattern matching or particle analysis). Because so many pixels (or edges) are involved in the math, these tools usually achieve good sub-pixel repeatability, often making additional pixel count unnecessary. When the objects or features being located are fairly large in the field of view, a lower-resolution camera is often sufficient for VGR.

Grippers are emerging in the robotic marketplace as a standalone cutting-edge component. Recent developments include “smart” grippers whose movements can be programmed at run time for each part; flexible grippers with soft, tactile fingers; grippers that mimic the action of human hands; and even grippers with “intelligence” that can learn how to best grip a given object. The continued growth of gripping technology is important to achieving truly flexible and adaptable VGR applications.

Finding “invisible” features or objects

A lot has been said recently about “non-visible” imaging in machine vision. (Non-visible is somewhat of a misnomer; it just means things that are not normally visible to the human eye but can be imaged by other means.) Multi-spectral and hyper-spectral cameras can differentiate features or objects based on the “spectral response” of the item by evaluating very narrow wavelength bands that the human eye cannot distinguish. Near infrared (NIR) and shortwave infrared (SWIR) imaging use light wavelengths that are invisible to the human eye but interact with some materials to highlight features that otherwise would not be visible.

These types of imaging are widely applied in industries like food processing, pharmaceutical, medical, and packaging, and these cutting-edge machine vision components are also valuable for robotic guidance. In most cases, the robotic task is inspection and/or 2D guidance. Take, for example, an application that detects and robotically removes debris (sticks, stems, dirt, stones) from a continuous stream of food product such as nuts or fruit. Using hyper-spectral analysis and robotic guidance, this type of application can be fully and reliably automated.
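One widely used way to compare a pixel’s spectrum against known materials is the spectral angle: treat each spectrum as a vector of band intensities and measure the angle between them. Because only the direction of the vector matters, a brighter or darker sample of the same material gives nearly the same angle. The sketch below applies this technique to the debris-sorting idea; the four-band reference spectra, material names, and angle threshold are hypothetical, and a production system would typically use many more bands and a more sophisticated classifier.

```python
import math


def spectral_angle(pixel, reference):
    """Angle (radians) between two spectra; insensitive to overall brightness."""
    dot = sum(p * r for p, r in zip(pixel, reference))
    norm = math.sqrt(sum(p * p for p in pixel)) * math.sqrt(sum(r * r for r in reference))
    if norm == 0:
        raise ValueError("spectrum has zero energy")
    # Clamp to guard against rounding just outside [-1, 1]
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def classify(pixel, references, max_angle):
    """Name of the closest reference within max_angle, or None if no match."""
    best_name, best_angle = None, max_angle
    for name, ref in references.items():
        angle = spectral_angle(pixel, ref)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


# Hypothetical 4-band reference spectra
refs = {"nut": [0.2, 0.4, 0.6, 0.5], "stick": [0.5, 0.5, 0.3, 0.2]}
print(classify([0.4, 0.8, 1.2, 1.0], refs, max_angle=0.1))  # "nut" (same shape, brighter)
print(classify([1.0, 0.0, 0.0, 0.0], refs, max_angle=0.1))  # None (unknown material)
```

In the debris example, pixels that match a debris spectrum (or match nothing) would become candidate locations handed to the robot.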

Collaborating with humans

Perhaps the biggest buzz in robotics is collaborative robots. This valuable and relatively new technology was jump-started in part by industry-driven standards and technical specifications, such as ISO 10218 and ISO/TS 15066, that describe how a robot can safely work alongside a human worker without external guarding or other restrictions, and without causing physical harm. The collaborative robot market is reportedly one of the fastest growing in industrial automation. And when one considers a robot that works more closely with a human, performing more human-like functions, it’s clear that machine vision guidance can play an even more important role in this technology picture.

Guidance for collaborative robots can sometimes be described as “the simple made difficult.” Because the robot operates in the same space as a human, the machine vision often must cope with the same variability in environment and process that a human worker does. That variability can turn a vision task that is relatively simple under tight constraints into a much more difficult one. Machine vision for collaborative applications should expect broad variations in lighting, less dedicated illumination due to operational clearance requirements, and greater variation in part presentation. 3D imaging technology is a good match for collaborative applications; however, today’s industrial 3D components still have some limitations when it comes to providing completely flexible, general-purpose vision for a collaborative arm.