Whether an imaging system measures dimensions, verifies colors, or determines shape, the purpose of machine vision is to distinguish an object from its background. Engineers incorporating machine vision into a production environment investigate camera sensor specifications in great detail, selecting a camera based on sensor size, data rate, and communication protocol. Engineers give equal attention to selecting the proper lens, evaluating such factors as field of view, light throughput, and optical resolution. While these factors are necessary to ensure the performance of a vision system, an often-overlooked, yet crucial aspect of vision is illumination.

Know Your Object

A camera sensor and lens must be matched to application-specific system requirements, and illumination is no different. Different illumination methods will have varying effects on contrast depending on the properties of the imaged object and background. Thus, it is imperative to understand the characteristics of the object and background. Common distinguishing features of the object and background that contribute to selecting the correct illumination method include dimensions, the presence of surface asperities (e.g., holes or pits), and material properties (e.g., texture, color, or specular reflection).

When measuring the dimensions of an object, information about the object's surface is typically disregarded and only the outline is considered. In other tasks, the texture or surface properties can be useful for separating object from background. The texture of the surface determines how brightly the light will reflect off the surface, and in which direction it will reflect. All surfaces fall on a scale from specular (mirror-like, or reflective) to diffuse (light-scattering). Color, in turn, determines which wavelengths of light reflect from a surface.

Knowing how light scatters or reflects off objects allows machine vision engineers to strategically isolate bright objects on dark backgrounds or dark objects on bright backgrounds. Darkfield illumination occurs when the light source is at a shallow angle to the object and is useful for detecting rough surfaces on smooth backgrounds, but can also cause pronounced shadows if the object has depth or structure. Brightfield illumination occurs when the light source is at a very steep angle from the object and is used to make specular objects bright and diffuse objects dark, while causing less shadowing for surfaces with depth.
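The brightfield and darkfield behavior described above can be illustrated with a deliberately simplified toy model. It is a sketch under stated assumptions, not a radiometric calculation: the camera is assumed to look straight down the surface normal, a specular surface is treated as an ideal mirror, a diffuse surface as an ideal Lambertian scatterer, and the `acceptance_half_angle_deg` and `diffuse_capture_fraction` values are hypothetical numbers chosen only for illustration.

```python
import math


def camera_signal(light_angle_deg, surface,
                  acceptance_half_angle_deg=10.0,
                  diffuse_capture_fraction=0.2):
    """Relative signal seen by a camera looking straight down the surface normal.

    light_angle_deg: angle between the light direction and the optical axis
        (0 = along the axis, 90 = grazing the surface).
    surface: "specular" (ideal mirror) or "diffuse" (ideal Lambertian scatterer).
    The two keyword arguments are hypothetical illustration values.
    """
    if surface == "specular":
        # A mirror reflects the ray at the same angle on the other side of the
        # normal, so the camera sees it only inside the lens acceptance cone.
        return 1.0 if light_angle_deg <= acceptance_half_angle_deg else 0.0
    if surface == "diffuse":
        # A Lambertian surface scatters in all directions; the light it receives
        # falls off with the cosine of the illumination angle, and only a small
        # assumed fraction of the scattered light lands in the lens.
        return diffuse_capture_fraction * math.cos(math.radians(light_angle_deg))
    raise ValueError("surface must be 'specular' or 'diffuse'")


for label, angle in (("brightfield (5 deg)", 5.0), ("darkfield (80 deg)", 80.0)):
    specular = camera_signal(angle, "specular")
    diffuse = camera_signal(angle, "diffuse")
    print(f"{label}: specular={specular:.3f}, diffuse={diffuse:.3f}")
```

In this model the specular surface is the brighter one when the light is close to the optical axis, and the diffuse surface is the brighter one when the light rakes across at a shallow angle, which is the qualitative behavior the text describes. Real surfaces sit between the two ideal cases.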

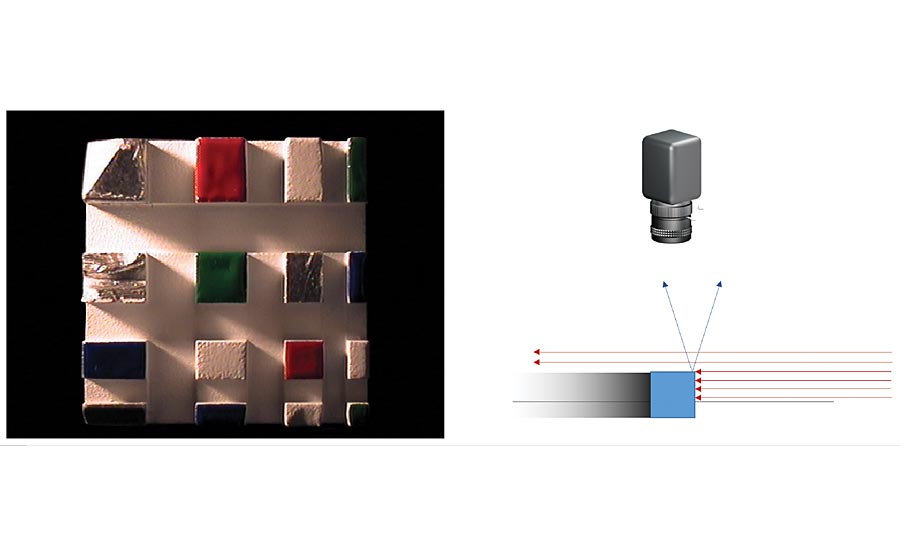

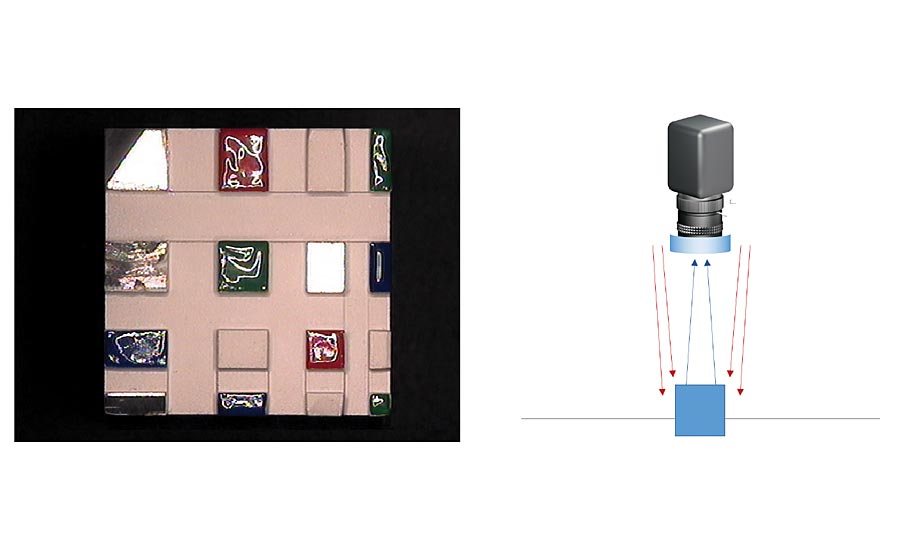

Figure 2. Glancing illumination uses a light source positioned at nearly 90° to the optical axis so that light rays scattered from the object at stark angles are directed toward the camera, creating a darkfield illumination environment.

Types of Illumination

One lighting technique is called directional brightfield illumination. Directional illumination uses one or more light sources positioned such that the light rays travel in a single direction at some moderate angle with respect to the line-of-sight of the camera. Brightfield illumination refers to the background of the object being brighter than the object (Figure 1).

This corresponds, for example, to standard orientations one might use to capture casual images with a cellphone: the light source being the sun, shining above and behind the photographer, distributed evenly on the subject. This imaging geometry is straightforward in setup and resulting photos show object detail but hide or obscure features in shadow or with glare. The same characteristics apply in machine vision.

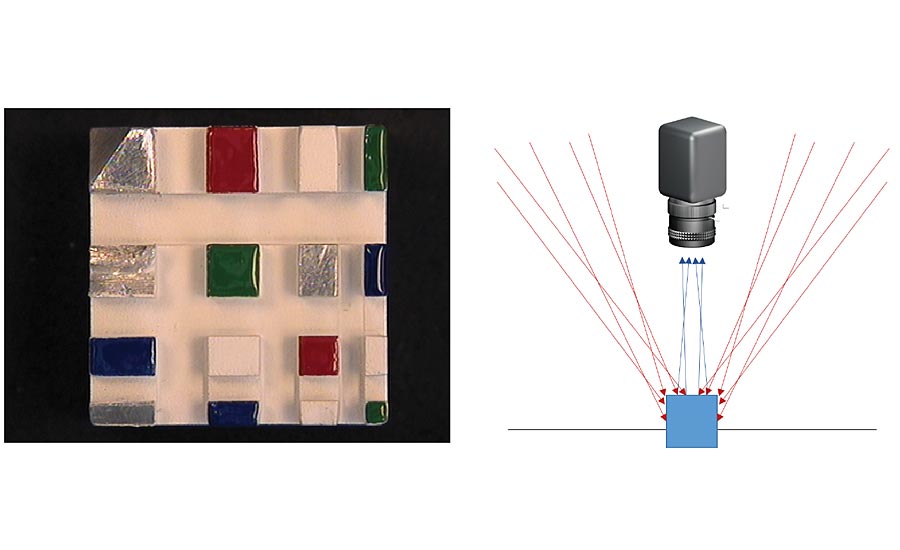

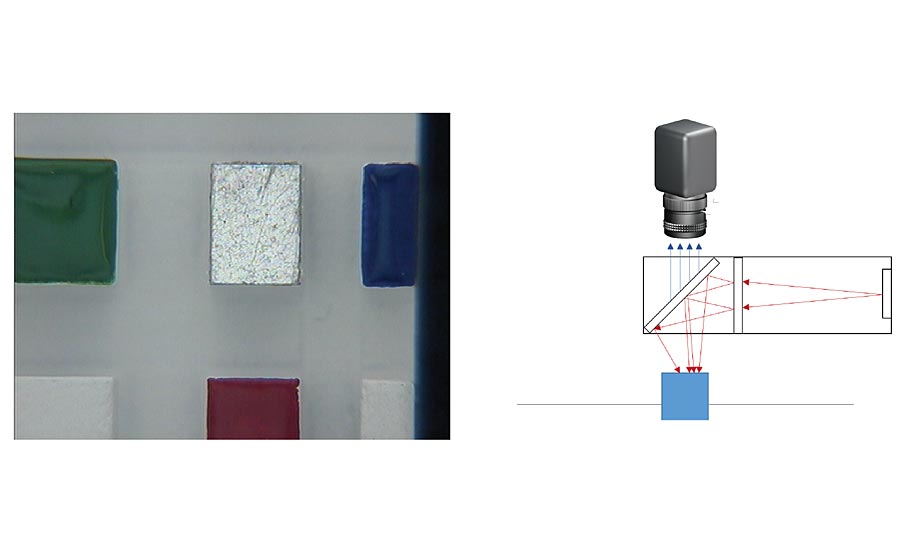

Figure 3. In diffuse illumination conditions, light rays from many angles (and potentially from many sources) strike the object and reflect in many directions, such that much of the reflected light enters the camera.

Directional darkfield illumination, or glancing illumination, places the light source at close to 90° from the line-of-sight so light rays rake across the object, putting topographical differences into sharp relief. As a result, the background of the image appears darker than the object, an illumination environment referred to as darkfield illumination (Figure 2).

This sharp relief creates shadows and hotspots that obscure detail. However, glancing illumination is useful for finding asperities (pits, rough edges, or bumps) on objects that have otherwise smooth surfaces.

Diffuse illumination uses light from many directions, reducing shadows and glare. Like directional illumination, diffuse illumination can be brightfield or darkfield, or a mix of both. However, when diffuse illumination is both brightfield and darkfield, additional contrast is not gained on specular or diffuse objects as both will appear similarly bright (Figure 3).

Figure 4. Coaxial lighting is typically achieved by using a ring light, which directs most of the light rays down the optical axis and onto the object, which reflects the light back up the optical axis.

Because clouds scatter light from the sun, photos taken on cloudy days appear diffusely lit. Diffuse illumination is best suited for large, reflective objects and can be achieved with many distant non-directional light sources, such as a ceiling full of linear fluorescent fixtures.

Coaxial illumination can be provided by a ring light around the lens, which directs light down the line-of-sight. This is a form of diffuse brightfield illumination (Figure 4).

Diffuse axial illumination is similar to coaxial illumination in that light is sent down the line-of-sight, but generally entails a more complex setup, with a beamsplitter in front of the lens. This is also a form of diffuse brightfield illumination (Figure 5).

Both techniques are exceptional at providing even illumination at well-defined working distances, with coaxial illumination being superior for matte surfaces while diffuse axial illumination works better for reflective objects. Because shadowing is nearly eliminated, these two techniques are best applied to objects with starkly different surface properties such as color or reflectivity.

Achieving high image contrast is, of course, the main objective of most imaging applications. An image must have significant differences between, for example, product edges and the background, a liquid level and the air in the empty container above it, or the text of a label and a printed pattern. Additional, more specialized illumination techniques, such as collimated, in-line, or telecentric illumination, enhance the contrast of edges or defects in translucent or transparent objects. In these cases, and many others, varied illumination techniques can help. There is, however, another tool for improving contrast in machine vision that has been adapted from human vision: distinguishing color.
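The contrast goal described above can be put into a number. One common choice, used here as an assumption because the article does not name a specific metric, is Michelson contrast: the difference between two gray levels divided by their sum. The function name and the sample gray levels below are hypothetical.

```python
def michelson_contrast(object_level, background_level):
    """Michelson contrast between two non-negative mean gray levels.

    Returns a value from 0.0 (indistinguishable) to 1.0 (one region is black).
    """
    brightest = max(object_level, background_level)
    darkest = min(object_level, background_level)
    if brightest + darkest == 0:
        # Both regions are completely black, so there is nothing to separate.
        return 0.0
    return (brightest - darkest) / (brightest + darkest)


# Hypothetical 8-bit gray levels for an object and its background.
print(round(michelson_contrast(200, 50), 3))   # well-separated regions
print(round(michelson_contrast(120, 110), 3))  # nearly identical regions
```

Comparing this figure before and after a lighting change gives a simple way to judge whether the change actually helped.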

Figure 5. Diffuse axial illumination entails illuminating an object from above, via a light source at a 90° angle to the optical axis, shining through a beamsplitter, such that the reflected rays propagate upward, through the beamsplitter, to the camera.

Using Color to an Advantage

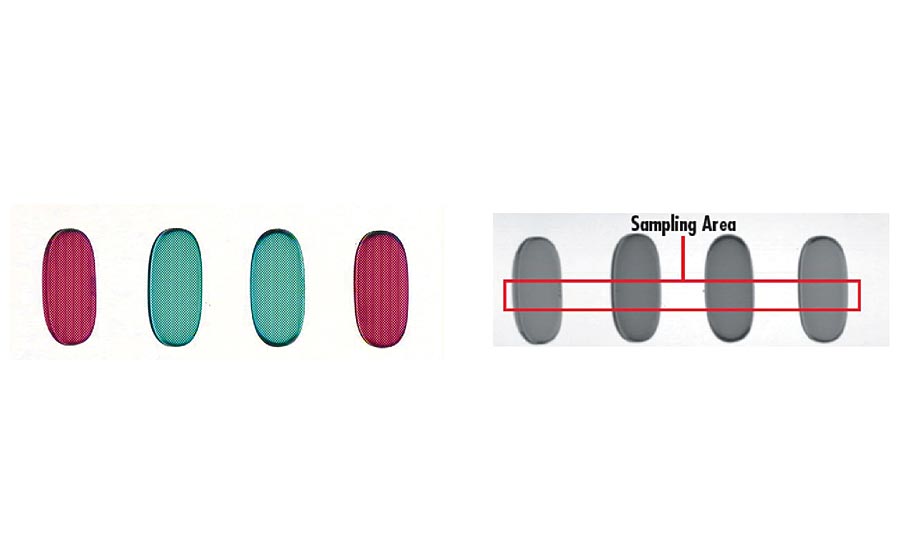

Contrast can be increased by separating the colors of objects and backgrounds, and a color camera is not required to benefit. By using filters to select which wavelengths are transmitted to the sensor, high-contrast monochrome images can be obtained (Figure 6).

Colored illumination can be used both in backlighting, for transparent objects, and in front lighting, for opaque objects. Illuminating a colored object with light of the same wavelength as the color of the object will usually make the object appear bright, while objects of other colors will typically appear darker.
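The effect of a color filter on a monochrome image can be sketched with a coarse three-band model. Everything numeric here is an assumption for illustration: the reflectance values for the red and green pills, the filter transmissions, and the idea that a monochrome sensor simply sums the red, green, and blue bands with equal weight.

```python
BANDS = ("red", "green", "blue")

# Hypothetical fraction of light each pill reflects in each band.
RED_PILL = {"red": 0.80, "green": 0.10, "blue": 0.10}
GREEN_PILL = {"red": 0.10, "green": 0.80, "blue": 0.10}

# Hypothetical filter transmissions per band.
NO_FILTER = {"red": 1.00, "green": 1.00, "blue": 1.00}
GREEN_FILTER = {"red": 0.05, "green": 0.90, "blue": 0.05}


def gray_level(reflectance, filter_transmission):
    """Relative monochrome signal: reflected light passed by the filter, summed over bands."""
    return sum(reflectance[band] * filter_transmission[band] for band in BANDS)


for name, flt in (("no filter", NO_FILTER), ("green filter", GREEN_FILTER)):
    red = gray_level(RED_PILL, flt)
    green = gray_level(GREEN_PILL, flt)
    print(f"{name}: red pill={red:.3f}, green pill={green:.3f}")
```

With these assumed values the two pills produce the same gray level without a filter, while the green filter leaves the green pill several times brighter than the red one. A real design would use measured spectral curves for the light source, filter, object, and sensor.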

Polarizing Light to Reduce Glare

Color is not the only property by which light can be differentiated. The polarization state of light can be manipulated with polarizers to reduce glare and improve image contrast. In general, light originating from a source such as the sun or a lightbulb has no specific polarization state. When a polarized light source is paired with a linear polarizer in front of the lens, rotated so that its axis of polarization is 90° from that of the light source, neither the light coming directly from the source nor the light reflecting off a specular surface will enter the lens. Polarized light scattered from diffuse surfaces will still partially pass through the crossed polarizer and lens. This property of light is used in a way similar to darkfield illumination to make specular objects darker and diffuse objects brighter, but it is done from steeper angles so as not to cause the shadowing problems common with darkfield illumination methods.
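The crossed-polarizer arrangement can be sketched with Malus's law, which gives the fraction of linearly polarized light passed by an ideal linear polarizer as the squared cosine of the angle between the two polarization axes. The sketch assumes ideal polarizers, assumes specular reflection fully preserves polarization, and assumes diffuse scattering fully depolarizes the light, so that half of it passes. Real surfaces only approximate these limits, and the function names are hypothetical.

```python
import math

# An ideal linear polarizer passes half of completely unpolarized light.
UNPOLARIZED_FRACTION = 0.5


def malus_fraction(angle_deg):
    """Fraction of linearly polarized light passed by an ideal polarizer (Malus's law)."""
    return math.cos(math.radians(angle_deg)) ** 2


def through_lens_polarizer(specular_part, diffuse_part, angle_deg):
    """Return (specular, diffuse) light reaching the sensor.

    angle_deg is the angle between the source polarization axis and the
    polarizer in front of the lens; 90 means the polarizers are crossed.
    Assumption: specular light keeps its polarization, diffuse light loses it.
    """
    specular_out = specular_part * malus_fraction(angle_deg)
    diffuse_out = diffuse_part * UNPOLARIZED_FRACTION
    return specular_out, diffuse_out


for angle in (0, 45, 90):
    glare, signal = through_lens_polarizer(1.0, 1.0, angle)
    print(f"{angle:>2} deg: glare={glare:.3f}, diffuse signal={signal:.3f}")
```

Rotating the lens polarizer toward 90° drives the specular term toward zero while the diffuse term stays constant in this model, which is why the specular objects darken relative to the diffuse ones.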

Figure 6. Adding a green filter to a monochromatic machine vision system inspecting red and green pills greatly enhances the contrast between the two types of pills.

The Lighting for Your Application

Consider, for example, a situation where workers sort yellow and blue blocks as they move down a conveyor belt. Yellow blocks get pushed up and blue blocks get pulled down. You are tasked with improving the efficiency of the process using machine vision. You put a monochrome camera above the conveyor belt, but glare from the reflective plastic surfaces obscures the signal. In addition, the blue and yellow blocks reflect roughly the same percentage of incident white light, so there is little contrast with which to work. You could be tempted to resign yourself to continuing the slow, inefficient manual process, but you do not want to give up on the economy and speed of a machine vision solution. Rather than looking at the lens or sensor, consider modifying the illumination.

You could redesign the lighting above the belt to make the illumination more diffuse, then add a blue bandpass filter to make the yellow blocks significantly darker than the blue. That might work, but since the conveyor belt confines the blocks to a given plane, and you are not interested in surface features or roughness, you have the option of coaxial illumination. A polarized ring light coupled with a polarizer in front of the camera will reduce glare. The color filter will still come in handy for enhancing contrast.
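The block-sorting scenario can be worked through numerically with a toy model that combines glare removal and color filtering. All numbers are hypothetical: the per-band reflectances are chosen so that both blocks reflect about the same total white light, glare is modeled as an equal white contribution in every band, crossed polarizers are assumed to remove glare completely, and the loss of diffuse light in the polarizer is ignored because it scales both blocks equally.

```python
BANDS = ("red", "green", "blue")

# Hypothetical diffuse reflectances; both sum to about 0.95 under white light.
YELLOW_BLOCK = {"red": 0.45, "green": 0.45, "blue": 0.05}
BLUE_BLOCK = {"red": 0.10, "green": 0.15, "blue": 0.70}
GLARE_PER_BAND = 0.50  # hypothetical specular glare added in every band


def gray_level(block, blue_filter, crossed_polarizers):
    """Relative monochrome signal for one block under the chosen setup."""
    bands = ("blue",) if blue_filter else BANDS  # ideal blue bandpass filter
    glare = 0.0 if crossed_polarizers else GLARE_PER_BAND
    return sum(block[band] + glare for band in bands)


def contrast(level_a, level_b):
    """Michelson contrast between two gray levels."""
    total = level_a + level_b
    return abs(level_a - level_b) / total if total else 0.0


for blue_filter in (False, True):
    for crossed in (False, True):
        yellow = gray_level(YELLOW_BLOCK, blue_filter, crossed)
        blue = gray_level(BLUE_BLOCK, blue_filter, crossed)
        print(f"filter={blue_filter}, polarizers={crossed}: "
              f"contrast={contrast(yellow, blue):.2f}")
```

Under these assumptions the polarizers alone do not separate the blocks, the filter alone gives moderate contrast that glare still dilutes, and the two together give the strongest separation, which matches the recommendation to keep the color filter alongside the polarized ring light.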

Your specific application may require a different combination of illumination methods and filters or polarizers, but the performance of a machine vision system is most certainly enhanced by giving as much attention to lighting as to the selection of a lens or sensor. V&S