Three-dimensional (3D) imaging is used in many different industries, in applications ranging from industrial pick and place, palletization/depalletization, warehousing, robotics, and metrology to consumer-based products such as drones, safety and security, and patient monitoring. No single type of 3D technology can solve all of these applications, so the features of each must be compared to judge its suitability for a given task.

3D imaging systems can be classified as either passive or active. In passive systems, ambient or broad, fixed illumination is used to illuminate the object. Active systems, by contrast, spatially or temporally modulate light to scan 3D objects, using methods such as laser line scanning, speckle projection, fringe pattern projection, or time of flight (ToF). In both passive and active 3D imaging systems, reflected light from the illuminated object is captured, often by a CMOS-based camera, to generate a depth map of the structure and then, if required, a 3D model of the object.
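To make the step from a depth map to 3D data more concrete, the sketch below back-projects each depth pixel through an ideal pinhole camera model. It is a minimal illustration under stated assumptions, not any particular vendor's pipeline: lens distortion is ignored, each depth value is taken as the Z distance in metres, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values that a real system would obtain from calibration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]

def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth map (rows of Z values in metres) to 3D points.

    Assumes an ideal pinhole camera with hypothetical intrinsics:
    fx, fy = focal lengths in pixels; cx, cy = principal point in pixels.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):        # v = pixel row index
        for u, z in enumerate(row):        # u = pixel column index, z = depth
            if z <= 0:                     # skip missing / invalid depth
                continue
            x = (u - cx) * z / fx          # horizontal offset from optical axis
            y = (v - cy) * z / fy          # vertical offset from optical axis
            points.append((x, y, z))
    return points
```

The resulting list of (x, y, z) tuples is a simple point cloud; building a surface "3D model" from it would be a further, separate step.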

Time-of-Flight

While laser scanners can achieve surface height resolutions better than 10 µm at short working distances, other applications demand longer range. Navigation, people monitoring, obstacle avoidance, and mobile robots, for example, require working distances of several meters. In such applications, it is often only necessary to determine whether an object is present and to measure its position to within a few centimeters.

Other applications, such as automated materials handling systems, operate at moderate distances of one to three meters and require more accurate measurements of about one to five millimeters. For such applications, Time-of-Flight (ToF) imaging can be a competitive solution. ToF systems measure, for each point in the image, the time it takes for light emitted from the device to reflect off objects in the scene and return to the sensor.
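The underlying arithmetic is simple: the light covers the sensor-to-object distance twice, so the distance is half the round-trip time multiplied by the speed of light. The short sketch below (function names are illustrative, and the vacuum speed of light is used as an approximation for air) also inverts that relation to show the timing precision that millimetre-level accuracy implies.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; close enough to air for a sketch

def distance_from_round_trip(t_round_trip_s: float) -> float:
    """Distance to the target: the light travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * t_round_trip_s / 2.0

def timing_resolution_for(depth_resolution_m: float) -> float:
    """Round-trip time difference corresponding to a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT
```

For a target at 2 m the round trip takes about 13.3 ns, while a 1 mm change in depth changes the round-trip time by only about 6.7 ps.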

For applications such as automated vehicle guidance, LIDAR scanners can be used to produce a map of their surroundings by emitting a laser pulse that is scanned across the device’s field of view (FOV) using a moving mirror. The emitted light is reflected off objects back to the laser scanner’s receiver. The returned signal carries both the reflectivity of the object (the attenuation of the signal) and the time delay, which is used to calculate depth through ToF.
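As a rough illustration of how a scanning LIDAR turns these measurements into a map, the sketch below converts one mirror sweep of (beam angle, round-trip time) pairs into 2D points in the scanner's own frame. It assumes a single scan plane, angles measured from the scanner's forward (x) axis, and `None` for pulses that produced no echo; the reflectivity channel described above is left out for brevity.

```python
import math
from typing import List, Optional, Sequence, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def scan_to_points(angles_deg: Sequence[float],
                   round_trip_times_s: Sequence[Optional[float]]
                   ) -> List[Tuple[float, float]]:
    """Convert one sweep of (angle, time delay) measurements to 2D points."""
    points: List[Tuple[float, float]] = []
    for angle, t in zip(angles_deg, round_trip_times_s):
        if t is None:                       # no echo: nothing within range
            continue
        r = SPEED_OF_LIGHT * t / 2.0        # ToF range along the beam
        theta = math.radians(angle)         # beam direction set by the mirror
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

Accumulating such sweeps while tracking the vehicle's own motion is what builds up the map of the surroundings.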

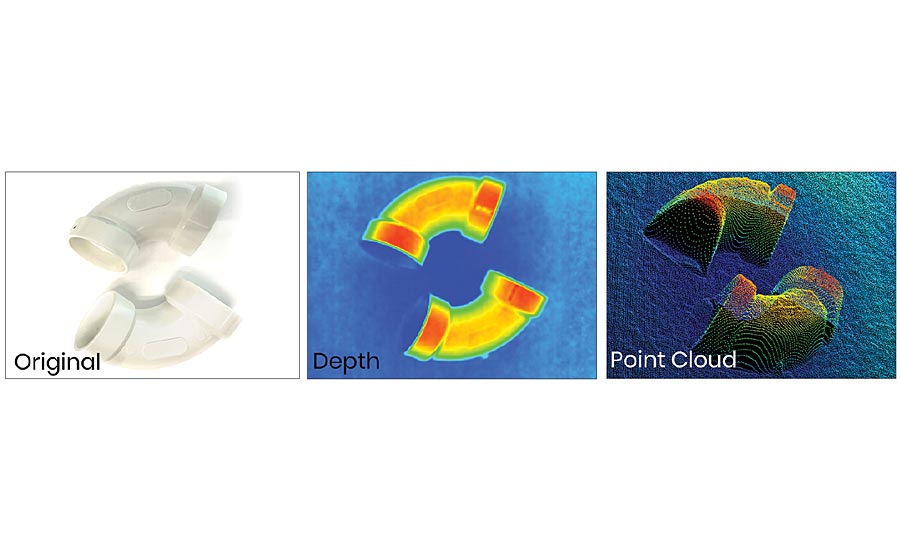

In both passive and active 3D imaging systems, reflected light from the object being illuminated is captured to generate a depth map of the structure and then, if required, a 3D model of the object.

Pulsing or Waving

ToF cameras use one of two techniques: pulse modulation (also known as direct ToF) or continuous wave (CW) modulation. Direct ToF emits a short pulse of light and measures the time it takes to return to the camera. CW techniques emit a continuously modulated signal and measure the phase difference between the emitted and returning light, which is proportional to the distance to the object within one modulation period. Phase-based devices are available from several companies today.
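For the CW case, one common way to recover the phase is to sample the correlation between emitted and returned light at four offsets 90° apart. The sketch below assumes samples of the form c_k = B + A·cos(φ + k·π/2) for k = 0..3, where B is a background offset, A the amplitude, and φ the phase shift; real sensors differ in sample ordering and sign conventions, so treat this as an illustration rather than any device's method. Because the phase wraps every 2π, distances are only unambiguous up to c / (2·f_mod).

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def cw_distance(c0: float, c1: float, c2: float, c3: float,
                f_mod_hz: float) -> float:
    """Distance from four correlation samples taken 90 degrees apart.

    Assumes c_k = B + A*cos(phi + k*pi/2), so c3 - c1 = 2A*sin(phi)
    and c0 - c2 = 2A*cos(phi); the offset B cancels out.
    """
    phi = math.atan2(c3 - c1, c0 - c2) % (2 * math.pi)  # wrap to [0, 2*pi)
    # Phase accumulates over the round trip (2d), hence 4*pi rather than 2*pi.
    return SPEED_OF_LIGHT * phi / (4 * math.pi * f_mod_hz)

def unambiguous_range(f_mod_hz: float) -> float:
    """Beyond this distance the phase wraps and distances alias."""
    return SPEED_OF_LIGHT / (2 * f_mod_hz)
```

At a 20 MHz modulation frequency, for instance, the unambiguous range is about 7.5 m, so a target at 9 m would be reported at roughly 1.5 m unless the system resolves the ambiguity, for example by combining measurements at several modulation frequencies.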

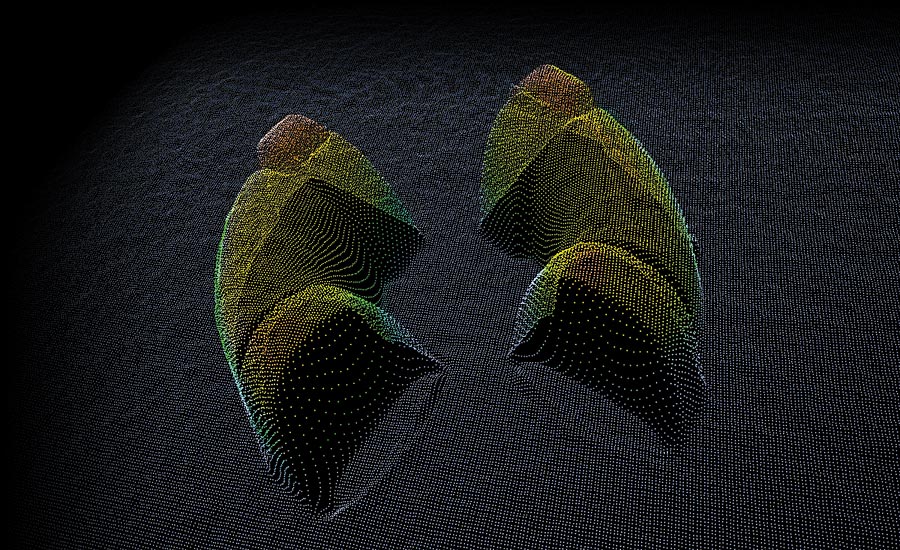

The point cloud data can be transferred over the camera’s GigE interface and further processed using software.
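What that software does depends on the application. As one hypothetical example, matching the presence-and-position task described for navigation and mobile robots, the sketch below checks whether enough points fall inside an axis-aligned region of interest and, if so, returns their centroid as the object's position. The box corners and the `min_points` noise threshold are illustrative assumptions, not parameters of any camera or SDK.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]

def object_in_roi(points: Sequence[Point], roi_min: Point, roi_max: Point,
                  min_points: int = 50) -> Optional[Point]:
    """Return the centroid of the points inside a box, or None if too few."""
    # Keep only points whose x, y and z all lie within the box limits.
    inside = [p for p in points
              if all(lo <= c <= hi for c, lo, hi in zip(p, roi_min, roi_max))]
    # Too few points: treat as noise, i.e. no object present.
    if len(inside) < min_points:
        return None
    n = len(inside)
    # Centroid = per-axis mean of the points inside the box.
    return (sum(p[0] for p in inside) / n,
            sum(p[1] for p in inside) / n,
            sum(p[2] for p in inside) / n)
```

A simple threshold-and-average approach like this is often adequate when centimeter-level position is all that is needed; tighter tolerances would call for more careful segmentation and filtering.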

Conclusion

Time-of-flight (ToF) technology, such as Sony’s DepthSense sensor used in machine vision cameras, presents a new set of opportunities for those developing 3D imaging systems. First, because the system uses only a single camera, the developer does not need to perform any calibration. Second, the system is much less affected by adverse lighting conditions than traditional passive stereo. Third, the camera outputs point cloud data directly, offloading processing from the host PC. Finally, the system is relatively low-cost: less expensive than high-performance active laser systems and comparable in price with projected laser light stereo systems. V&S