Accurate, reliable leak testing depends on repeatable calibration. Properly calibrating your test instruments reduces the risk of costly, time-consuming scrap and rework caused by parts that are incorrectly passed or failed.

For an accurate test result, you must account for any environmental factors that could affect the reliability of the pressure measurements taken during your leak test. This is where calibration comes in.

One common alternative to calibration is to measure pressure drop over time and then conduct validation studies to prove that a given pressure drop over time is not associated with a leak in the parts being tested. Once you have identified a known leak rate, you can correlate it to other parts on your line that are similarly designed but have different volumes. However, this process is time-consuming and ultimately not very accurate, especially when a single line handles several parts with different volumes and design factors.
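The volume correlation described above can be sketched as a simple scaling rule. This is a minimal sketch assuming ideal-gas, isothermal behaviour and an identical leak rate, test time, and test pressure, so that pressure drop is inversely proportional to internal volume. The helper `scale_decay_limit` and all numbers are hypothetical. Those assumptions rarely hold exactly on a real line, which is why this approach is less accurate than it looks.

```python
def scale_decay_limit(limit_ref_psi: float, volume_ref_cc: float,
                      volume_new_cc: float) -> float:
    """Scale a validated pressure-drop limit from a reference part to a part
    with a different internal volume.

    Hypothetical assumptions: same leak rate, test time, test pressure and
    temperature, and ideal-gas behaviour, so pressure drop ~ 1 / volume.
    """
    if volume_ref_cc <= 0 or volume_new_cc <= 0:
        raise ValueError("volumes must be positive")
    return limit_ref_psi * volume_ref_cc / volume_new_cc


if __name__ == "__main__":
    # Hypothetical: a 0.12 psi reject limit validated on a 100 cc part.
    for volume in (50.0, 100.0, 500.0):
        limit = scale_decay_limit(0.12, 100.0, volume)
        print(f"{volume:6.0f} cc part -> reject above {limit:.3f} psi")
```

With these numbers the 500 cc part gets a limit of 0.024 psi, five times tighter than the reference part, so any unmodeled thermal or expansion effect consumes a proportionally larger share of the limit.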

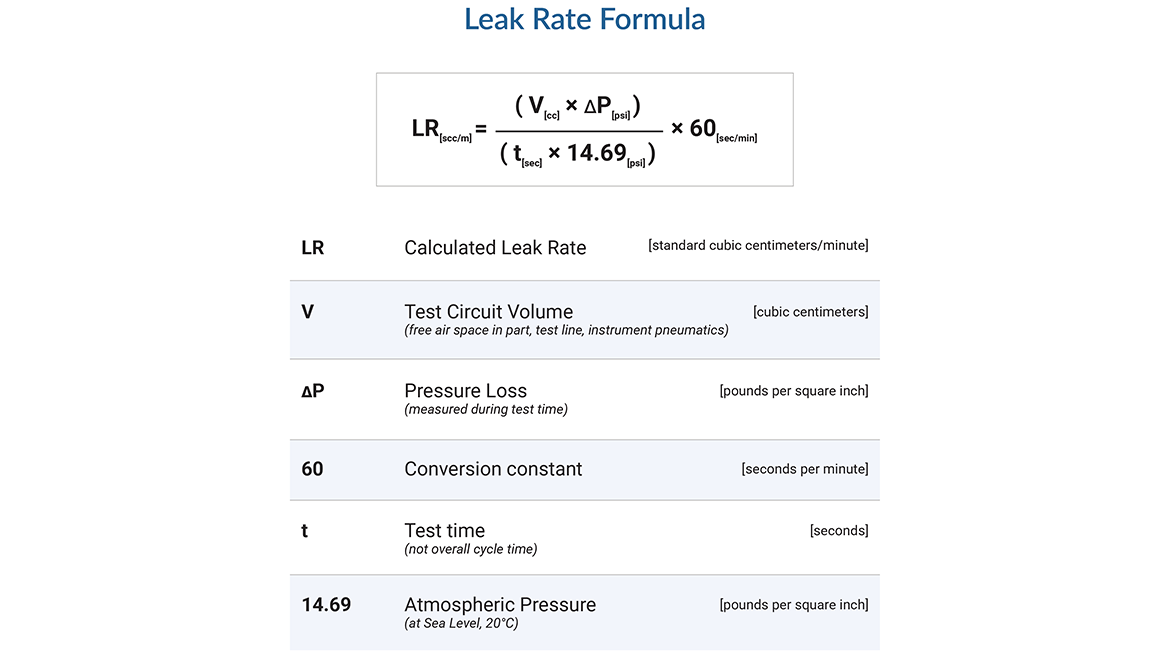

Most testing environments introduce some noise into the test process in the form of ambient temperature, atmospheric pressure, and test air temperature. A good calibration process allows you to accurately identify true “leak measurements” in the specific environment where you are testing. A raw pressure drop over time depends on part volume, temperature, and atmospheric pressure. Calibrating to a known cc/m leak rate (see leak rate formula) instead accounts for the variables that could affect your test results, helping ensure you are always measuring for, and shipping, good-quality parts.
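For reference, a commonly quoted pressure-decay conversion is Q [cc/m] = V [cc] × ΔP [psi] × 60 / (t [s] × P_atm [psi]). It is assumed here and may not match the article's own leak rate formula in units or terms. The sketch below, using a hypothetical `leak_rate_ccm` helper, shows how the same raw pressure drop maps to different leak rates depending on part volume and atmospheric pressure, which is why a bare pressure-drop limit does not carry over between parts or sites.

```python
STANDARD_ATM_PSI = 14.7  # assumed sea-level reference pressure


def leak_rate_ccm(volume_cc: float, decay_psi: float, test_time_s: float,
                  p_atm_psi: float = STANDARD_ATM_PSI) -> float:
    """Convert a measured pressure decay to a leak rate in cc/min.

    Uses the commonly quoted relation Q = V * dP * 60 / (t * P_atm).
    V is typically the whole pressurized volume (part plus test circuit).
    """
    if volume_cc <= 0 or test_time_s <= 0 or p_atm_psi <= 0:
        raise ValueError("volume, test time and atmospheric pressure must be positive")
    return volume_cc * decay_psi * 60.0 / (test_time_s * p_atm_psi)


if __name__ == "__main__":
    # Hypothetical: the same 0.1225 psi drop over 10 s in two different parts.
    for volume in (100.0, 500.0):
        q = leak_rate_ccm(volume, 0.1225, 10.0)
        print(f"{volume:6.0f} cc part: {q:.2f} cc/m")
```

The same 0.1225 psi drop corresponds to 5 cc/m in the 100 cc part but 25 cc/m in the 500 cc part. Passing a lower `p_atm_psi`, as at a higher-elevation plant, also changes the result for an identical drop.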

BENEFITS OF TWO-POINT CALIBRATION VS. SINGLE-POINT CALIBRATION

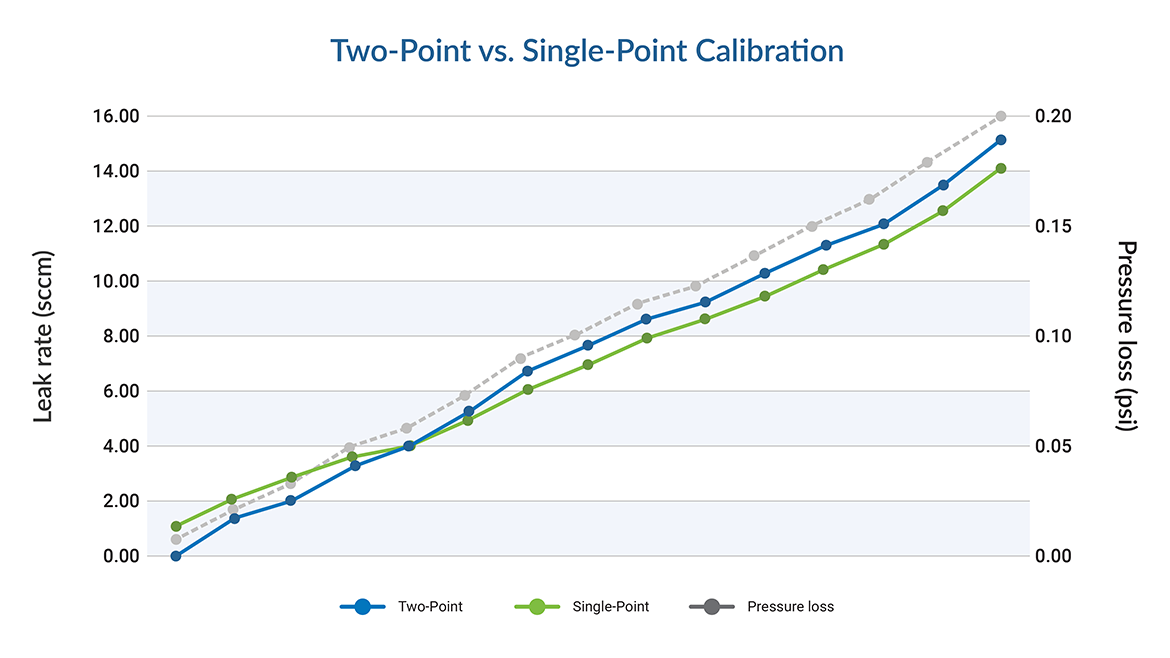

Some manufacturers use a single-point calibration system to measure volumetric flow; however, two-point calibration provides a much higher level of accuracy.

Single-point calibration process

A single-point calibration process sets an arbitrary zero: it assigns zero pressure change to a 0 cc/m flow rate (no leak), regardless of environmental factors, and then calibrates your instrument to a known reject flow rate or leak standard. This one calibrated point is its only reference. As a result, the further a measurement falls from that set flow point, whether the pressure change is larger or smaller, the less accurate your test results become.

Setting an arbitrary zero does not take into account the expansion and contraction of your part, the thermal events that occur as air is compressed, or the atmospheric pressure at zero, which can differ depending on the geographic location of your plant. In addition, if any aspect of the process changes from test to test, even something as simple as the test line length or the temperature of the tested parts, the test results become far less reliable.
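To make the effect of an arbitrary zero concrete, the following sketch simulates single-point calibration. It assumes a hypothetical linear part model in which every test shows a fixed baseline decay (standing in for thermal and expansion effects) plus a decay proportional to the true leak rate. The constants and function names are illustrative, not measured data.

```python
# Hypothetical part model (illustrative values, not measured data).
BASELINE_DECAY_PSI = 0.05  # decay a leak-free part shows anyway (thermal, expansion)
PSI_PER_CCM = 0.02         # additional decay per cc/m of true leak


def simulated_decay(true_leak_ccm: float) -> float:
    """Pressure decay the instrument would see for a given true leak."""
    return BASELINE_DECAY_PSI + PSI_PER_CCM * true_leak_ccm


def single_point_scale(standard_ccm: float, decay_with_standard_psi: float) -> float:
    """One calibration point; zero decay is assumed to mean zero flow."""
    return standard_ccm / decay_with_standard_psi


def single_point_reading(decay_psi: float, scale: float) -> float:
    """Reading is a straight line through the origin and the calibration point."""
    return scale * decay_psi


if __name__ == "__main__":
    reject_ccm = 5.0  # hypothetical leak standard at the reject set point
    scale = single_point_scale(reject_ccm, simulated_decay(reject_ccm))
    for true_leak in (0.0, 2.5, 5.0, 10.0):
        reading = single_point_reading(simulated_decay(true_leak), scale)
        print(f"true {true_leak:4.1f} cc/m -> reads {reading:5.2f} cc/m")
```

With these numbers the reading is exact only at 5 cc/m: a leak-free part reads about 1.67 cc/m, a 2.5 cc/m leak reads about 3.33 cc/m, and a 10 cc/m leak reads about 8.33 cc/m, so the error grows with distance from the calibration point.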

Two-point calibration process

Two-point calibration adds a step to the calibration process. First, a leak-free master part is subjected to a standard decay test as a control to measure its normal pressure decay. This establishes the pressure drop of a non-leaking part as an accurate zero point, because every part, even a leak-free one, shows some pressure change during the test, whether it is driven by a thermal event, expansion and contraction, or other part characteristics.

After a relax period, the same test is repeated with one key difference: a leak standard (with a known leak rate at your reject set point) is placed in the test circuit, allowing pressure in the test part to bleed out through the leak standard. This produces a larger pressure drop in the second test, because pressure is intentionally being released at a known leak, or “flow,” rate.

This yields two pressure drop values: the control value (the expected pressure drop for every like part tested in this atmosphere) and the larger second value (which includes the additional, controlled pressure loss through the leak standard). The difference between these values is the pressure drop produced by the leak standard’s known flow rate (the DP term in the leak rate formula). Knowing this difference, we can separate out all the variation that comes from a normally pressurized part. Any reading above the known reject set point is attributed to a leak, identifying a failed part.
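The two-point procedure amounts to drawing a line through two measured points, (master-part decay, 0 cc/m) and (decay with the leak standard, the standard's rated flow), and reading every later test off that line. The sketch below assumes decay and flow are linearly related between those points and simulates parts with a hypothetical model (a fixed baseline decay plus a decay proportional to the true leak). `TwoPointCalibration` and all values are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoPointCalibration:
    zero_decay_psi: float  # decay of the leak-free master part (control value)
    span_decay_psi: float  # decay with the leak standard in the test circuit
    standard_ccm: float    # rated flow of the leak standard (reject set point)

    def __post_init__(self) -> None:
        if self.span_decay_psi <= self.zero_decay_psi:
            raise ValueError("decay with the leak standard must exceed the control decay")

    def flow_ccm(self, decay_psi: float) -> float:
        """Interpolate flow from decay; the control decay maps to 0 cc/m."""
        span = self.span_decay_psi - self.zero_decay_psi  # DP due to the leak standard
        return self.standard_ccm * (decay_psi - self.zero_decay_psi) / span

    def passes(self, decay_psi: float) -> bool:
        """Readings above the reject set point are treated as leaks."""
        return self.flow_ccm(decay_psi) <= self.standard_ccm


def simulated_decay(true_leak_ccm: float) -> float:
    """Hypothetical part model: baseline decay plus a leak-proportional decay."""
    return 0.05 + 0.02 * true_leak_ccm


if __name__ == "__main__":
    cal = TwoPointCalibration(
        zero_decay_psi=simulated_decay(0.0),  # step 1: master part alone
        span_decay_psi=simulated_decay(5.0),  # step 2: master part + 5 cc/m standard
        standard_ccm=5.0,
    )
    for true_leak in (0.0, 2.5, 5.0, 10.0):
        d = simulated_decay(true_leak)
        verdict = "pass" if cal.passes(d) else "fail"
        print(f"true {true_leak:4.1f} cc/m -> reads {cal.flow_ccm(d):5.2f} cc/m ({verdict})")
```

Because the simulated part is linear, every reading matches the true leak, including the leak-free part. On real parts, the two-point line is only as good as the linearity between the zero and reject points.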

TWO-POINT CALIBRATION HELPS MAINTAIN QUALITY STANDARDS ACROSS PLANTS

Another benefit of two-point calibration is that you can use it to correlate leak rates across all of your plants, because each calibration controls for local environmental factors. With two-point calibration, you can reliably correlate testing in one atmosphere to testing in another.

Without this method, plant-to-plant correlation is difficult, because you are comparing your results against only one standardized point along the slope of all measurements. With single-point calibration, differences in atmospheric pressure between locations introduce variation that undermines accurate comparison.
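A small simulation illustrates the plant-to-plant argument. It assumes two hypothetical plants whose conditions give different baseline decay and different decay per cc/m of leak (invented stand-ins for atmospheric pressure, temperature, and fixturing differences), both calibrated with the same leak standard on their own master parts. Under this linear model, single-point readings for the same true leak disagree between plants, while two-point readings agree.

```python
STANDARD_CCM = 5.0  # same leak standard used at both plants

# Hypothetical plant conditions: (baseline decay in psi, psi of decay per cc/m of leak)
PLANTS = {"plant_a": (0.05, 0.020), "plant_b": (0.09, 0.016)}


def decay(plant: str, true_leak_ccm: float) -> float:
    """Simulated decay at a given plant for a given true leak."""
    baseline, psi_per_ccm = PLANTS[plant]
    return baseline + psi_per_ccm * true_leak_ccm


def single_point_reading(plant: str, decay_psi: float) -> float:
    """Line through the origin and the leak-standard point."""
    return STANDARD_CCM * decay_psi / decay(plant, STANDARD_CCM)


def two_point_reading(plant: str, decay_psi: float) -> float:
    """Line through the master-part point and the leak-standard point."""
    zero = decay(plant, 0.0)
    span = decay(plant, STANDARD_CCM) - zero
    return STANDARD_CCM * (decay_psi - zero) / span


if __name__ == "__main__":
    true_leak = 2.5  # the same marginal part tested at both plants
    for plant in PLANTS:
        d = decay(plant, true_leak)
        print(f"{plant}: single-point {single_point_reading(plant, d):.2f} cc/m, "
              f"two-point {two_point_reading(plant, d):.2f} cc/m")
```

Here the single-point method reads about 3.33 cc/m at plant A and 3.82 cc/m at plant B for the same part, while the two-point method reads 2.50 cc/m at both.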

BENEFITS OF USING LEAK STANDARDS VS. REFERENCE PARTS FOR CALIBRATION

It’s always more accurate to use a leak standard for calibration than a reference part with an inherent leak. The trouble with calibrating against known leaking reference parts is that it is difficult to create a part that replicates a marginal pass/fail leak rate (unless you mount a leak standard into a known non-leaking part). Variation in the flow characteristics of a leak path within a part can affect the accuracy and repeatability of the measurement. Ultimately, leaking parts are not repeatable, and the goal is accurate, repeatable calibration. That is why we suggest using leak standards instead. Leak standards are designed to have repeatable laminar flow, unlike the turbulent flow of a part leaking through porosity or a damaged seal. Calibrating with a leak standard helps ensure repeatable calibration for a closely controlled production process.

REGULAR CALIBRATION VERIFICATION IS IMPORTANT TO MAINTAIN TEST ACCURACY

Recalibration is recommended on a regular basis to ensure the continued accuracy of your leak test results. Depending on your testing instruments and the types of parts, manufacturers may choose to recalibrate their instruments annually, monthly, weekly, or even with every operator shift change.

However, running a full recalibration frequently can disrupt your manufacturing process: it is time-consuming, it can create bottlenecks, and operators must be trained on proper calibration methods. Instead, calibration verification can be incorporated into your process to maintain consistent accuracy with a smaller time commitment and a shorter learning curve.

To conduct calibration verification, you need a known good part that is separate from your master part. First, connect your known good part to a leak standard or external connection rated 10-20% above your reject rate. The test should produce a failed result, and the measured flow should read close to the leak standard’s rated value, verifying your calibration accuracy. Second, connect a leak standard or external connection rated 10-20% below your reject rate. The test should produce a passing result, again allowing you to compare the flow rate reading with the leak standard’s rated value.
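The two verification checks can be expressed as a simple pass/fail routine. This sketch assumes the known good part contributes negligible leakage, so the instrument should read approximately the leak standard's rated flow. The 15% margin (inside the 10-20% range above), the 5% reading tolerance, and the function names are hypothetical choices for illustration, not a published acceptance criterion.

```python
def verification_standards(reject_ccm: float, margin: float = 0.15) -> tuple[float, float]:
    """Rated flows for the below-reject and above-reject verification standards."""
    if not 0.10 <= margin <= 0.20:
        raise ValueError("margin should be between 10% and 20% of the reject rate")
    return reject_ccm * (1 - margin), reject_ccm * (1 + margin)


def check_point(reading_ccm: float, standard_ccm: float, reject_ccm: float,
                tolerance: float = 0.05) -> bool:
    """True if the pass/fail verdict is as expected and the reading is near the standard."""
    expected_fail = standard_ccm > reject_ccm
    actual_fail = reading_ccm > reject_ccm
    close = abs(reading_ccm - standard_ccm) <= tolerance * standard_ccm
    return expected_fail == actual_fail and close


def verify_calibration(reject_ccm: float, high_reading_ccm: float,
                       low_reading_ccm: float) -> bool:
    """Both checks must succeed: the above-reject standard fails, the below-reject one passes."""
    low_std, high_std = verification_standards(reject_ccm)
    return (check_point(high_reading_ccm, high_std, reject_ccm)
            and check_point(low_reading_ccm, low_std, reject_ccm))


if __name__ == "__main__":
    # Hypothetical readings from a known good part with each standard attached.
    print(verify_calibration(reject_ccm=5.0, high_reading_ccm=5.70, low_reading_ccm=4.30))
    print(verify_calibration(reject_ccm=5.0, high_reading_ccm=4.90, low_reading_ccm=4.30))
```

The first call returns True. The second returns False because the above-reject standard was not flagged as a failure, which would indicate that the instrument needs a full recalibration.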

The above methods of two-point calibration and calibration verification provide a strong, reliable process for verifying that your leak test instruments are always properly calibrated and ensuring you are always producing good quality parts on your line.