At the most fundamental level, laboratory instruments are data generators that convert physical observations into numbers. Over the last few decades, the way laboratories produce and manage that data has been transformed: analog output and paper-based storage of essential data have given way to what is commonplace today, namely digital output and server-based storage of vast amounts of data.

On the surface, this evolution should be wonderful. We have all been in meetings where the only logical path forward is to collect more data to address an area of uncertainty, and it is natural to assume that more data will yield more meaningful insights. Experience teaches us, however, that this holds only if the right processes and tools are in place to effectively distill raw data into analysis that supports smart, agile business decisions. The importance of the words “effectively” and “agile” cannot be overstated: the relevant processes and tools must be easy and efficient to use. The time and effort required to get from raw data to a decision can be the difference between leading and lagging behind your competition.

Investing in instruments and acquiring more data more accurately is relatively straightforward. The next, all-important step is not as simple: consolidating data from various instruments so it can be analyzed efficiently and used to make informed business decisions. Unfortunately, there is no catalogue to buy from or rule book to reference for getting this right, because no two labs are the same: they have different instruments, operational scales, inputs and outputs, and standards for quality and traceability. In addition, a lab’s data management system often interfaces with other business platforms, such as the company’s MES (manufacturing execution system) or ERP (enterprise resource planning) system. In most cases, these differences necessitate some level of customization when a data management system is implemented.

Consider an analogy: an orchestra playing a symphony. The conductor commands the stage and leads the musicians to perform in harmony; not one note is out of place. Now picture the very same stage and instruments, but replace the trained musicians with a group of unsupervised pre-teen children. What was once soothing and magnificent becomes an unbearable cacophony.

The orchestra and its musical instruments are analogous to your laboratory and its test instruments. Rather than sound, many different instruments are producing data and numbers, all at the same time. For your laboratory to operate in harmony and generate meaningful information for your business, a data management system must play the role of the conductor, coordinating those instruments.

Harmonizing your laboratory’s data management practices requires effort and investment, and those who master it are poised to win in a competitive market. Here are six trends that the most efficient labs are adopting in order to achieve a competitive edge.

Having a centralized data management system in a materials testing lab is critical for streamlining data collection and analysis. | Source: Instron

1. Lab Equipment Should Be Connected To A Centralized Database

As data production continues to grow, automating the collection of data into a centralized database is more important than ever. Manually transferring test data and results from an instrument to a database for storage and analysis is not a value-add process. Almost all of the trends discussed here involve eliminating such non-value-add activities, which drain resources and degrade efficiency.

Depending on the laboratory’s function in your organization, data may be stored in various places, such as a LIMS (laboratory information management system) for the lab’s own purposes or an MES that monitors production efficiency and quality. Either way, when evaluating test instrument suppliers, be sure to ask about their ability to integrate with your existing systems. Some suppliers will encourage this integration, while others shy away from it and point you to their own data management software ecosystems instead. While these supplier-specific solutions may work well within their own product portfolios, they can create barriers to a seamless, centralized data management structure. When evaluating a capital equipment purchase, it is always advisable to engage your IT team early to understand their preferences and ensure a clean integration that can be easily maintained. Data security practices such as backups, archiving, and user management also become far easier for you and your IT team when data is stored centrally.
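
To make “automating data collection to a centralized database” concrete, here is a minimal Python sketch. It assumes an in-memory SQLite database as a stand-in for the central server; the table layout, the function names (`open_central_db`, `record_result`, `results_for_sample`), and the instrument IDs are all hypothetical. A real integration would go through your LIMS or MES and whatever interface your IT team supports.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: an in-memory SQLite database stands in for the central
# server; a real deployment would target the organization's LIMS or MES.
SCHEMA = """
CREATE TABLE IF NOT EXISTS results (
    id            INTEGER PRIMARY KEY AUTOINCREMENT,
    instrument_id TEXT NOT NULL,
    sample_id     TEXT NOT NULL,
    quantity      TEXT NOT NULL,
    value         REAL NOT NULL,
    unit          TEXT NOT NULL,
    recorded_at   TEXT NOT NULL
)
"""


def open_central_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the central results store."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_result(conn, instrument_id, sample_id, quantity, value, unit):
    """Called by an instrument's driver as soon as a reading is available,
    replacing manual transcription of results."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO results "
            "(instrument_id, sample_id, quantity, value, unit, recorded_at) "
            "VALUES (?, ?, ?, ?, ?, ?)",
            (instrument_id, sample_id, quantity, value, unit,
             datetime.now(timezone.utc).isoformat()),
        )


def results_for_sample(conn, sample_id):
    """Retrieve every instrument's results for one sample from one place."""
    rows = conn.execute(
        "SELECT instrument_id, quantity, value, unit FROM results "
        "WHERE sample_id = ? ORDER BY id",
        (sample_id,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    db = open_central_db()
    # Two different (hypothetical) instruments report on the same sample.
    record_result(db, "BALANCE-01", "S-1001", "mass", 12.48, "g")
    record_result(db, "UTM-02", "S-1001", "tensile_strength", 412.5, "MPa")
    for row in results_for_sample(db, "S-1001"):
        print(row)
```

Because every instrument writes to the same table, a single query retrieves all results for a sample, and backups or access control only need to be applied in one place.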

2. Bi-Directional Data Transfer Maximizes Time Savings

Bi-directional data transfer is a more capable implementation of connected test instruments. When most people think of data transfer, they imagine uni-directional output of data. For instance, a weighing scale is connected to a computer to reduce manual data entry: pressing an export button on the scale transfers its indicated mass reading to an associated field on the computer. This is one-way (‘uni-directional’) communication. Two-way (‘bi-directional’) communication is more commonly used with complex instruments that require multiple inputs before the associated test can run. For instance, a materials testing system may require inputs identifying the sample under test, its production batch number, the test type that controls the test system, and the dimensional values needed to calculate the resulting material properties. Once the test is complete, the data and results must be transferred to a LIMS table associated with the sample. Bi-directional communication between the materials testing system and the LIMS automates both the input and the output of data, eliminating the non-value-add time and the risk of human error associated with manual data entry. Connecting your instruments and establishing bi-directional communication can save five to 15 seconds per entry, which adds up to an hour or more per day in higher-volume laboratories (an hour corresponds to 240 entries at 15 seconds saved each, or 720 entries at five seconds).

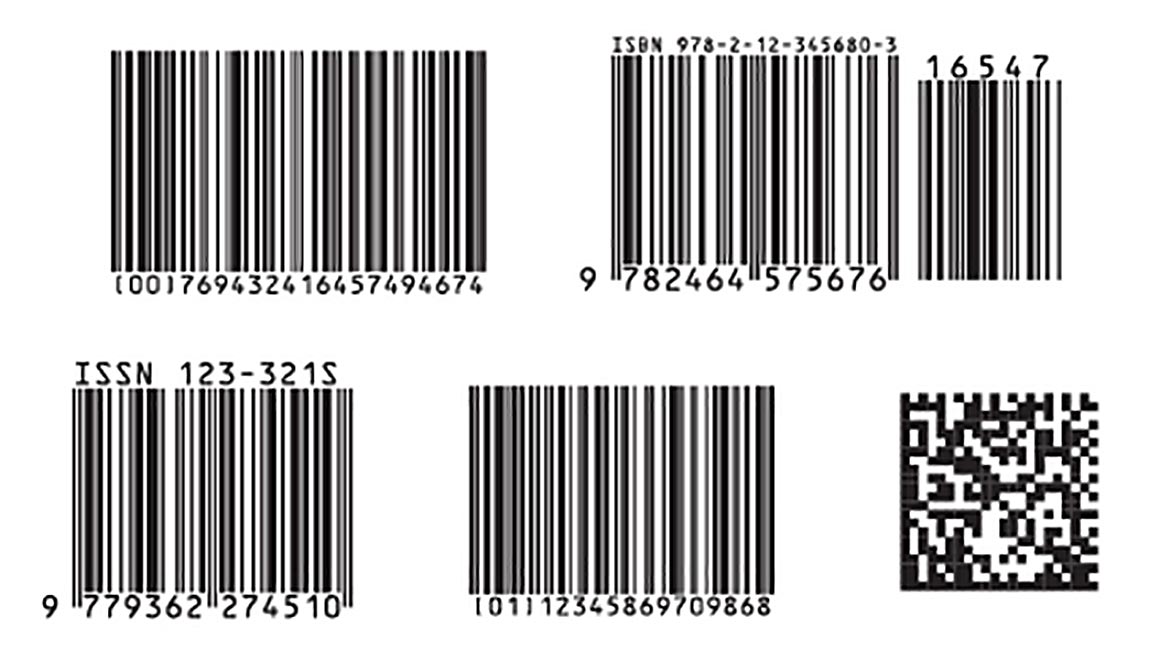

Example of linear barcodes and 2D barcode (bottom right). | Source: iStock images provided by Instron

3. 2D Barcodes Are The Future

Barcodes are the most common automated mechanism for digitally transferring data associated with a test sample to an instrument or database. For decades, one-dimensional (1D), or linear, barcodes have been widely adopted across many industries for identification applications. In recent years, two-dimensional (2D) barcodes have begun to replace linear barcodes because they offer several strong advantages. Chief among them, 2D barcodes hold more data in a smaller footprint: a 2D barcode can contain around 2,000 characters, whereas a 1D barcode can rarely accommodate more than 25. Their small size and large capacity mean they can be used in applications that were previously infeasible, such as small medical devices and vials that typically require stringent identification tracking.

When evaluating test instrument suppliers, it is worth asking how their software handles barcodes. Whether or not barcodes are currently part of your sample identification process, it can pay dividends down the road to have an instrument that supports not only barcode scanners operating as keyboard emulators (which simply type the scanned text into the active field) but also more advanced barcode-driven workflows that reduce the operator’s manual steps.
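
To illustrate a barcode-driven workflow that goes beyond keyboard emulation, the sketch below parses a single 2D barcode payload into the several test inputs a materials testing system needs. The `KEY=VALUE;` layout, the field names, and `parse_barcode_payload` are hypothetical; real deployments often follow an established scheme such as GS1 Application Identifiers, whose parsing rules differ.

```python
# Hypothetical payload layout (not a standard): KEY=VALUE pairs separated by ';'.
REQUIRED_FIELDS = {"SAMPLE", "BATCH", "TEST", "W", "T"}


def parse_barcode_payload(payload: str) -> dict:
    """Turn one scanned 2D barcode payload into named test inputs."""
    fields = {}
    for part in payload.strip().split(";"):
        if not part:
            continue  # tolerate empty segments, e.g. a trailing ';'
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed field: {part!r}")
        fields[key.strip().upper()] = value.strip()
    missing = REQUIRED_FIELDS - set(fields)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return {
        "sample_id": fields["SAMPLE"],
        "batch_number": fields["BATCH"],
        "test_method": fields["TEST"],
        "width_mm": float(fields["W"]),
        "thickness_mm": float(fields["T"]),
    }


if __name__ == "__main__":
    print(parse_barcode_payload("SAMPLE=S-1001;BATCH=B-77;TEST=TENSILE;W=10.0;T=2.0"))
```

The example payload is 50 characters long, already more than a linear barcode can typically hold, yet a single scan fills in five fields and rejects a label that is missing any of them.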

4. Instrument Maintenance Logistics Should Be Automated

It is essential to manage maintenance and calibration visits to keep your test instruments in working order. But why spend time coordinating the logistics of these service visits when you don’t need to? More and more instruments use telemetry data to automate the scheduling of routine service, and even to predict part failures so they can be addressed preemptively, limiting system downtime.
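
As a simple illustration of telemetry-driven scheduling, the sketch below projects when a usage-based service interval will be reached from recent daily cycle counts. It assumes the instrument reports a cycle count since its last service and that future usage will match the recent daily average; `next_service_date` and its parameters are hypothetical. Predicting part failures generally calls for richer models than this linear projection.

```python
from datetime import date, timedelta
from math import ceil
from typing import Sequence


def next_service_date(today: date, cycles_since_service: int,
                      service_interval_cycles: int,
                      recent_daily_cycles: Sequence[int]) -> date:
    """Project the date a usage-based service interval will be reached.

    Hypothetical model: assumes future usage matches the recent daily average.
    """
    if not recent_daily_cycles:
        raise ValueError("need at least one day of telemetry")
    remaining = service_interval_cycles - cycles_since_service
    if remaining <= 0:
        return today  # interval already reached: service is due now
    avg_per_day = sum(recent_daily_cycles) / len(recent_daily_cycles)
    if avg_per_day <= 0:
        raise ValueError("no recent usage; cannot project a date")
    # Round up so the projection never lands after the interval is exceeded.
    return today + timedelta(days=ceil(remaining / avg_per_day))
```

With 80,000 of 100,000 cycles used and an average of 1,000 cycles per day, `next_service_date(date(2024, 3, 1), 80_000, 100_000, [1000, 1200, 800])` returns `date(2024, 3, 21)`, a date that a scheduling system could act on without anyone tracking it by hand.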

5. Remote Management Is Expected

Access to your lab’s data should not be confined by the walls of your laboratory. Whether you are working from home and want to check the status of a test or need to share data with a colleague, remote access to instrument data has become an expectation. One of the inherent benefits of centralizing data in a LIMS or a similar system is that such systems are typically designed around a client/server architecture, in which users and instruments (‘clients’) read and write data stored in a central database (‘server’). Clients come in a variety of forms, such as desktop applications or web-based interfaces, so when evaluating an instrument supplier, it is important to ensure that their remote management platforms align with your team’s intended use.
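
The client/server pattern can be shown with Python’s standard library alone. In this sketch, a small HTTP server stands in for the central system and a client reads a test’s status from it, as a remote user would. The `/tests/<id>` route, the `TEST_STATUS` data, and the function names are hypothetical, and the demo deliberately omits the authentication and encryption that any real remote-access deployment would require.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical central record of test status, keyed by test ID.
TEST_STATUS = {"T-42": {"sample_id": "S-1001", "state": "running", "progress_pct": 65}}


class StatusHandler(BaseHTTPRequestHandler):
    """Server side: answers GET /tests/<id> with that test's status as JSON."""

    def do_GET(self):
        prefix = "/tests/"
        test_id = self.path[len(prefix):] if self.path.startswith(prefix) else None
        status = TEST_STATUS.get(test_id)
        if status is None:
            self.send_error(404, "unknown test")
            return
        body = json.dumps(status).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def start_server() -> ThreadingHTTPServer:
    # Port 0 lets the OS pick a free port; bound to localhost for the demo only.
    server = ThreadingHTTPServer(("127.0.0.1", 0), StatusHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_status(host: str, port: int, test_id: str) -> dict:
    """Client side: any remote user or application reads the same record."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/tests/{test_id}")
        resp = conn.getresponse()
        if resp.status != 200:
            raise LookupError(f"server returned {resp.status} for {test_id}")
        return json.loads(resp.read().decode("utf-8"))
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_server()
    host, port = server.server_address
    try:
        print(fetch_status(host, port, "T-42"))
    finally:
        server.shutdown()
        server.server_close()
```

Any number of clients, whether desktop tools, web pages, or scripts like this one, can read the same central record, which is what makes checking on a test from home possible.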

6. Cybersecurity Must Be A Priority

As the volume of data grows and its storage moves from on-premises to cloud-based servers, the risk of malicious data breaches increases. While centralized data management makes organizations run more efficiently, operations can come to a standstill if these systems are not properly secured. Cybersecurity vulnerabilities can exist in any link of the chain, so security should be discussed not only with regard to the larger data management infrastructure but also for each instrument that is to be integrated into the network.

With the volume of test instrument data continuing to grow, laboratories need solutions that make sense of this data efficiently and effectively so that sound business decisions can be made. Trends such as centralized databases, bi-directional data transfer, 2D barcodes, automated maintenance, remote management, and robust cybersecurity are helping laboratories improve the management of their data. By prioritizing these tools along with their overall data management processes, laboratories will gain a competitive edge in an ever-evolving industry.