I’ve been working with artificial neural networks (ANNs) and machine learning (ML) since the late nineties when I was in graduate school. ML and ANNs are subsets of the world of artificial intelligence and include various algorithms and programming techniques that date back to the dawn of computer science.

Since then, I’ve deployed ANNs and ML algorithms in real-world applications involving robot calibration, nonlinear coordinate transformations, sensor calibration and linearization, optical character recognition, 3D object recognition, and item recognition in image processing applications.

Recently, I’ve also gotten back into academia and am teaching ML and Python programming at SUNY Plattsburgh, where I’ve had the good luck to be teaching again just as Large Language Models (LLMs) like ChatGPT have burst onto the scene.

Is ML Useful In Integration?

You may (still) be wondering whether any of this stuff is actually useful in real-world integration and factory automation scenarios. My own experiments have resulted in some insights, some laughs, some frustration, and some rules of thumb about how to go about making these interesting software development techniques useful in a variety of applications.

Much of the media attention in this space has been paid to human-like interaction and image classification. Most of our readers are interested in factory applications and automation where we’d prefer to reduce human interaction. Furthermore, a very large number of articles (and dozens of enthusiastic start-up companies) have focused on 2D and 3D object recognition primarily in the robotic mixed bin picking space. I’ll skip these—mixed bin picking is less of a computer vision problem and much more of a gripper design and robot speed/price/performance design effort that doesn’t necessarily benefit from much AI. Robots need to move things accurately and quickly, and that just turns out to be mostly a mechanical engineering game.

AI Expectations And Limitations

I’ve always divided the factory world into what I call the carpet and the concrete. Up near the front door, there are comfortable, fancy carpeted offices and copious whiteboard space that let us hypothesize and imagine things to be easy, simple, and inexpensive. Out back, however, there’s a huge expanse of concrete floor with machinery bolted to it and fork trucks bouncing around and racing associates on tight time schedules. Back there, everything seems hard, complicated, and costly.

AI and ML algorithms that train on sample data are generally considered successful in academia when they achieve 90% accuracy. Getting to 98% accuracy is almost unheard of. But our readers know that QC and QA systems need to be much more reliable than the actual processes they monitor—and our modern processes may be 99.99% or more reliable. So, there is a basic mismatch between the capabilities and expectations of the AI/ML computer science community and our factory automation and quality needs. Many startups are learning this the hard way.
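
To put that mismatch in numbers, the short sketch below converts each figure above into wrong calls per million parts. It assumes, as a simplification, that "accuracy" means the fraction of parts classified correctly; the function name is made up for illustration.

```python
# Rough arithmetic only: treats "accuracy" as the fraction of correct calls.
def wrong_calls_per_million(accuracy):
    """Number of incorrect calls expected per one million parts."""
    return round((1.0 - accuracy) * 1_000_000)

for label, accuracy in [
    ("typical academic ML result", 0.90),
    ("exceptional academic ML result", 0.98),
    ("modern factory process", 0.9999),
]:
    print(f"{label:32s} {accuracy:.2%} -> "
          f"{wrong_calls_per_million(accuracy):,} wrong per million")
```

Under that assumption, an inspection step at 90% accuracy would make far more mistakes than the process it is supposed to be checking.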

Where Can We Use It, Then?

Yes, there are places for ML in our automation world! When I teach ML for robotics applications at the university level, I start by showing students how to use neural networks in TensorFlow or PyTorch, or even in low-level C code, for something very simple, like converting back and forth between Celsius and Fahrenheit. Now I know, to be sure, this isn’t an application that requires ML. It’s a very simple linear mathematical function that we all typically learn in middle school. But seeing how a network can arrive at the precise relationship from examples alone, without anyone deriving a formula of any kind, gives us some insight into the utility of ML.
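
As a minimal sketch of that first exercise, the following trains a single linear neuron by plain gradient descent in pure Python rather than TensorFlow or PyTorch, purely to keep it self-contained. The training range, input scaling, learning rate, and epoch count are illustrative assumptions, not values from the class.

```python
# Learn Celsius -> Fahrenheit from examples with one linear neuron.
# The neuron never sees the formula, only (C, F) sample pairs.

celsius = [float(c) for c in range(-40, 101, 10)]   # assumed training range
fahrenheit = [1.8 * c + 32.0 for c in celsius]      # stands in for measured data

SCALE = 100.0            # assumed input scaling so gradient descent behaves well
xs = [c / SCALE for c in celsius]

w, b = 0.0, 0.0          # the neuron's weight and bias
learning_rate = 0.1      # assumed hyperparameter
n = len(xs)

for epoch in range(2000):
    # Prediction error for every sample with the current weight and bias.
    errors = [w * x + b - y for x, y in zip(xs, fahrenheit)]
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2.0 * sum(errors) / n
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

slope = w / SCALE        # undo the input scaling

def predict_f(c):
    """Convert Celsius to Fahrenheit using the learned parameters."""
    return slope * c + b

print(f"learned: F = {slope:.4f} * C + {b:.4f}")
print(f"25 C -> {predict_f(25.0):.2f} F")
```

The learned slope and intercept should settle very close to the textbook 1.8 and 32, even though the code that does the fitting never contains the conversion rule.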

We next graduate from simple linear problems to using a neural network to reproduce the Pythagorean Theorem: that is, if I tell you how long two sides of a right triangle are, can you tell me how long the hypotenuse is, along with the perimeter and area of the triangle? Again, these are very easy things to calculate without ML, but the point is to be able to get the right answer and to be able to generalize without ever having to derive or know what the formulas are. This is what is called “learning from data.”
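
A hedged sketch of the triangle exercise follows, written with NumPy instead of TensorFlow or PyTorch so the whole network fits in a few lines. The layer width, leg-length range, learning rate, and epoch count are assumptions chosen for illustration, and the fit is approximate rather than exact.

```python
import numpy as np

rng = np.random.default_rng(0)

def triangle_targets(legs):
    """Ground-truth hypotenuse, perimeter, and area for each pair of legs."""
    a, b = legs[:, 0], legs[:, 1]
    hyp = np.sqrt(a**2 + b**2)
    return np.column_stack([hyp, a + b + hyp, 0.5 * a * b])

# Assumed training set: 400 random right triangles with legs in [0.2, 1.0].
train_legs = rng.uniform(0.2, 1.0, size=(400, 2))
train_targets = triangle_targets(train_legs)

# One hidden tanh layer; the width and init scale are arbitrary choices.
HIDDEN = 16
W1 = rng.normal(0.0, 0.5, size=(2, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(0.0, 0.5, size=(HIDDEN, 3))
b2 = np.zeros(3)

def forward(x):
    """Return hidden activations and the three predicted outputs."""
    h = np.tanh(x @ W1 + b1)
    return h, h @ W2 + b2

learning_rate = 0.05
for epoch in range(5000):
    h, pred = forward(train_legs)
    # Gradient of 0.5 * mean squared error with respect to the predictions.
    d_pred = (pred - train_targets) / len(train_legs)
    d_h = (d_pred @ W2.T) * (1.0 - h**2)   # backpropagate through tanh
    W2 -= learning_rate * (h.T @ d_pred)
    b2 -= learning_rate * d_pred.sum(axis=0)
    W1 -= learning_rate * (train_legs.T @ d_h)
    b1 -= learning_rate * d_h.sum(axis=0)

# Check generalization on triangles the network has never seen.
test_legs = rng.uniform(0.2, 1.0, size=(200, 2))
_, test_pred = forward(test_legs)
mean_abs_error = np.abs(test_pred - triangle_targets(test_legs)).mean(axis=0)
print("mean abs error (hypotenuse, perimeter, area):", mean_abs_error)
```

Nothing in the training loop mentions squares or square roots; the network only ever sees leg lengths and the three target values.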

Real Things Aren’t Linear And Inputs Aren’t Independent

Where the power of this becomes obvious is when we start looking at real-world behaviors of sensors and components. Real resistors don’t precisely follow Ohm’s Law. Temperature sensors have non-linearities. Force transducers have very bizarre mathematical behavior. Friction is a poorly understood phenomenon and is affected by material condition and environmental parameters. Measuring things accurately and repeatably is, in general, a mysterious ongoing challenge, as many of our readers know all too well. We don’t have precise equations to model these most basic physical systems, especially once we are looking at five 9s. Things that are linear are often slightly nonlinear. Variables that are supposed to be independent are often slightly dependent. An ML-based approach to solving the problem will handle these real-world variations while a theoretical formula based on assumptions that the real world must obey will not.

A Useful Example

One illustrative application where I was able to demonstrate the true value of including a neural network in a robotic application was a connector alignment and robotic insertion project for electronics assembly and data center automation. The idea was to pick up a fiber-optic network plug and insert it into the associated socket. Robotic connector insertion is typically difficult because robots lack human visual understanding and our fine motion and force-following skills. Furthermore, this plug is quite long and has a high aspect ratio, and the socket has a very small entrance aperture requiring precise alignment to correctly insert without damage. Strong-arming the connector into the socket with a traditional rigid robot is risky; we can use force following, a gentle cobot, a lot of compliance, and a spongy gripper to stack the deck in our favor, but the initial alignment in XYZ and roll, pitch, and yaw needs to start out better than 0.1 mm and 1 degree.

A traditional robotic approach would be to use either 3D sensing cameras or multiple 2D cameras and calibrate those against the work cell and robot coordinate systems. We would then visually measure the orientation of the plug relative to the gripper after it has been picked up, and also find the socket location in precise 3D space. Next, we would program several very specific 3D geometric offset calculations to determine where the plug needed to be in 3D space. Finally, we would compute a robot inverse kinematics solution to determine the joint angles needed to reach the correct approach pose.

This is all standard vision-guided robotics stuff, but it is time-consuming and confusing. It is also fragile. Clean a camera lens and slightly bump it, and all the robot calibrations must be redone.

Do It With ML!

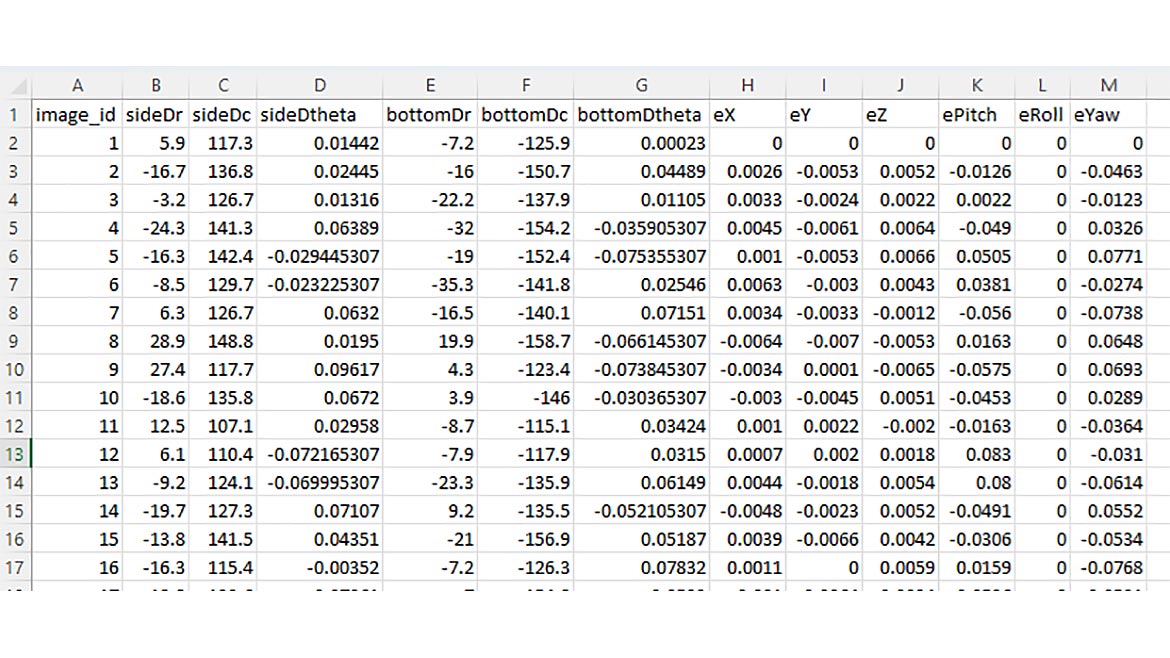

I chose a different approach that completely avoids all traditional vision/robot calibration. We’ll just measure offsets in cameras placed wherever convenient, and we’ll see how those offsets vary as we run a program to move the robot in and out of alignment. That will make a training dataset that will allow our neural network to just figure out how everything relates—linear or not, independent axes or not.

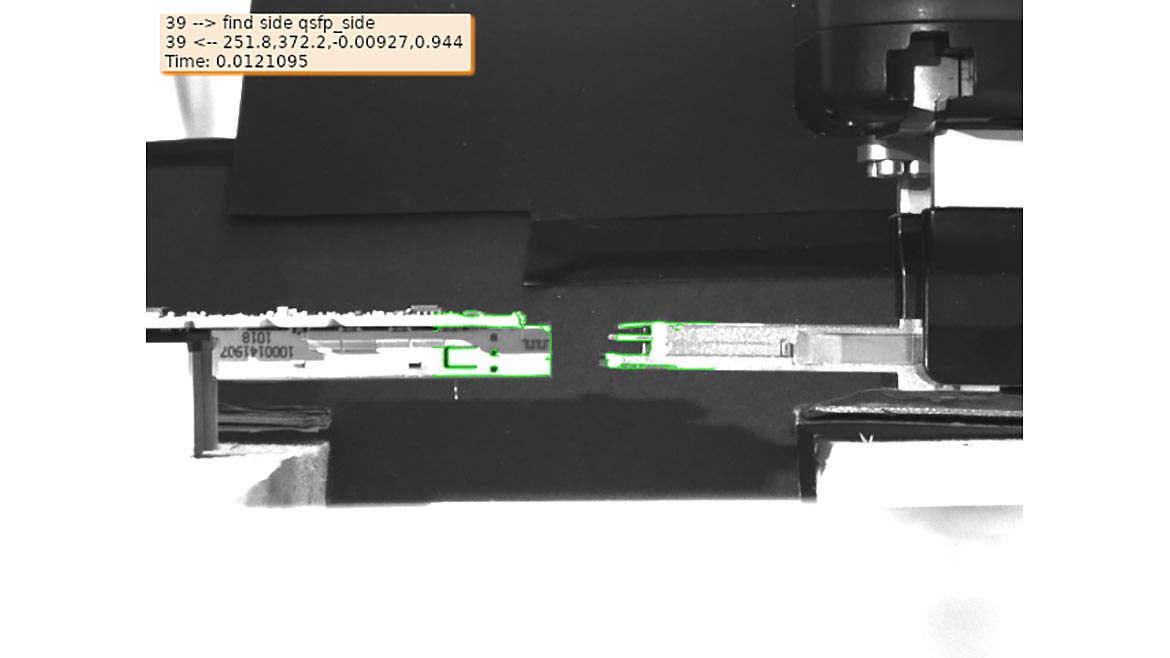

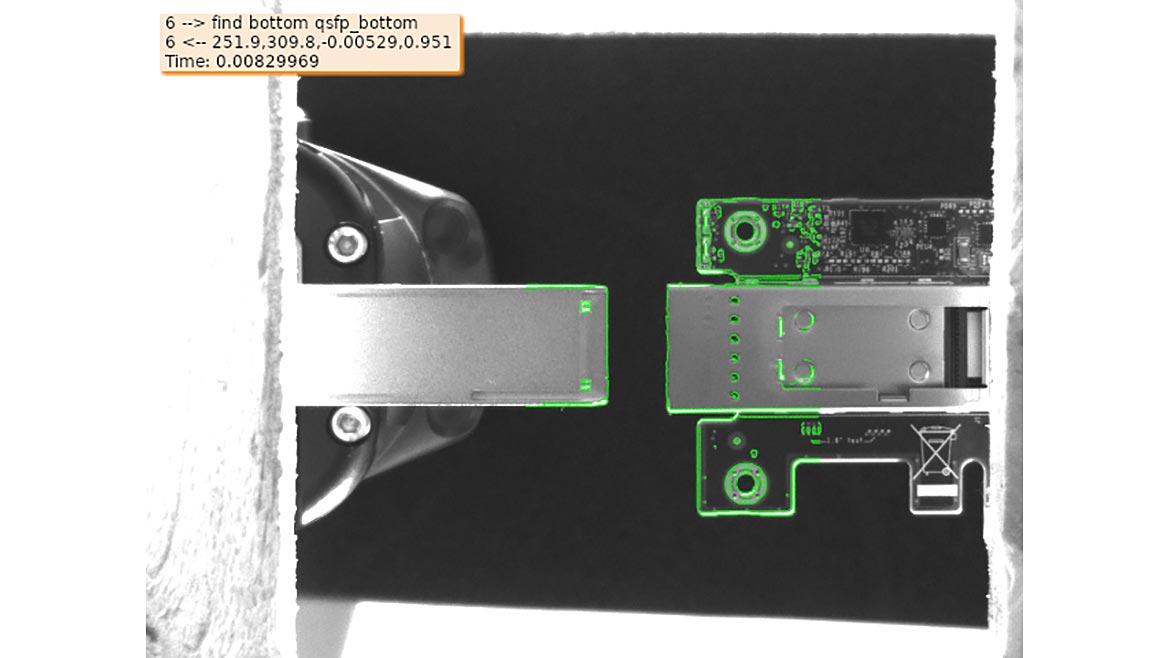

I used two 2D cameras in whatever position was easy to mount them and used standard pattern matching tools to find the socket and the plug and to measure their positional and angular offsets in uncalibrated camera coordinates.

A human-certified visual alignment of the two is made to start the calibration. Then, a simple robot program moves the plug around in all six axes and we simply measure what we see in each camera. This automatically builds a training database that correlates misalignments we see in the cameras with known plug-socket offsets in the physical world.

Feeding this data into a multidimensional linear regression machine learning algorithm should work, since this all “should” be linear. It turns out that there are strange nonlinearities in the images due to uncalibrated lens distortion. No worries! I just used a neural network instead, and it absorbs what we normally would call intrinsic calibration, extrinsic calibration, and hand-eye calibration automatically, without my needing to deep-dive into or compute any of those things explicitly.
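
To illustrate why plain linear regression can fall short here, the sketch below fits a linear map from simulated image measurements back to known offsets, once with an ideal lens and once with radial distortion. Everything in it is a stand-in: the 2D, single-camera setup, the camera matrix, and the distortion coefficient are invented for illustration and are not the project's data or code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground truth: plug-socket offsets (mm) commanded by the robot.
offsets_mm = rng.uniform(-1.0, 1.0, size=(500, 2))

# Assumed uncalibrated camera: an arbitrary linear map into image coordinates.
CAMERA_MAP = np.array([[0.9, 0.2],
                       [-0.1, 0.8]])

def observe(offsets, radial_k):
    """Simulated camera measurement with optional radial lens distortion."""
    ideal = offsets @ CAMERA_MAP.T
    r2 = np.sum(ideal**2, axis=1, keepdims=True)
    return ideal * (1.0 + radial_k * r2)

def linear_fit_rms(image_xy, offsets):
    """Fit offsets = [x, y, 1] @ coeffs by least squares; return RMS residual."""
    design = np.column_stack([image_xy, np.ones(len(image_xy))])
    coeffs, *_ = np.linalg.lstsq(design, offsets, rcond=None)
    residual = design @ coeffs - offsets
    return float(np.sqrt(np.mean(residual**2)))

rms_ideal = linear_fit_rms(observe(offsets_mm, radial_k=0.0), offsets_mm)
rms_distorted = linear_fit_rms(observe(offsets_mm, radial_k=0.2), offsets_mm)

print(f"linear fit, ideal lens:     RMS error {rms_ideal:.2e} mm")
print(f"linear fit, distorted lens: RMS error {rms_distorted:.2e} mm")
```

With the ideal lens the linear fit recovers the offsets essentially exactly; once distortion is added, a residual remains that no linear model can remove, which is the gap a nonlinear model such as a small neural network is meant to close.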

As noted earlier, neural networks don’t really care if the functions are linear, and they certainly don’t care if the variables are not independent. They are architected to assume that things may be nonlinear and that your input variables are somehow dependent on one another. I built and trained my neural network in TensorFlow and PyTorch, and also built a real-time version in C to show how easy deployment could be. The total number of lines of “ML-specific” code in the application was under 100, and you can get the bulk of them from online resources including simple Google searches, coding help websites, and ChatGPT.

What? ChatGPT??

Oops. I said it. OK, we can close with a few words on ChatGPT. This new technology is quite interesting and can be used successfully to start writing small ML programs like the ones described above. (Ask ChatGPT to “Show me how to use TensorFlow to build a neural network to convert from Fahrenheit to Celsius” and prepare to be amazed.) Ideally, and typically, ChatGPT will eliminate the first hour you would have spent building boilerplate code and data structures to hold your training data. It probably will even show you how to build dummy training data that you can replace with your real data later.

What it won’t do is explain what you’re looking at, so I hope you’ve taken a course or read books and tutorials online. It also will not tune things all the way for you… the code it hands you may not work at all until you do some tweaking on data array shapes and mess with hyperparameters, standard data scientist fare. You also will need to understand what the code that you’ve copied is doing… random typing won’t get you home. But it sure can be a great starting point for difficult problems! The students in my Machine Learning for Robotics class were required to use ChatGPT for many of their assignments to see how far they could get and what they would have to do to finish things. Results can be mixed, but I would say you’re best off seeing what’s out there in Google Search, Stack Overflow, and ChatGPT and then trying to hit the ground running with some combination of what you see, rather than starting from a blank slate and making everything with hammer and tongs.

Vision & Sensors

A Quality Special Section

I also teach Discrete Mathematics with Computer Applications, which is a required course for computer science majors since we cover logic, sequences, probability, and Bayes’ Theorem, a common tool used in AI. I fed my weekly quizzes, midterm tests, and the final exam through ChatGPT and graded the results. Surprisingly, ChatGPT had good days and bad days. Two very slightly modified versions of the same question would receive answers of highly different quality. The system could score as high as 85% on one version of a quiz, whereas a trivially modified second version might get a grade as low as 20% or 30%. Furthermore, not offering my typical partial credit was brutal to ChatGPT. It very frequently gets things almost right (but wrong) or gets the right answer for a provably wrong reason.

Performance on my 34-question final exam was similarly uneven. I saw excellent answers and explanations to difficult questions, and at other times read excellent-sounding but completely wrong answers to relatively easy problems.

But definitely use it! The same precautions have always been needed to evaluate information from Stack Overflow, Google Search, Wikipedia… or anywhere. We need to be educated enough to be able to evaluate the quality of what we’re reading. We can learn from things but need to be able to decide whether they are credible first. It’s a tenuous balance. The value of the results is proportional to our ability to understand the subject matter… do I know what I’m looking at, do I know what it means?

The student can only learn when they are ready. We can only understand the answer when we are prepared. It all sounds like something some guru might say!

But it also seems to be a new way to think about learning online, and that’s pretty exciting.