There are many hurdles that can slow technology deployment. Features and cost are always a factor, but complexity and ease-of-use are two key areas that can make or break a deployment for an end-user. My own recent purchase of a software tool is proof that usability is critical yet often overlooked by developers and sales teams.

Purchasing the tool made business sense. It would save our team a great deal of time by automating what was too often a cumbersome manual process. The cost wasn’t unreasonable. While I easily recognized the value of the new tool, my hesitancy came down to usability. It was an incremental feature addition to a tool we were already using. Our staff were very comfortable with the current tool, and on the surface the improved features added value but also a steep learning curve.

Credit to the sales team: they quickly recognized my objections and focused their pitch on usability. This included a preview of a soon-to-be-released beta version that addressed similar issues raised by other customers, and an in-depth session with our end-users to ensure they were comfortable with the tool.

Human nature is to resist change, especially around processes where we have gained comfort and expertise. For developers, this means always considering the end-user in your product design. The machine vision market has made great strides in recognizing the role of the end-user during development. For manufacturing system developers, especially those focusing on AI for automation, usability is a key factor that will drive wide-scale deployments.

Simplifying Machine Vision

As automated quality inspection migrated from research labs to factory floors throughout the early 1990s, system designers struggled with cost, performance, and scalability concerns.

Primarily, these industrial vision systems required dedicated, heavy, and inflexible cabling to connect cameras with processing, analysis, and display platforms. Frame grabber cards were needed to capture video at endpoints, which limited design choice to larger-footprint, more expensive computing platforms. Proprietary approaches to managing video made it difficult to scale multi-vendor systems and network image feeds to various endpoints.

Industrial vision evolved into a viable commercial technology in large part when it adopted GigE Vision as a standard for highly reliable real-time video delivery and networking. With Ethernet, system designers could support required point-to-point connections while gaining the flexibility of video networking, the ability to interwork with a range of different computing platforms, and the option to deploy lightweight, low-cost, commercial cabling.

These types of features brought performance and cost advantages to industrial automation and have allowed machine vision to broaden into a wider range of markets, including medical imaging and security and defense. Then the industry turned to usability.

Products like external frame grabbers brought plug-and-play usability to vision system design. Connect a legacy interface to a GigE Vision or USB3 Vision frame grabber and you could receive machine vision video on your laptop or processing platform with minimal configuration required. Open standard software development kits allowed designers to connect, configure, and acquire images from cameras regardless of the vendor.

Today the vision market has gone even further to improve usability, particularly for products with a manufacturing end-user focus. New software development platforms employ low-code tools that allow any user to develop their own vision and AI applications and workflows for automated inspection. Integrated templates for common vision requirements, such as measurement and edge detection, are complemented by customizable AI models for object detection, character recognition, classification and more.
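To give a sense of what a measurement and edge detection template does behind the drag-and-drop interface, here is a minimal Python sketch. It is an illustration only, not the method used by any particular platform: the function names, the threshold, and the `mm_per_pixel` calibration value are all assumptions. It finds the edges of a bright part along a single row of pixel intensities and converts the distance between them into a width.

```python
def find_edges(profile, threshold):
    """Return the indices where intensity jumps by at least `threshold`
    compared with the previous pixel (hypothetical helper)."""
    edges = []
    for i in range(1, len(profile)):
        if abs(profile[i] - profile[i - 1]) >= threshold:
            edges.append(i)
    return edges


def measure_width(profile, threshold, mm_per_pixel):
    """Estimate part width as the distance between the first and last edge.
    Returns None when fewer than two edges are found."""
    edges = find_edges(profile, threshold)
    if len(edges) < 2:
        return None
    return (edges[-1] - edges[0]) * mm_per_pixel


# One row of grayscale values: dark background, bright part, dark background.
profile = [10, 12, 11, 200, 205, 198, 202, 201, 15, 12]

# Assumed calibration: each pixel covers 0.5 mm.
width_mm = measure_width(profile, threshold=100, mm_per_pixel=0.5)
print(width_mm)  # 2.5
```

In a low-code tool, the end-user would typically set only the region of interest, the threshold, and the pass/fail limits; the logic itself stays hidden in the template.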

In addition, with an open architecture approach, end-users can import open source and third-party vision models that can be customized for deployment. An end-user with limited development skills can use the drag-and-drop platform, integrated templates, and simple annotation tools to build an AI or vision workflow and have an application ready for testing in hours instead of days.

As technology gets easier to use, vision and AI application design is no longer restricted to expert-level developers. A quality manager or IT operations staff can design, train, and deploy their own customizable workflow. In the cost-conscious manufacturing market, internal staff are now owning and managing workflows and customizations that once required expensive consulting or integration support.

Streamlining Smart Device Design for Usability

Similarly, in the smart camera market, software is making it easier both to develop and to use devices. Typically, smart-enabled cameras, sensors, and embedded devices require dedicated AI development skills but still support a limited set of very application-specific functions. Each new application then requires extensive development and training.

Instead, designers can leverage a low-code, template-based, open architecture platform to design smart applications that are more easily transferred across different devices. For example, with minimal modifications the same application can run on devices ranging from high-end to price-sensitive.

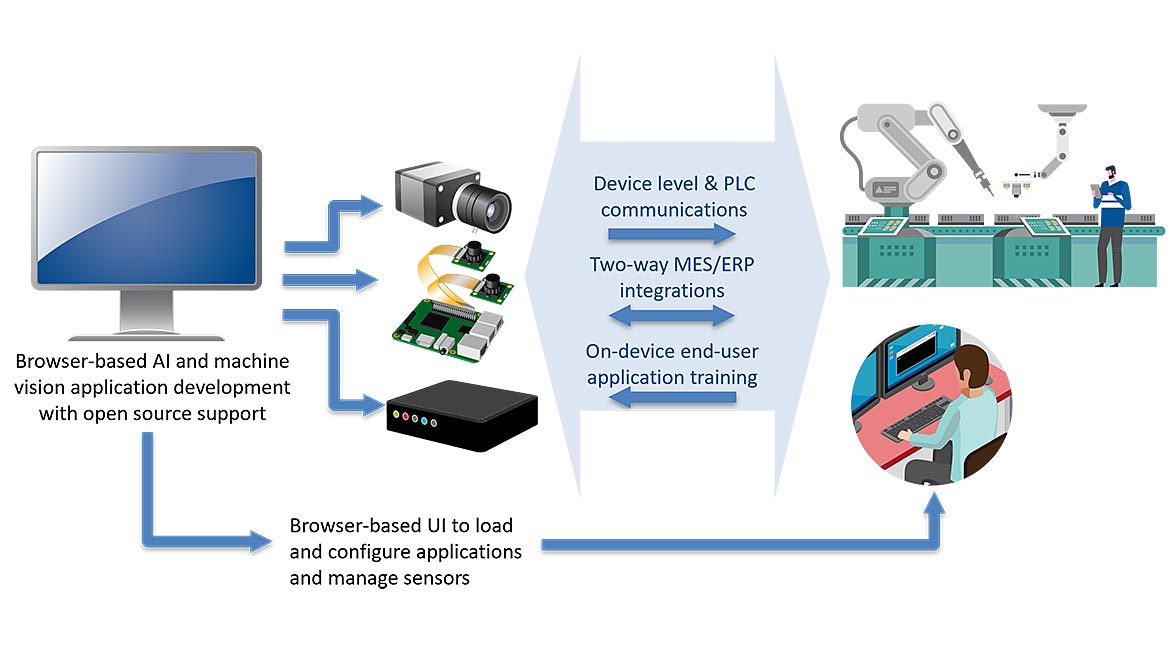

For usability, development packages are integrated with a brandable user interface (UI) that makes it easier for the end-user to connect, configure, and train the device. UI design is often outside of the realm of expertise for a device developer, and as a result many solutions on the market today are difficult to deploy for a non-vision expert. Software tools from the vision market that streamline connectivity and image acquisition, as well as factory floor integrations, are also now built into these new smart devices.

Traditionally, development time for a smart device, including application development, testing and validation, and UI considerations, could consume over 500 hours of resources. In comparison, new software platforms can cut that time in half.

The end-user then receives a device with an intuitive UI that makes it easier for them to train their own vision and AI applications, versus requiring more costly customization. With built-in image acquisition and factory system integrations to simplify control and decision-based system level communications, including MES/ERP and PLC connectivity, it’s then easier to deploy solutions in production. An open standard approach means end-users can deploy new smart devices in existing infrastructure, or if they’re designing a new system choose the components best suited to the application.

Turnkey Vision for the Shopfloor

One area where vision and AI can play a substantial role in reducing manufacturing costs and risk is around manual and semi-automated processes. Despite all the advances in manufacturing automation, about 70 percent of production processes in North America still rely on human decisions.

Humans excel at distinguishing subtle flaws, variations, or differences and can adjust when faced with unpredictability. We are also easily trained, learn by example, and can adapt to new products or requirements. However, our eyes can be easily tricked, and unlike machines we get bored and distracted. As a result, manual inspection tasks typically exhibit error rates of 20 to 30 percent, most of them attributable to human error. These errors usually involve a missed defect, an incorrect assembly, or a “false positive” where an operator identifies an issue that does not exist.

Vision & Sensors

A Quality Special Section

To aid human decision-making, new turnkey solutions are integrating AI and vision inspection and traceability tools, processing, lighting, and machine vision cameras. Built on a development platform similar to those used for automated inspection and smart camera design, AI and vision app templates provide a quick start for a quality manager or operations staff to design their own customized workflow. This includes visual inspection tools to help a human operator spot a defect, and traceability to capture product images and operator notes for compliance requirements.

When these solutions are seamless for the end-operator, and fit within current processes and work environments, they can deliver impressive results. In an electronics assembly application, a visual inspection app integrating AI-based object detection has reduced final inspection of count and placement of components from as long as 10 minutes to less than 30 seconds.
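As a rough illustration of the count-and-placement check described above, the Python sketch below compares the components an object detection model reports against the layout the board is expected to have. It is a simplified sketch under stated assumptions, not the application from the example: the `Detection` type, the `check_board` function, the coordinates, and the tolerance are all hypothetical, and the detections are hard-coded rather than produced by a real model.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """A component label and its center position (hypothetical type)."""
    label: str
    x: float
    y: float


def check_board(expected, detections, tolerance):
    """Return a list of issues; an empty list means count and placement pass.

    Each expected component must be matched by one detection with the same
    label whose x and y are each within `tolerance` of the expected position.
    """
    issues = []
    unmatched = list(detections)
    for exp in expected:
        match = None
        for det in unmatched:
            if (det.label == exp.label
                    and abs(det.x - exp.x) <= tolerance
                    and abs(det.y - exp.y) <= tolerance):
                match = det
                break
        if match is None:
            issues.append(f"missing or misplaced {exp.label} near ({exp.x}, {exp.y})")
        else:
            unmatched.remove(match)
    # Anything left over was detected but not expected at that position.
    for det in unmatched:
        issues.append(f"unexpected {det.label} at ({det.x}, {det.y})")
    return issues


# Assumed reference layout and assumed detector output for one board.
expected = [Detection("capacitor", 10, 10), Detection("resistor", 30, 10)]
detections = [Detection("capacitor", 10.4, 9.8), Detection("resistor", 45, 10)]

for issue in check_board(expected, detections, tolerance=1.0):
    print(issue)
```

Here the capacitor passes, while the resistor is flagged twice: once as missing from its expected position and once as an unexpected part elsewhere. An operator-facing app would show those two locations on the image rather than print text.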

As the manufacturer and, maybe more important, its operators gain comfort with the technology, they plan on expanding visual inspection with guided assembly instructions to eliminate errors at the source. Focusing on usability, these can be visually guided instructions that clearly show an operator the next step for assembly and incorporate automated visual inspection to verify each process is completed successfully.

Easier for Users

Technology is great when it’s easy to use. There’s nothing more frustrating for an end-user than facing a hurdle whose solution may be buried somewhere in 500 pages of documentation.

The vision industry has made great strides solving design and usability issues, and as a result the market for its technology continues to grow at a rapid pace. We’re still in the early stages of AI for manufacturing, but if the industry follows a similar path and ensures solutions can be easily trained and deployed by quality managers and operations staff, there’s tremendous opportunity to improve processes and lower costs on the shop floor.